When will any company catch up with OpenAI? This question must have lingered in the minds of many readers over the past year.

If only one company in the world could surpass OpenAI, Google should be the most promising candidate.

Google, also a North American AI giant, has the same AGI target, world-class technical talent, and global financial resources; it even created Transformer, the core architecture of OpenAI's large models.

However, since 2023, the AI field has seen one twist after another, and OpenAI has always stayed one step ahead of Google. Every time Google comes up with a "revenge killer" to avenge its earlier humiliation, OpenAI steals the spotlight.

For example, the latest blockbuster multimodal large model, Gemini 1.5, stayed on the technology trending list for only a few hours before attention moved on, because Sora, launched shortly afterward, was more explosive and more eye-catching.

There is no doubt that the AI field is experiencing the most exciting "Fast and Furious" competition in the world. The leading OpenAI has won beautifully, and Google, following closely behind, has lost with dignity. Their situations happen to be accurately captured by the popular movies of the Year of the Dragon Spring Festival.

If OpenAI is the stunning and exciting "Hot", then Google is like the middle-aged racing driver in "Speeding Life 2" who struggles to pursue his dream: he mustered the courage to go full speed ahead, but instead of winning, he ended up in a big crash.

It remains to be seen who will take the holy grail of AGI at the end of the track, but over the past year or so, just watching the initial stages of this long-distance race has been extremely exciting.

Google and OpenAI have been fighting against each other repeatedly in the AI battle. Let's take a look at the overall situation of the industry competition between North American AI giants from this exciting "two-horse race".

Google's three-game losing streak

The passionate confrontation between North American AI giants

Currently, there are three North American AI giants competing for the holy grail of AGI: OpenAI, Google, and Meta.

Among them, Meta takes the open source route, and its LLaMA large model series is currently at the center of the most active AI open source community in the world. OpenAI and Google are on the same track, mainly building "closed source" large models.

OpenAI has been mocked as “no longer open”, and a Google employee has bluntly warned that “neither we nor OpenAI have a moat”. From another perspective, however, a closed-source business strategy must provide high-quality models and irreplaceable capabilities to convince users to pay. This drives model makers to keep innovating and maintain their competitive advantages, and it is an indispensable commercial force in the AI industry.

Therefore, among the three North American AI giants, Meta competes on ecosystem, while OpenAI and Google compete on models.

So, let’s focus on the model track. How is the competition going?

Throughout 2023, Google, on the same track as OpenAI, felt the full weight of peer pressure.

This race can be divided into three stages:

Round 1. ChatGPT VS Bard.

Needless to say, in this round OpenAI caught Google off guard on its home turf, and since then Google has had no choice but to follow in OpenAI's footsteps.

In November 2022, OpenAI released ChatGPT, which made a big splash and kicked off a global craze for large language models.

Notably, Transformer, the foundational technology behind ChatGPT, was introduced by Google, and the emergent abilities of large language models were identified by Jason Wei, a Google researcher who later moved to OpenAI. OpenAI was using Google's technology, poaching Google's people, and challenging Google's AI leadership.

Google’s response was to “get angry for a moment”.

In March 2023, Google rushed out Bard. However, the model itself was relatively weak: it had limited functionality at launch, supported only English, was open to only a small number of users, and was completely unable to compete with ChatGPT.

Round 2. GPT-4 VS PaLM 2.

Some people say that Google was using the "Tian Ji horse racing" strategy, deliberately fielding the relatively weak model Bard in the first round. This makes sense, but every one of OpenAI's horses is a good horse.

OpenAI soon launched the upgraded GPT-4 and opened the GPT-4 API, leaving Google further behind.

At the Google I/O 2023 conference in May, PaLM 2, which was sent out to compete with GPT-4, was also a "transitional product". Zoubin Ghahramani, vice president of Google Research, said that PaLM 2 was an improvement on the earlier model, which only narrowed the gap between Google and OpenAI in AI but did not surpass GPT-4 overall.

In this round, Google was still behind. Google was clearly aware of this: it announced at the conference that it was training PaLM's successor, named Gemini, and was betting hundreds of millions of dollars on it, preparing to stage a "Prince's Revenge" at the end of the year.

Round 3. Gemini family VS Sora+GPT-5.

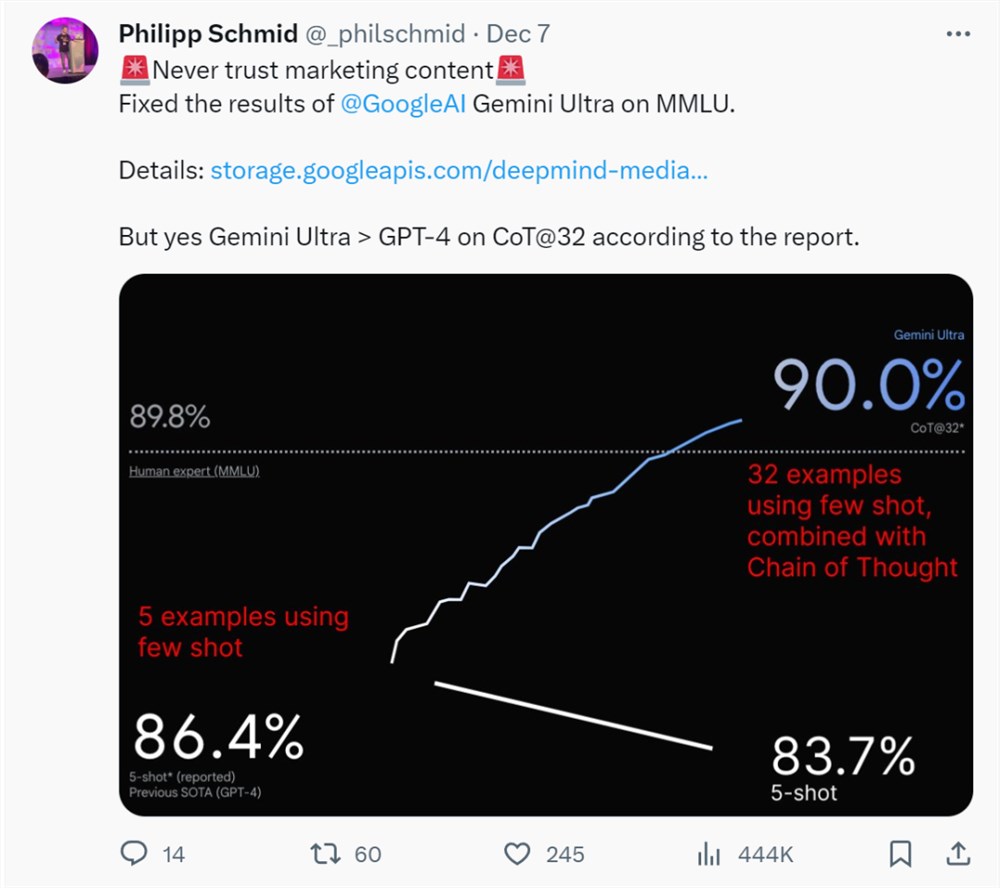

Google Gemini arrived in December 2023. Billed as Google's strongest, largest, and most versatile AI model, it was called a "revenge killer" by the media. During this period, OpenAI was preoccupied with staging its own palace drama, "Empress Zhen Huan Returns to the Palace", and released no particularly explosive products. This time, could Google take back everything that belonged to it?

Unfortunately, Google has not been able to stage the "Return of the Dragon King" in the field of AI.

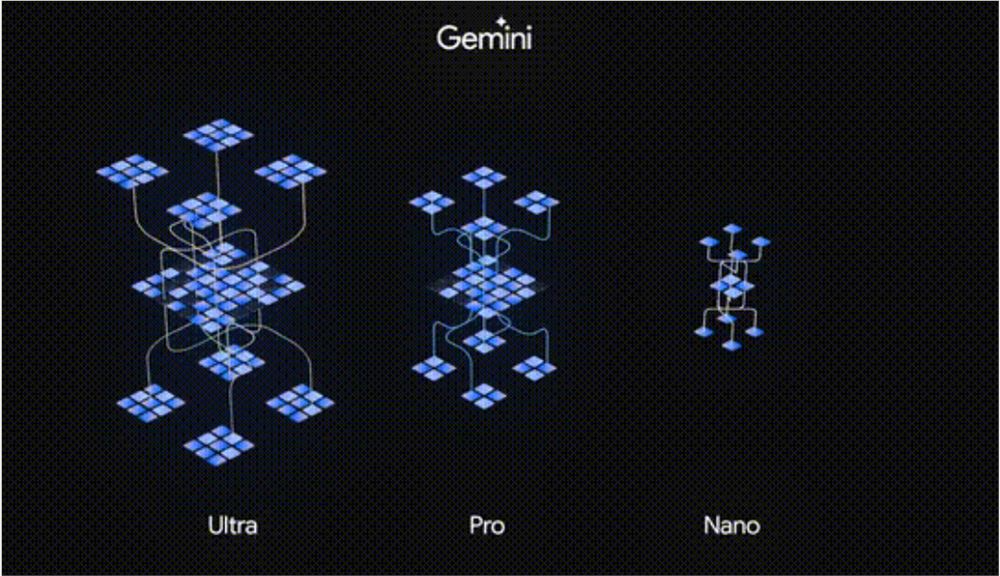

Gemini comes in three sizes: Nano, Pro, and Ultra. Gemini Pro lagged behind OpenAI's GPT models on common sense reasoning tasks, and Gemini Ultra held an advantage of only a few percentage points over GPT-4, which OpenAI had produced a year earlier. Moreover, the multimodal demo video that claimed to beat GPT-4 was revealed to contain post-production and editing, and Gemini was reported to have been trained on Chinese corpora generated by Chinese models, at times claiming to be Wenxin Yiyan.

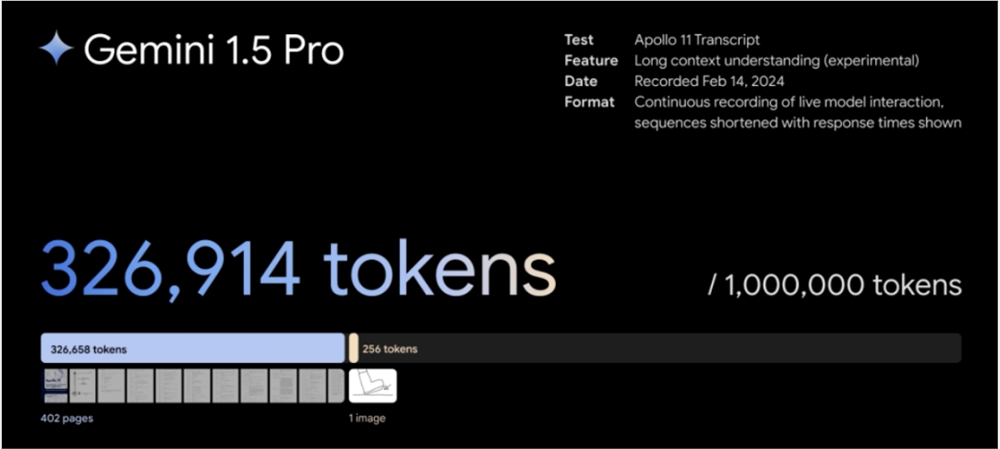

Google kept working hard and released the multimodal large model Gemini 1.5 just a few days after the release of Gemini Ultra. It can stably process up to 1 million tokens, setting a record for the longest context window.

This would have been an exciting achievement, if it weren't for Sora.

A few hours later, OpenAI launched Sora, a text-to-video generation model. It once again amazed the world with its unprecedented video generation performance and the commercialization of world models, stealing the attention that should have belonged to Gemini 1.5 and strengthening OpenAI's AI leadership. At present, people tend to think that OpenAI is still one step ahead of Google.

Previously, everyone speculated that GPT-5 was almost fully trained. With Google's strongest model, Gemini 1.5, now released, some people have already called on Altman, asking how long he will keep his treasure under wraps and why he does not release GPT-5 soon.

At this point, the North American AI "Tian Ji Horse Race" that lasted for about a year has come to a temporary end with Google's three consecutive defeats.

AGI’s Different Path

Google's difficulty in flying

AGI is a long race. On a longer time axis, the one-year contest between Google and OpenAI, and the wins and losses of the moment, may not matter much in the future. Competing on the top track at all is itself proof of Google's AI strength.

Rather than the win or loss itself, what is more worth discussing is why Google has been the "king of hard work" for a whole year, yet has always been left behind by OpenAI and cannot catch up no matter how hard it tries.

In the story of Tian Ji's horse racing, if he loses once it is a tactical mistake, but if he loses every time, he might want to pay attention to whether there are problems with the horse breeds, stables, fodder, etc.

Going back to the source, Google and OpenAI can be said to have the same destination but different paths.

The same destination means that both sides aim to achieve artificial general intelligence and claim the holy grail of AGI.

Different paths mean that the two parties have chosen different technical routes. OpenAI regards more general language capabilities as the basis for achieving AGI, so it adopted the Transformer architecture, which is crucial to the field of NLP, and created the GPT series of models, which led to the stunning debut of ChatGPT.

Google is different. Over the years, Google and its AI research organization DeepMind have used reinforcement learning and deep learning to tackle a variety of AI problems, accumulating a wide range of technologies, such as the groundbreaking AlphaGo, AlphaFold, which transformed biology, and NLP technologies such as Transformer.

This is like two drivers preparing cars for a competition. OpenAI picked one class of race for AGI, say formula racing, and then developed and built models with language at the core, optimizing and re-engineering the car's (the model's) structure, dimensions, engine, cylinders, and so on. Google's DeepMind was not sure which kind of vehicle would finish the AGI race, and it had many technical tools at hand, so it tried building formula cars, sports cars, and motorcycles alike.

Neither route was inherently superior. However, with the emergence of intelligence in large language models, the technical route chosen by OpenAI has been shown to be more promising for achieving AGI, while Google DeepMind's technical route has exposed obvious shortcomings:

1. The directions are scattered and the cost is high. Extensive innovation across many technical directions consumed a great deal of money, and the conflict between DeepMind and Google's parent company Alphabet over commercialization grew increasingly serious. While OpenAI's financing accelerated significantly, Google cut costs through layoffs in order to increase its investment in AI.

2. There are too many options to choose from and it’s hard to focus. Google has pioneered many technologies, but attention and sustained research effort were spread thin across them, making breakthroughs hard to come by. The most typical example is the Transformer architecture, which was invented by Google but carried forward by OpenAI. The emergent abilities behind ChatGPT were also identified by a Google researcher but were not taken seriously; he left for OpenAI and pushed the work forward there.

3. Implementation is slow and results take too long. Google is also well known for its conservatism toward AI, which makes it inefficient at turning research into products even though it has mastered advanced technology. A former Google employee once complained that Google's projects usually start with a lot of hype, then release nothing, and then get killed a year later. The popularity of Sora illustrates this: Google has the corresponding technical reserves and results in the diffusion models and text-to-image models used to train Sora, but it simply failed to come up with a product like Sora.

As we can see, because Google bet on the wrong track at the beginning, OpenAI had already taken the lead by the time large language models became the most promising path to AGI. At that point, when Google wanted to return to the technical track OpenAI was on, it was naturally at a disadvantage.

One wrong step leads to another wrong step in life

Holding on means everything

To be honest, Google is already actively addressing problems, including incorrect technology strategy choices, internal management efficiency and personnel redundancy, and the outflow of AI technical talent.

In April 2023, Google merged its two leading AI teams, Google Brain and DeepMind, to jointly develop Gemini. Judging by the final results, Gemini performs very well, and version 1.5 is currently one of the world's leading large models. Internal resources have also been tilted heavily toward AI, and some AI talent that had left has returned to Google.

These actions show that once Google identified the right track, its determination and speed in chasing OpenAI were first-class.

But the reality of still lagging behind also fully illustrates one point: one's own failure is terrible, but a friend's success is even more worrying.

Although Google has done its best to solve its own problems and push its large models forward, it cannot keep pace with OpenAI's even faster acceleration.

On the one hand, OpenAI's R&D team is working at full capacity, while Google's newly merged team still needs time to gel. Bill Peebles, a core developer of Sora, once revealed that the team worked intensively for a year with hardly any sleep. After the merger of Google Brain and DeepMind, many employees had to give up the software they were familiar with and the projects they were working on in order to develop Gemini. The delays and stalls caused by these internal adjustments inevitably hinder Google from catching up with OpenAI.

On the other hand, while Google is winning back returning talent, OpenAI is siphoning off top AI talent from around the world, and with great momentum. Just in February, Altman publicly stated on social media that "all key resources are in place and are very focused on AGI" and recruited talent online. The competition in AI is ultimately a competition for talent, because the most important resource for AGI is intellectual. There are only so many top-notch people out there, which makes people worry about whether Google can catch up with OpenAI.

In the movie "Speeding Life 2", after the protagonist overturned his car while trying to race again, he did not continue to pursue winning on the track. Instead, as a driver who deeply loves racing, he stepped onto the track just to prove himself.

The battle between Google and OpenAI cannot be simply concluded as a win or a loss. As Google said in “Why We Focus on AI (and to what end)”: We believe that AI can become a foundational technology that will revolutionize the lives of people around the world - this is exactly what we are pursuing and what we are passionate about!

All the AI "racers" who have the courage to step onto the track deserve applause. And this AGI competition full of speed and passion will surely bring more shocks to us in the audience.