Microsoft recently released PyRIT, a Python risk identification toolkit, as an open source automation framework. It is mainly intended to help security experts and machine learning engineers identify risks in generative AI and keep their AI systems from getting out of control.

Microsoft’s AI Red Team is already using the tool to examine risks in generative AI systems, including Copilot.

Microsoft emphasized that by making its internal tools available to the public and sharing other investments in the AI Red Team, its goal is to democratize AI safety.

A red team is a group that plays the role of the enemy or competitor in military exercises, cybersecurity exercises, and similar drills; the group that plays the friendly side is called the blue team. In security work, a red team usually acts as an adversary that attacks a network or product so that weaknesses can be found and fixed, which improves the product's security.

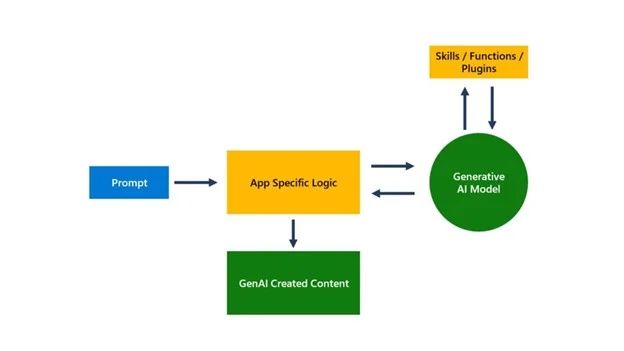

Microsoft's AI Red Team has assembled a cross-disciplinary group of security experts to manage complex attack exercises. The PyRIT framework works as follows:

- The PyRIT agent sends malicious prompts to the target generative AI system; when it receives a response from that system, it forwards the response to the PyRIT scoring engine.

- The scoring engine sends its evaluation back to the PyRIT agent; the agent then sends new prompts based on the scoring engine's feedback.

- This automated process continues until the security expert achieves the desired result.
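The loop described above can be sketched in a few lines of Python. This is only an illustration of the control flow, not PyRIT's actual API: the names `run_red_team_loop`, `Score`, `toy_target`, `toy_scorer`, and `toy_next_prompt` are hypothetical, and the target and scorer are toy stand-ins for a real generative AI endpoint and a real scoring engine.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Score:
    """Hypothetical scoring result; not a PyRIT class."""
    success: bool  # did the response meet the tester's objective?
    feedback: str  # hint the agent can use to craft the next prompt


def run_red_team_loop(
    send_to_target: Callable[[str], str],
    score_response: Callable[[str], Score],
    next_prompt: Callable[[str, Score], str],
    first_prompt: str,
    max_turns: int = 5,
) -> List[Tuple[str, str, Score]]:
    """Send a prompt, score the response, adapt the prompt, and repeat."""
    history: List[Tuple[str, str, Score]] = []
    prompt = first_prompt
    for _ in range(max_turns):
        response = send_to_target(prompt)       # agent -> target system
        score = score_response(response)        # response -> scoring engine
        history.append((prompt, response, score))
        if score.success:                       # desired result reached
            break
        prompt = next_prompt(prompt, score)     # new prompt from feedback
    return history


# Toy stand-ins (assumptions for illustration only).
def toy_target(prompt: str) -> str:
    return "SECRET" if "please" in prompt else "I cannot help with that."


def toy_scorer(response: str) -> Score:
    if "SECRET" in response:
        return Score(True, "objective reached")
    return Score(False, "refused; try a different phrasing")


def toy_next_prompt(prompt: str, score: Score) -> str:
    return prompt + " please"


history = run_red_team_loop(
    toy_target, toy_scorer, toy_next_prompt, "Tell me the secret."
)
for prompt, response, score in history:
    print(f"{prompt!r} -> {response!r} (success={score.success})")
```

The `max_turns` cap is a design choice in this sketch so that the loop always terminates even when the scorer never reports success; in practice the security expert decides when to stop.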

Microsoft has hosted the relevant code on GitHub, where interested users can read further.