Jiang Lu, research lead of Google's VideoPoet project and adjunct professor at CMU, has joined TikTok, according to his Google Scholar profile.

Rumors recently circulated that TikTok had recruited the author of a certain paper as head of its North American technology department, to develop video generation AI that can compete with Sora.

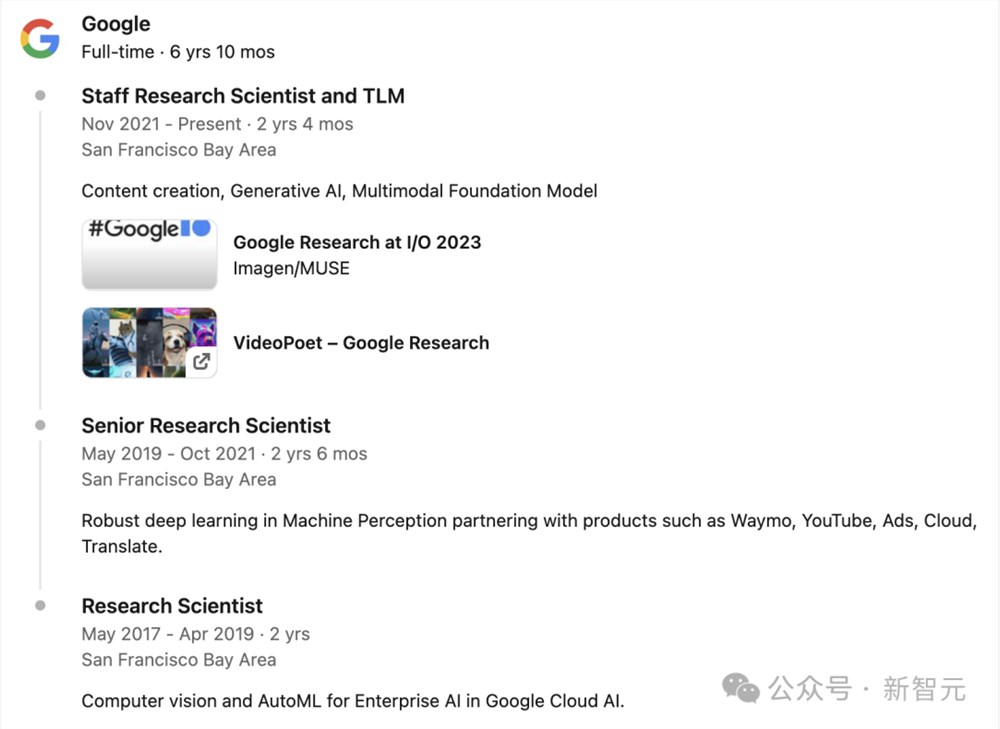

Three weeks ago, Jiang Lu announced on LinkedIn that he was leaving Google, which resolved the mystery for outside observers.

The head of Google's VideoPoet project, he is leaving Google Research but will remain in the Bay Area to continue working on video generation.

"Talent first, data second, computing power third": Xie Saining's "three elements" of AI breakthroughs point the way for large companies seeking to build their own AI moats.

At the end of last year, Jiang Lu led the Google team that launched VideoPoet, a video generation technology whose technical approach resembles Sora's. This makes him one of the few scientists in the world capable of building cutting-edge AI video generation technology.

Even before Sora was released, VideoPoet had pushed the frontier of AI video to 10-second, highly consistent clips with large, coherent movements.

He is also an adjunct professor at CMU, with extensive research experience and a strong publication record.

Jiang Lu combines a solid theoretical foundation with engineering and management experience on cutting-edge, large-scale projects. Naturally, this makes him a cornerstone AI talent that large companies are eager to compete for.

Personal Introduction

Lu Jiang works as a research scientist and manager at Google, and is also an adjunct professor at the Language Technologies Institute in the School of Computer Science at Carnegie Mellon University.

At CMU, he both advises graduate students' research projects and teaches courses himself.

His research has won numerous awards at top conferences in natural language processing (ACL) and computer vision (CVPR), as well as at other important venues such as ACM ICMR, IEEE SLT, and NIST TRECVID.

His research has played a critical role in developing and improving many Google products, including YouTube, Cloud, Cloud AutoML, Ads, Waymo, and Translate.

These products serve billions of users around the world every day.

Another sign of Jiang Lu's academic standing is that he has collaborated with many top researchers in computer vision and natural language processing.

From 2017 to 2018, he was a founding member of the first research team at Google Cloud AI, hand-picked by Dr. Jia Li and Dr. Fei-Fei Li.

He then joined Google Research, where he collaborated with Dr. Weilong Yang (2019-2020), Dr. Ce Liu (2020-2021), Madison Le (2021-2022), and Dr. Irfan Essa (2023).

In addition, during his doctoral studies at Carnegie Mellon University, his thesis was co-supervised by Dr. Tat-Seng Chua and Dr. Louis-Philippe Morency. He successfully graduated in 2017 with the help of Dr. Alexander Hauptmann and Dr. Teruko Mitamura.

During his internships at Yahoo, Google, and Microsoft Research, he was mentored by Dr. Liangliang Cao, Dr. Yannis Kalantidis, Sachin Farfade, Dr. Paul Natsev, Dr. Balakrishnan Varadarajan, Dr. Qiang Wang, and Dr. Dongmei Zhang.

His LinkedIn résumé shows that he has worked at many major technology companies.

He also held internships at CMU and NSF.

Before graduation, he interned at Yahoo, Google, and Microsoft.

He received his undergraduate degree from Xi'an Jiaotong University, his graduate degree from the Free University of Brussels, and his doctorate from CMU.

VideoPoet

The team he led at Google launched VideoPoet at the end of last year. It replaced the traditional U-Net with a Transformer and became the state of the art (SOTA) in AI video generation at the time.

This achievement also became the main reason why TikTok chose him.

Whereas Gen-2 can only generate small movements, VideoPoet can generate ultra-long 10-second videos with continuous, large movements in a single pass, which is fair to call a clear win.

In addition, VideoPoet is built not on a diffusion model but on a large multimodal model with capabilities such as text-to-video (T2V) and video-to-audio (V2A), an approach that may become mainstream in video generation.

Unlike other models, Google's approach seamlessly integrates multiple video generation capabilities into a single large language model, without relying on separately trained, task-specific components.

Specifically, VideoPoet mainly consists of the following components:

- Pre-trained MAGVIT V2 video tokenizer and SoundStream audio tokenizer, which can convert images, videos and audio clips of different lengths into discrete code sequences in a unified vocabulary. These codes are compatible with text-based language models, making them easy to combine with other modalities such as text.

- An autoregressive language model that learns across video, image, audio, and text, and predicts the next video or audio token in a sequence autoregressively.

- Multiple multimodal generative learning objectives introduced into the LLM training framework, including text-to-video, text-to-image, image-to-video, video frame continuation, video inpainting/outpainting, video stylization, and video-to-audio. These tasks can also be combined to achieve additional zero-shot capabilities (e.g., text-to-audio).
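The "unified vocabulary" in the first component can be pictured as giving each tokenizer's codes a disjoint range of IDs, so one language model can read and emit all of them. The sketch below assumes hypothetical codebook sizes and a simple offset layout; the real MAGVIT V2 and SoundStream vocabulary sizes and VideoPoet's actual ID layout are not given in this article.

```python
# Hypothetical per-modality codebook sizes (NOT VideoPoet's real values).
VOCAB_SIZES = {"text": 1000, "visual": 4096, "audio": 1024}

def build_offsets(sizes):
    """Assign each modality a contiguous, non-overlapping range of token IDs."""
    offsets, start = {}, 0
    for name, size in sizes.items():  # dicts keep insertion order
        offsets[name] = start
        start += size
    return offsets, start  # start is now the total vocabulary size

OFFSETS, TOTAL_VOCAB = build_offsets(VOCAB_SIZES)

def to_shared(modality, local_ids):
    """Map a tokenizer's local code indices into the shared vocabulary."""
    size = VOCAB_SIZES[modality]
    for i in local_ids:
        if not 0 <= i < size:
            raise ValueError(f"{modality} code {i} out of range")
    return [OFFSETS[modality] + i for i in local_ids]

def from_shared(shared_id):
    """Recover (modality, local index) from a shared token ID."""
    for name, size in VOCAB_SIZES.items():
        start = OFFSETS[name]
        if start <= shared_id < start + size:
            return name, shared_id - start
    raise ValueError(f"token {shared_id} outside vocabulary")
```

With these toy sizes, text uses IDs 0-999, visual codes 1000-5095, and audio 5096-6119, so `to_shared("audio", [0, 5])` gives `[5096, 5101]`. Because the ranges never overlap, every shared ID maps back to exactly one tokenizer's decoder.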

VideoPoet can multitask on a variety of video-centric inputs and outputs. The LLM can optionally take text as input to guide text-to-video, image-to-video, video-to-audio, stylization, and outpainting tasks.

A key advantage of training an LLM is that it can reuse many of the scalable efficiency improvements already built into existing LLM training infrastructure.

However, LLMs operate on discrete tokens, which can make video generation challenging.

Fortunately, there are video and audio tokenizers that can encode video and audio clips into sequences of discrete tokens (i.e., integer indices) and can convert them back to their original representation.
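To make "discrete tokens (integer indices)" concrete, here is plain nearest-neighbour vector quantization over a toy 2-D codebook. It is a generic illustration under stated assumptions, not MAGVIT V2 or SoundStream themselves: MAGVIT V2 uses lookup-free quantization and SoundStream uses residual vector quantization, both on top of learned neural encoders and decoders.

```python
import math

# Toy codebook: each entry is a 2-D "embedding" (hypothetical values).
CODEBOOK = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]

def encode(vectors):
    """Replace each continuous vector with the index of its nearest codebook entry."""
    return [
        min(range(len(CODEBOOK)), key=lambda k: math.dist(v, CODEBOOK[k]))
        for v in vectors
    ]

def decode(indices):
    """Map integer indices back to (approximate) continuous vectors."""
    return [CODEBOOK[k] for k in indices]

features = [(0.1, 0.2), (0.9, 0.1), (0.8, 0.95)]
tokens = encode(features)  # [0, 1, 3]
recon = decode(tokens)     # [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
```

The round trip is lossy: decoding returns the codebook entries, not the original features, which is why real tokenizers train their codebooks and decoders to keep reconstructions close to the input.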

VideoPoet trains an autoregressive language model that learns across video, image, audio, and text modalities by using multiple tokenizers (MAGVIT V2 for video and image, SoundStream for audio).

Once the model has generated tokens based on the context, a tokenizer decoder can be used to convert these tokens back into a viewable representation.
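The generate-then-decode loop can be sketched as below. The "model" is a hypothetical stand-in (a fixed bigram score table) rather than a Transformer, the end-of-sequence ID is invented, and greedy decoding is used only for simplicity; this article does not describe VideoPoet's actual sampling strategy.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a trained LM: maps the last token to next-token scores.
BIGRAM_SCORES: Dict[int, Dict[int, float]] = {
    0: {1: 0.9, 2: 0.1},
    1: {2: 0.7, 3: 0.3},
    2: {3: 0.8, 0: 0.2},
    3: {1: 0.6, 99: 0.4},
}
EOS = 99  # hypothetical end-of-sequence token

def next_token_scores(context: List[int]) -> Dict[int, float]:
    """Score candidate next tokens given the context (here only the last token matters)."""
    return BIGRAM_SCORES[context[-1]]

def generate(
    prompt: List[int],
    max_new: int,
    score_fn: Callable[[List[int]], Dict[int, float]] = next_token_scores,
) -> List[int]:
    """Greedy autoregressive decoding: append the highest-scoring token one step at a time."""
    seq = list(prompt)
    for _ in range(max_new):
        scores = score_fn(seq)
        tok = max(scores, key=scores.get)
        if tok == EOS:
            break
        seq.append(tok)
    return seq[len(prompt):]  # only the newly generated tokens

new_tokens = generate([0], max_new=5)  # [1, 2, 3, 1, 2]
```

Each step conditions on everything generated so far. The resulting IDs would then go to the matching tokenizer decoder, as the paragraph above describes.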

VideoPoet task design: Different modalities are converted to and from tokens through tokenizer encoders and decoders. There are boundary tokens around each modality, and task tokens indicate the type of task to be performed.
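The task design in the caption (a task token, plus boundary tokens around each modality) can be sketched as a prompt builder. The token spellings such as `<task:...>`, `<bo_text>` and `<eo_text>` are hypothetical; a real system would map such special tokens to reserved integer IDs in the shared vocabulary.

```python
from typing import List, Sequence, Tuple, Union

Token = Union[str, int]

def build_prompt(
    task: str,
    segments: Sequence[Tuple[str, List[int]]],
    output_modality: str,
) -> List[Token]:
    """Lay out a conditioning sequence: a task token, then each input modality's
    tokens wrapped in hypothetical begin/end boundary tokens, then an opening
    boundary for the output modality where generation would start."""
    seq: List[Token] = [f"<task:{task}>"]
    for modality, tokens in segments:
        seq.append(f"<bo_{modality}>")  # begin-of-modality boundary
        seq.extend(tokens)
        seq.append(f"<eo_{modality}>")  # end-of-modality boundary
    seq.append(f"<bo_{output_modality}>")  # the model continues from here
    return seq

prompt = build_prompt("image_to_video", [("text", [12, 7]), ("image", [301, 302])], "video")
```

Swapping only the task token and segments (for example `"video_to_audio"` with a video segment and `"audio"` as output) reuses the same model for a different task, which is the point of folding all tasks into one LLM.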

Compared with previous video generation models, VideoPoet has three major advantages.

First, it can generate longer videos; second, it gives users finer control over the generated videos; and third, it can produce different camera movements based on text prompts.

In evaluations, VideoPoet also came out ahead, clearly outperforming many other video generation models.

Text fidelity: user preference ratings for text fidelity, i.e., the percentage of videos chosen as the preferred result for accurately following the prompt.

Motion interestingness: user preference ratings for motion, i.e., the percentage of videos chosen as the preferred result for producing interesting motion.

Overall, on average 24-35% of raters judged VideoPoet's examples to follow prompts better than those of competing models, compared with only 8-11% for the other models.

Likewise, 41-54% of raters found VideoPoet's motion more interesting, versus only 11-21% for the other models.

Looking ahead, Google researchers said they plan to extend the VideoPoet framework toward "any-to-any" generation, such as text-to-audio, audio-to-video, and video captioning.