At its DevDay event in November last year, OpenAI launched GPT-4 Turbo, which supports a context window of up to 128,000 tokens. Its input token price is one-third that of GPT-4, and its output token price is half.

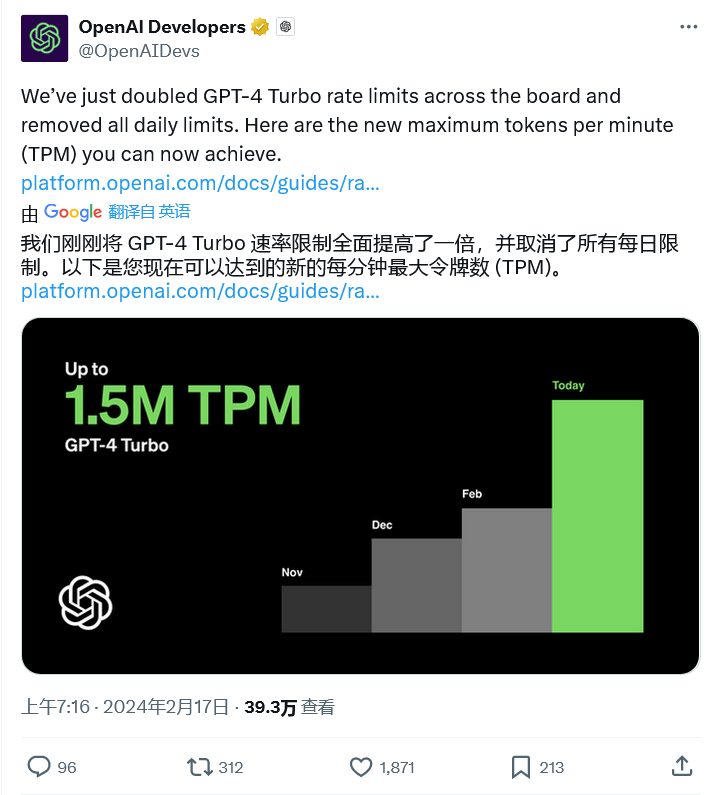

OpenAI recently announced that the GPT-4 Turbo rate limits have doubled. Up to 1.5 million tokens can now be processed per minute (TPM), and the daily limit has been removed entirely.

OpenAI limits the rate at which companies can access the API for three reasons: to prevent abuse, to ensure fairness, and to manage infrastructure load. By capping the number of requests allowed in a given time window, the limits stop malicious overloading of the API, keep access fair for all users, and maintain smooth performance during periods of high demand.

OpenAI measures rate limits in five ways: RPM (requests per minute), RPD (requests per day), TPM (tokens per minute), TPD (tokens per day), and IPM (images per minute).
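To make the interaction between RPM and TPM concrete, here is a minimal client-side sketch in Python. It is an illustration only: the class name `MinuteWindowLimiter`, its parameters, and the sliding-window approach are assumptions made for this example, not OpenAI's server-side algorithm, and the budget numbers are deliberately tiny. A request is admitted only if it fits under both the request budget and the token budget for the last 60 seconds.

```python
from collections import deque


class MinuteWindowLimiter:
    """Hypothetical client-side tracker for RPM and TPM budgets."""

    def __init__(self, max_rpm, max_tpm, window_seconds=60.0):
        self.max_rpm = max_rpm
        self.max_tpm = max_tpm
        self.window_seconds = window_seconds
        self._events = deque()      # (timestamp, tokens) per admitted request
        self._tokens_in_window = 0  # running token total inside the window

    def _evict_expired(self, now):
        # Drop requests that have aged out of the sliding window.
        while self._events and now - self._events[0][0] >= self.window_seconds:
            _, tokens = self._events.popleft()
            self._tokens_in_window -= tokens

    def try_acquire(self, tokens, now):
        """Admit the request and return True only if both budgets allow it."""
        self._evict_expired(now)
        if len(self._events) + 1 > self.max_rpm:
            return False  # would exceed requests per minute
        if self._tokens_in_window + tokens > self.max_tpm:
            return False  # would exceed tokens per minute
        self._events.append((now, tokens))
        self._tokens_in_window += tokens
        return True


# Assumed toy budgets: 3 requests and 1,000 tokens per minute.
limiter = MinuteWindowLimiter(max_rpm=3, max_tpm=1000)
print(limiter.try_acquire(400, now=0.0))   # True
print(limiter.try_acquire(400, now=1.0))   # True
print(limiter.try_acquire(400, now=2.0))   # False (token budget exceeded)
print(limiter.try_acquire(100, now=3.0))   # True
print(limiter.try_acquire(10, now=4.0))    # False (request budget exceeded)
print(limiter.try_acquire(10, now=60.0))   # True (first request has expired)
```

Timestamps are passed in explicitly so the behavior is easy to follow; in real use one would pass `time.monotonic()` and wait or retry when `try_acquire` returns `False`. Whichever limit is reached first blocks the request, which is why a few very large prompts can exhaust TPM long before RPM is reached.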

It is important to note that rate limits are enforced at the organization level, not per individual user. Rate limits vary based on the model used, and there are also "usage limits" on the organization's total monthly spend on the API.