In-depth understanding of Sora

Before we discuss how Sora processes diverse visual data, let's first imagine a scene from everyday life: you are flipping through an album of world attractions. It contains photos of scenery from different countries and in different styles: some are wide sea views, some are narrow alleys, and some are brightly lit city views at night. Although these photos differ in content and style, you can easily identify the place and the mood of each one, because your brain can unify these different kinds of visual information.

Now, let's compare this process to how Sora handles diverse visual data. The challenge Sora faces is like having to process and understand millions of images and videos from all over the world, captured by different devices. These visual data vary in resolution, aspect ratio, color depth, and so on. In order for Sora to understand and generate such rich visual content the way the human brain does, OpenAI developed a set of methods to transform these different types of visual data into a unified representation.

Machines in ancient ruins

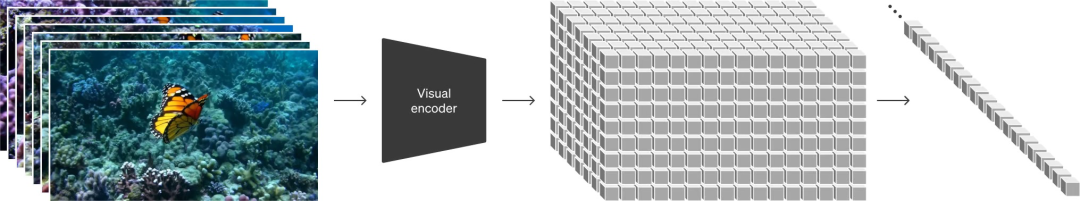

First, Sora compresses the input image or video into a lower-dimensional representation using a component called the "video compression network", a process similar to "standardizing" photos of different sizes and resolutions so that they are easier to process and store. This does not mean discarding what is unique about the original data. It means converting the data into a format that is easier for Sora to understand and operate on.
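To make the idea of a "lower-dimensional representation" concrete, here is a minimal sketch that only shows how the array shape shrinks. It uses plain average pooling as a stand-in for the learned video compression network; the function name `compress_video` and both downsampling factors are assumptions chosen for illustration, not details of Sora.

```python
import numpy as np

def compress_video(video, t_factor=2, s_factor=4):
    """Average-pool a (T, H, W, C) video over time and space.

    A stand-in for a learned encoder: it only shows how the shape shrinks.
    """
    T, H, W, C = video.shape
    # Trim so every dimension divides evenly by its pooling factor.
    T, H, W = T - T % t_factor, H - H % s_factor, W - W % s_factor
    v = video[:T, :H, :W]
    v = v.reshape(T // t_factor, t_factor,
                  H // s_factor, s_factor,
                  W // s_factor, s_factor, C)
    # Average over the three "within-block" axes.
    return v.mean(axis=(1, 3, 5))

rng = np.random.default_rng(0)
wide = rng.random((16, 64, 128, 3))   # a short widescreen clip
tall = rng.random((8, 128, 64, 3))    # a shorter vertical clip
wide_latent = compress_video(wide)
tall_latent = compress_video(tall)
print(wide_latent.shape, tall_latent.shape)
```

Both clips keep their own length and aspect ratio after compression; only the amount of data per clip is reduced.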

Next, Sora further decomposes these compressed data into so-called "space-time patches", which can be thought of as basic building blocks of visual content, just like each photo in our previous album can be decomposed into small fragments containing unique landscapes, colors, and textures. In this way, regardless of the length, resolution, or style of the original video, Sora can process them into a consistent format.
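The "consistent format" can be sketched with a few lines of array reshaping. In this hedged example, `patchify` and the patch sizes `pt`, `ph`, `pw` are hypothetical names and values; the point is only that inputs of different length and shape become sequences of tokens with the same size per token.

```python
import numpy as np

def patchify(latent, pt=2, ph=4, pw=4):
    """Split a (T, H, W, C) array into flattened spacetime patches.

    Returns an array of shape (num_patches, pt * ph * pw * C).
    Assumes T, H, W are divisible by pt, ph, pw.
    """
    T, H, W, C = latent.shape
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Bring the three patch-grid axes to the front, patch contents to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * C)

a = patchify(np.zeros((8, 16, 32, 3)))   # longer, wide input
b = patchify(np.zeros((4, 32, 16, 3)))   # shorter, tall input
print(a.shape, b.shape)
```

The two inputs yield a different number of patches, but every patch has the same number of values, so one model can consume both.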

In this way, Sora is able to unify visual data from different sources and styles into an actionable internal representation while preserving the richness of the original visual information. It’s like looking at an album of world landmarks, where despite the diversity of the photos, you can still understand and appreciate them in the same way.

Butterfly in the underwater ruins (what the hell!)

This ability to process diverse visual data allows Sora to not only understand the intent behind a text prompt like "cat sitting on the windowsill," but also use its internal representation to combine different types of visual information to generate a video or picture that matches the text prompt. It's like finding those fragments from the world's visual data that can piece together the "cat sitting on the windowsill" scene you imagined, and combining them to create a brand new visual work.

Text-conditioned diffusion model

Following the concept of space-time patches, let's explore how Sora generates content based on text prompts. The core of this process is a "text-conditioned diffusion model". To understand the principle, we can use an analogy from daily life: imagine that you have a sketchbook in your hand. At the beginning, the page holds only random scribbles that seem meaningless. But if you follow a specified theme, such as "garden", and gradually revise and refine these scribbles, the disordered lines will eventually become a beautiful picture of a garden. In this process, your "specified theme" is like a text prompt, and the gradual refinement of the sketch is similar to the way a diffusion model works.

In Sora's case, the process starts with a video of the same length as the target video, but filled with completely random noise. You can think of this noisy video as the meaningless scribbles in the sketchbook. Then, Sora starts to "alter" the video based on the given text prompt (such as "a cat sits on the windowsill watching the sunset"). In this process, Sora uses the knowledge learned from a large amount of video and image data to decide how to gradually remove noise and transform the noisy video into content that matches the text description.

This "alteration" process is not completed in one pass. It proceeds through hundreds of gradual steps, each of which brings the video closer to the final goal. A key advantage of this method is its flexibility and creativity: by starting from a different initial noise state or slightly adjusting the denoising steps, the same text prompt can produce visually different videos that all remain consistent with the prompt. This is like multiple painters creating paintings in different styles on the same theme.
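The step-by-step refinement can be imitated with a toy loop. This is a deliberately simplified sketch, not Sora's sampler: a real diffusion model uses a trained network to predict what to remove at each step, whereas here a hypothetical helper `prompt_to_target` simply hands us a fixed array standing in for "what the prompt describes". The step count, step size, and jitter are illustrative assumptions.

```python
import numpy as np

def prompt_to_target(prompt, shape):
    """Hypothetical stand-in for 'what the prompt describes': a fixed array
    derived deterministically from the prompt text."""
    seed = sum(prompt.encode("utf-8"))
    return np.random.default_rng(seed).standard_normal(shape)

def toy_denoise(prompt, seed, shape=(4, 8, 8), steps=100,
                step_size=0.05, jitter=0.02):
    """Start from pure noise and move a little toward the target each step."""
    rng = np.random.default_rng(seed)
    target = prompt_to_target(prompt, shape)
    x = rng.standard_normal(shape)                    # the "noisy video"
    for _ in range(steps):
        x = x + step_size * (target - x)              # small correction
        x = x + jitter * rng.standard_normal(shape)   # leftover randomness
    return x, target

prompt = "a cat sits on the windowsill watching the sunset"
sample_a, target = toy_denoise(prompt, seed=1)
sample_b, _ = toy_denoise(prompt, seed=2)

print("distance of sample A from target:", np.abs(sample_a - target).mean())
print("samples identical:", np.allclose(sample_a, sample_b))
```

Both samples end up close to what the prompt asks for, yet they are not identical, because each run starts from different noise.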

With this text-conditioned diffusion model, Sora can generate highly creative videos and pictures while keeping the generated content consistent with the user's text prompt. Whether simulating real scenes or creating a fantasy world, Sora can "alter" noise into striking visual works based on text prompts.

The text-conditioned diffusion model gives Sora strong understanding and creative ability, allowing it to cross the barrier between language and vision and turn abstract text descriptions into concrete visual content. This process not only demonstrates the progress of AI in understanding natural language, but also opens up new possibilities in video content creation and visual art.

Following this section, we will further explore the Sora video generation process, especially the role and importance of the video compression network and spatial-temporal latent patches in this process.

Spacetime Patches

Before we dive into the three key steps of how Sora generates videos, let’s first focus on exploring the concept of spacetime patches, which is crucial to understanding how Sora processes complex visual content.

A spacetime patch can be understood simply as the result of breaking video or image content into a series of small blocks, or "patches", each of which contains part of the spatiotemporal information. This approach is inspired by techniques for processing static images, where the image is divided into small blocks for more efficient processing. In the context of video processing, the concept is extended to the time dimension, covering not only space (i.e. regions of the image) but also time (i.e. how these regions change over time).

To understand how space-time patches work, we can borrow a simple metaphor from everyday life: Imagine that you are watching an animated movie. If we cut this movie into static frames, and each frame is further cut into smaller regions (i.e., "patches"), then each small region will contain information about a part of the picture. Over time, the information in these small regions will change as objects move or scenes change, adding dynamic information in the time dimension. In Sora, such "space-time patches" allow the model to process each small fragment of the video content in more detail, while taking into account their changes over time.
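The animated-movie metaphor can be tried on a tiny synthetic clip. In this sketch (all sizes are made up for illustration), a bright square moves across a dark frame, each frame is cut into 4x4 regions, and we measure how much each region changes over time.

```python
import numpy as np

# A tiny grayscale "movie": a bright 4x4 square moving right across a dark frame.
T, H, W = 4, 12, 16
video = np.zeros((T, H, W))
for t in range(T):
    video[t, 2:6, 4 * t : 4 * t + 4] = 1.0

# Cut every frame into 4x4 spatial regions: shape (T, rows, cols, 4, 4).
p = 4
patches = video.reshape(T, H // p, p, W // p, p).transpose(0, 1, 3, 2, 4)

# Mean brightness of each region at each time step: shape (T, rows, cols).
brightness = patches.mean(axis=(3, 4))

# Total frame-to-frame change at each region location.
change = np.abs(np.diff(brightness, axis=0)).sum(axis=0)
print(change)
```

Regions the square passes through show a nonzero change, while the bottom row of regions, which the square never touches, stays at zero. This time-varying information is what a spacetime patch carries in addition to the picture content.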

Specifically, in Sora's processing of visual content, the spatial-temporal patches are produced from the output of a video compression network. This network is responsible for compressing the raw video data into a lower-dimensional representation, which forms a dense grid made up of many small blocks. These small blocks are what we call "patches", and each patch carries the spatial and temporal information of a part of the video.

Once these spatial-temporal patches are generated, Sora can start their transformation process. Through the pre-trained transformer model, Sora is able to identify the content of each patch and modify it accordingly based on the given text prompt. For example, if the text prompt is "dog running in the snow", Sora will find patches related to "snow" and "running dog" and adjust them accordingly to generate video content that matches the text prompt.

This spatial-temporal patch-based processing has several significant advantages. First, it allows Sora to operate on video content at a very fine level because it can process each small piece of information in the video independently. Second, this approach greatly improves the flexibility of processing videos, allowing Sora to generate high-quality videos with complex dynamics, which is a huge challenge for traditional video generation technology. In addition, by effectively managing and transforming these patches, Sora is able to create rich and diverse visual effects while ensuring the coherence of video content to meet the various needs of users.

As we further explore Sora's video generation process, we can see that spatial-temporal patches play an extremely important role in this process. They are not only the cornerstone of Sora's processing and understanding of complex visual content, but also one of the key factors that enable Sora to efficiently generate high-quality videos. Next, we will take a deeper look at the video compression network and its relationship with spatial-temporal latent patches, as well as their role and importance in the video generation process.

Video generation process

Following the introduction to spacetime patches above, we will now explore in detail the three key steps in Sora's video generation process: the video compression network, spatial-temporal latent patch extraction, and the Transformer model for video generation. Through a series of metaphors, we will try to make these concepts easier to understand.

Step 1: Video Compression Network

Imagine you are cleaning and reorganizing a messy room. Your goal is to fit everything into as few boxes as possible while making sure you can find what you need quickly later. In the process, you might put small items into small boxes, and then put those small boxes into larger boxes. This way, you store the same amount of items in less, more organized space. Video compression networks work on this principle. They "clean and organize" the content of a video into a more compact and efficient form (i.e., reduce the dimensionality). This allows Sora to process it more efficiently while still retaining enough information to reconstruct the original video.
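The trade-off between "fewer boxes" and "still being able to reconstruct the original" can be shown with a small linear example. The sketch below uses PCA (computed with a singular value decomposition) as a simple substitute for a learned compression network; the function names and the synthetic data are assumptions made for this illustration only.

```python
import numpy as np

def fit_linear_codec(frames, k):
    """Fit a k-dimensional linear code (PCA via SVD) to flattened frames."""
    X = frames.reshape(len(frames), -1)
    mean = X.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]

def encode(frames, mean, basis):
    X = frames.reshape(len(frames), -1)
    return (X - mean) @ basis.T               # shape (num_frames, k)

def decode(codes, mean, basis, frame_shape):
    return (codes @ basis + mean).reshape(-1, *frame_shape)

rng = np.random.default_rng(0)
# Synthetic "video": 32 frames of 8x8, each a mix of 3 patterns plus slight noise.
patterns = rng.standard_normal((3, 8, 8))
weights = rng.standard_normal((32, 3))
frames = np.tensordot(weights, patterns, axes=1)
frames = frames + 0.01 * rng.standard_normal(frames.shape)

errors = {}
for k in (1, 3):
    mean, basis = fit_linear_codec(frames, k)
    codes = encode(frames, mean, basis)
    recon = decode(codes, mean, basis, frames.shape[1:])
    errors[k] = float(np.mean((frames - recon) ** 2))

print("values per frame: 64 ->", codes.shape[1])
print("reconstruction error by code size:", errors)
```

With three numbers per frame instead of 64, the frames can be rebuilt almost exactly, because the data really has only three underlying patterns. With one number per frame, too much has been thrown away and the error is much larger.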

Step 2: Spatial-temporal latent patch extraction

Next, if you want to keep a detailed record of what is in each box, you might write a list for each box. That way, when you need to retrieve an item, you can quickly locate which box it is in by simply looking at the corresponding list. In Sora, a similar "list" is a spatial-temporal latent patch. After processing through the video compression network, Sora breaks the video into small blocks that contain a small portion of the spatial and temporal information in the video, like a detailed "list" of the video content. This allows Sora to process each part of the video in a targeted manner in subsequent steps.
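The "list for each box" idea corresponds to remembering where every patch came from. The following sketch, with hypothetical helper names `patchify` and `unpatchify`, records a grid position for each patch and shows that patches plus positions are enough to put the compressed video back together.

```python
import numpy as np

def patchify(latent, pt, ph, pw):
    """Return flattened patches, their (t, y, x) grid positions, and the grid size."""
    T, H, W, C = latent.shape
    grid = (T // pt, H // ph, W // pw)
    x = latent.reshape(grid[0], pt, grid[1], ph, grid[2], pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6).reshape(-1, pt * ph * pw * C)
    # positions[i] is the grid coordinate of patch i (the "list" entry).
    positions = np.stack(np.unravel_index(np.arange(len(x)), grid), axis=1)
    return x, positions, grid

def unpatchify(patches, grid, pt, ph, pw, C):
    """Inverse of patchify: place every patch back at its grid position."""
    x = patches.reshape(*grid, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)
    return x.reshape(grid[0] * pt, grid[1] * ph, grid[2] * pw, C)

rng = np.random.default_rng(0)
latent = rng.random((4, 8, 8, 2))
patches, positions, grid = patchify(latent, pt=2, ph=4, pw=4)
restored = unpatchify(patches, grid, pt=2, ph=4, pw=4, C=2)

print(patches.shape, positions[5].tolist())
print("round trip exact:", np.array_equal(restored, latent))
```

Because nothing is lost in this step, the model is free to work on patches individually and still assemble a complete video at the end.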

Step 3: Transformer model for video generation

Finally, imagine playing a jigsaw puzzle with a friend, but the goal of the game is to put a picture together based on a story. You start by breaking the story into sections, each of you responsible for a section. Then, you choose or draw a part of the puzzle based on the section of the story you are responsible for. Finally, everyone combines their own puzzle parts to form a complete picture that tells the whole story. In Sora's video generation process, the Transformer model plays a similar role. It receives spatial-temporal latent patches (i.e., "puzzle pieces") of video content and text prompts (i.e., "story"), and then decides how to transform or combine these fragments to generate a final video that tells the story in the text prompt.
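One common way for a Transformer to let "puzzle pieces" consult the "story" is attention between patch tokens and text tokens. The sketch below shows a single, untrained cross-attention step in NumPy. Whether Sora injects the prompt in exactly this way is not stated in this article, so treat the mechanism, the dimensions, and the random weight matrices as assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(patch_tokens, text_tokens, Wq, Wk, Wv):
    """Each patch token gathers information from the text tokens."""
    q = patch_tokens @ Wq                       # (num_patches, d)
    k = text_tokens @ Wk                        # (num_words, d)
    v = text_tokens @ Wv                        # (num_words, d)
    # How strongly each patch looks at each prompt word.
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    # Residual update: the patch keeps its content and adds text information.
    return patch_tokens + weights @ v, weights

rng = np.random.default_rng(0)
d = 16
patch_tokens = rng.standard_normal((10, d))    # 10 noisy spacetime patches
text_tokens = rng.standard_normal((4, d))      # 4 embedded prompt words
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))

updated, weights = cross_attention(patch_tokens, text_tokens, Wq, Wk, Wv)
print(updated.shape, weights.shape)
```

In a trained model the three weight matrices are learned, and many such layers are stacked, so that each patch is adjusted according to the parts of the prompt that matter for it.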

Through the collaborative work of these three key steps, Sora is able to transform text prompts into video content with rich details and dynamic effects. Not only that, this process also greatly enhances the flexibility and creativity of video content generation, making Sora a powerful video creation tool.

Technical features and innovations

Next, we will take a closer look at Sora’s technical features and innovations to better understand its leading position in the field of video generation.

Support various video formats

First of all, Sora supports a variety of video formats. For example, Sora can handle widescreen 1920x1080 videos, vertical 1080x1920 videos, or videos of any ratio in between. This capability allows Sora to generate content directly in the native aspect ratio of different devices, adapting to changing viewing needs. In addition, Sora can quickly prototype content at a lower resolution and then generate it at full resolution, all with the same model. This feature not only increases the flexibility of content creation, but also greatly simplifies the process of video content generation.
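A bit of arithmetic shows why a patch-based model can treat these formats uniformly and why a low-resolution draft is cheap. The `downsample` and `patch` values below are hypothetical, picked only to make the numbers concrete.

```python
import math

def tokens_per_frame(width, height, downsample=8, patch=2):
    """Number of patch tokens for one frame.

    downsample and patch are hypothetical values chosen for illustration.
    """
    cell = downsample * patch      # pixels covered by one side of a token
    return math.ceil(width / cell) * math.ceil(height / cell)

formats = {
    "widescreen": (1920, 1080),
    "vertical": (1080, 1920),
    "square": (1080, 1080),
    "low-res draft": (480, 270),
}
for name, (w, h) in formats.items():
    print(f"{name:14s} {w}x{h}: {tokens_per_frame(w, h)} tokens per frame")
```

Under these assumptions, widescreen and vertical frames produce the same number of tokens, just arranged differently, while the low-resolution draft needs only a small fraction of them.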

Flat turtle

Square Turtle

Long turtle

Improved video composition and framing

Furthermore, Sora also shows significant improvements in video composition and framing. By training on videos in their native aspect ratios, Sora can better grasp composition and framing, and keeps the subject fully in view more reliably than models that crop all training videos into squares. For example, for videos in widescreen format, Sora can keep the main content within the frame, rather than showing only part of the subject as some models do. This improves both the visual quality of the generated video and the viewing experience.
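To see what square cropping costs, consider a simple calculation. The helper names and the two subject boxes below are made up for this example.

```python
def center_square_crop(width, height):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side

def fully_visible(box, crop):
    """True if the subject box (left, top, right, bottom) lies inside the crop."""
    return (box[0] >= crop[0] and box[1] >= crop[1]
            and box[2] <= crop[2] and box[3] <= crop[3])

crop = center_square_crop(1920, 1080)
kept_fraction = (crop[2] - crop[0]) * (crop[3] - crop[1]) / (1920 * 1080)

subject_centered = (800, 300, 1100, 900)
subject_off_center = (100, 300, 600, 900)   # e.g. a car entering from the left

print("crop box:", crop)
print("fraction of the frame kept:", kept_fraction)
print("centered subject visible:", fully_visible(subject_centered, crop))
print("off-center subject visible:", fully_visible(subject_off_center, crop))
```

A centered square keeps only a little over half of a 1920x1080 frame, so any subject near the left or right edge is cut off. A model trained mostly on such crops sees many partially visible subjects, which is one plausible reason it would reproduce them.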

Running car

Language Understanding and Video Generation

Another important feature of Sora is its ability to deeply understand text. Using advanced text parsing technology, Sora can accurately understand the user's text instructions and generate characters and vivid scenes with rich details and emotions based on these instructions. This ability makes the transition from short text prompts to complex video content more natural and smooth. Whether it is a complex action scene or delicate emotional expression, Sora can accurately capture and present it.

Delicious burger

Multimodal input processing

Finally, Sora's multimodal input processing capabilities cannot be ignored. In addition to text prompts, Sora can also accept static images or existing videos as input to perform operations such as content extension, filling missing frames, or style conversion. This capability greatly expands the scope of Sora's application. It can not only be used to create video content from scratch, but also for secondary creation of existing content, providing users with more creative space.
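One simple way to think about content extension and filling missing frames is as generation with some frames already fixed. The sketch below illustrates only that bookkeeping: a mask marks the frames that were supplied, and those frames are written back into the sample so the generator is only responsible for the rest. How Sora actually conditions on images or videos is not described here; this replacement scheme and the name `apply_known_frames` are assumptions.

```python
import numpy as np

def apply_known_frames(x, known_frames, known_mask):
    """Overwrite the frames we were given; leave the rest to the generator."""
    mask = known_mask[:, None, None]     # broadcast over height and width
    return np.where(mask, known_frames, x)

num_frames, H, W = 8, 4, 4
rng = np.random.default_rng(0)
given = rng.random((num_frames, H, W))   # frames from an existing clip

# Extension: the first 3 frames come from an existing clip.
extend_mask = np.arange(num_frames) < 3

# Filling missing frames: only the first and last frame are given.
infill_mask = np.zeros(num_frames, dtype=bool)
infill_mask[[0, -1]] = True

x = rng.standard_normal((num_frames, H, W))   # current noisy sample
x_ext = apply_known_frames(x, given, extend_mask)
x_fill = apply_known_frames(x, given, infill_mask)

print("frames fixed for extension:", np.flatnonzero(extend_mask).tolist())
print("frames fixed for infilling:", np.flatnonzero(infill_mask).tolist())
```

A static image as input is the special case where only one frame is fixed.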

First input

Second input

1+2=3, video synthesis, start!

Through the above four technical features and innovations, Sora has established its leadership in the field of video generation. Whether in video format support, video composition improvement, or language understanding and multimodal input processing, Sora has demonstrated its strong capabilities and flexibility, making it a powerful tool for creative professionals in different fields.

Sora can not only generate videos with dynamic camera motion, but also simulate simple world interactions. For example, it can generate a video of a person walking, showing 3D consistency and long-term consistency.

Simulation capabilities

Sora's simulation capabilities demonstrate unique advantages in the field of AI video generation. The following are its key capabilities in simulating real-world dynamics and interactions:

3D consistency

Sora is able to generate videos that exhibit dynamic camera motion, which means that it can not only capture the action in a flat image, but also present the movement of objects and people in 3D. Imagine that when the camera rotates around a dancing figure, you can see the person's movements from different angles, while each movement of the character and the background can be kept in the correct spatial position. This ability shows the depth of Sora's understanding of three-dimensional space, making the generated videos more visually realistic and vivid.

The rotating mountain

Long-term consistency

When generating long videos, it is challenging to keep the characters, objects, and scenes in the video consistent. Sora demonstrates excellent ability in this regard, being able to accurately maintain the appearance and attributes of characters across multiple shots of a video. This includes not only the appearance of the characters, but also their behavior and interaction with the environment. For example, if a character in a video starts out wearing red, the character's clothing will remain consistent even in different parts of the video. Similarly, if a video depicts a character walking from one table to another, the character's relative position and interaction with the tables will remain accurate even if the perspective changes, demonstrating Sora's strong ability to maintain long-term consistency.

The dog that is always looking around

World interaction simulation

Going a step further, Sora can simulate simple interactions between characters and the environment, such as the dust flying underfoot when a person walks, or the color changes on the canvas when painting. These details are small, but they greatly enhance the realism of the video content. For example, when a character is painting in a video, Sora can not only generate the action itself, but also ensure that each stroke leaves a mark on the canvas, which accumulates over time, showing Sora's delicate handling of simulating real-world interactions.

Flowers I can't draw

Through these technical features, Sora is able to simulate dynamic visual effects when generating video content, and also capture deeper interaction patterns that are consistent with our daily life experience. Although there are still challenges in dealing with complex physical interactions and long-term consistency, Sora has demonstrated significant capabilities in simulating simple world interactions, opening up new paths for the development of future AI technology, especially in the field of understanding and simulating real-world dynamics.

Discussion and limitations

Although Sora, OpenAI's latest video generation AI model, has made significant progress in simulating real-world dynamics and interactions, it still faces some limitations and challenges. Here are Sora's current main limitations and ways to overcome these challenges.

Limitations of Physical World Simulations

Although Sora can generate dynamic scenes with a certain degree of complexity, it is still limited in how accurately it simulates the physical world. For example, Sora sometimes cannot accurately reproduce complex physical interactions, such as the delicate process of glass breaking, or scenes involving precise mechanical movements. A likely reason is that Sora's training data lacks enough examples for the model to learn these complex physical phenomena.

Broken cups (this train of thought...)

Strategies to overcome challenges:

- Expanding the training dataset: Integrate more high-quality video data containing complex physical interactions to enrich the samples for Sora learning.

- Physics engine integration: Integrating a physics engine into Sora’s framework allows the model to refer to physical rules when generating videos, improving the realism of physical interactions.

Difficulty in Generating Long Videos

Another challenge Sora faces when generating long videos is how to maintain the long-term consistency of the video content. For longer videos, it becomes more difficult to maintain the continuity and logical consistency of characters, objects, and scenes. Sora may sometimes produce inconsistencies in different parts of the video, for example, a character's clothing suddenly changes, or the position of an object in the scene is inconsistent.

Strategies to overcome challenges:

- Enhanced temporal continuity learning: By improving the training algorithm, the model's learning ability for time continuity and logical consistency is enhanced.

- Serialization: During the video generation process, a serialization processing method is adopted to generate the video frame by frame in chronological order to ensure that each frame is consistent with the previous and next frames.
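The serialization idea proposed above can be sketched as a loop in which each new frame is produced from the previous one and is only allowed to differ from it by a bounded amount. Everything here is a toy: random numbers stand in for a model's output, and `max_step` is an arbitrary illustrative limit.

```python
import numpy as np

def generate_sequentially(first_frame, num_frames, max_step=0.05, seed=0):
    """Generate frames in order; each one is a bounded change of the previous."""
    rng = np.random.default_rng(seed)
    frames = [first_frame]
    for _ in range(num_frames - 1):
        # Stand-in for what a model would propose for the next frame.
        proposal = rng.standard_normal(first_frame.shape)
        # Limit how far the new frame may move away from the previous one.
        delta = np.clip(proposal, -max_step, max_step)
        frames.append(frames[-1] + delta)
    return np.stack(frames)

video = generate_sequentially(np.zeros((4, 4)), num_frames=16)
largest_jump = np.abs(np.diff(video, axis=0)).max()
total_drift = np.abs(video[-1] - video[0]).max()

print("largest change between neighbouring frames:", largest_jump)
print("largest change between first and last frame:", total_drift)
```

The toy also hints at a limit of this strategy: neighbouring frames stay close, but small changes can still add up over many frames, so frame-by-frame generation alone does not guarantee consistency across a long video.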

Accurately understanding complex text instructions

Although Sora performs well in understanding simple text instructions and generating corresponding videos, the model sometimes has difficulties with complex text instructions that contain multiple meanings or require precise depiction of specific events. This limits Sora's application in generating more complex creative content.

Strategies to overcome challenges:

- Improving language models: Improve the complexity and accuracy of Sora's built-in language understanding model, enabling it to better understand and analyze complex text instructions.

- Text preprocessing: Introducing advanced text preprocessing steps to decompose complex text instructions into multiple simple subtasks that are easy for the model to understand, generate them one by one, and finally integrate them into a complete video.
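As a minimal sketch of the text preprocessing idea, the function below splits a multi-event prompt into ordered sub-prompts using a couple of simple connectives. A practical system would need real language understanding; the function name `split_prompt` and the chosen connectives are assumptions for illustration.

```python
import re

def split_prompt(prompt):
    """Split a multi-event prompt into ordered sub-prompts.

    Splits on ", then", ", and then", and ";". This is a crude heuristic,
    not a real parser.
    """
    text = prompt.strip().rstrip(".")
    parts = re.split(r"\s*(?:,\s*(?:and\s+)?then\b|;)\s*", text)
    return [p.strip() for p in parts if p.strip()]

steps = split_prompt(
    "A dog runs across the snow, then stops at a frozen lake; "
    "it looks at its reflection."
)
for i, step in enumerate(steps, start=1):
    print(i, step)
```

Each sub-prompt could then be generated as its own segment, with the segments joined afterwards into one video.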

Training and Generation Efficiency

As a highly complex model, the time efficiency of Sora's training and video generation is a challenge that cannot be ignored. The generation of high-quality videos usually takes a long time, which limits the application of Sora in real-time or fast feedback scenarios.

Strategies to overcome challenges:

- Optimizing model structure: Optimize Sora's architecture to reduce unnecessary calculations and improve operating efficiency.

- Hardware Acceleration: Use more powerful computing resources and specialized hardware acceleration technology to shorten the time of video generation.

In general, although Sora's performance in video generation and simulating real-world interactions is already excellent, there are still many challenges. Through the implementation of the above strategies, we have reason to believe that in the future Sora will be able to overcome the current limitations while maintaining innovation and show more powerful and extensive application potential.