Meta Chief AI Scientist Yann LeCun introduced the JEPA (Joint Embedding Predictive Architecture) model architecture in 2022. The following year, an image prediction model called "I-JEPA" was developed based on the JEPA architecture, followed by a new video prediction model called "V-JEPA".

It is reported that the JEPA architecture and the I-JEPA/V-JEPA models focus on "predictive ability": Meta claims they can use abstraction to efficiently predict and generate the obscured parts of images and videos in a "human-understandable" way.

IT Home noticed that the researchers trained the I-JEPA/V-JEPA models on a series of specially masked videos. The model was required to fill in the missing content in an "abstract" way, so that it learns the scene while filling in the gaps and can go on to predict future events or actions, thereby achieving a deeper understanding of the world.
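To make the idea of "abstract" filling more concrete, here is a minimal toy sketch in Python. It is not Meta's implementation: the linear encoder, the `mean_predictor` helper, and the choice of an L1 distance are all assumptions made for illustration. The only point it shows is that the error is measured between embeddings of the hidden patches, not between raw pixels.

```python
def encode(patch, weights):
    """Toy linear encoder (an assumption): maps a patch to an embedding."""
    return [sum(w * x for w, x in zip(row, patch)) for row in weights]


def mean_predictor(context):
    """Hypothetical predictor: guesses the mean of the visible embeddings."""
    dim = len(context[0])
    return [sum(c[i] for c in context) / len(context) for i in range(dim)]


def masked_latent_loss(patches, mask, enc_weights, predictor=mean_predictor):
    """Average L1 distance between predicted and actual embeddings
    of the masked patches. mask[i] is True when patch i is hidden."""
    context = [encode(p, enc_weights) for p, m in zip(patches, mask) if not m]
    targets = [encode(p, enc_weights) for p, m in zip(patches, mask) if m]
    if not context or not targets:
        return 0.0
    # The predictor only sees embeddings of the visible patches.
    pred = predictor(context)
    per_target = [
        sum(abs(a - b) for a, b in zip(pred, t)) / len(t) for t in targets
    ]
    return sum(per_target) / len(per_target)
```

In this toy setup, a video whose hidden patches look like its visible ones yields a loss near zero, while a hidden patch that differs from the context yields a larger loss. A real system would replace both the encoder and the predictor with learned neural networks.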

▲ Image source: Meta official press release (the same below)

The researchers said this training method lets the model focus on the high-level concepts in a video rather than "getting bogged down in details that are not important for downstream tasks." They gave an example: "When humans watch a video containing trees, they don't particularly care about the movement of leaves." As a result, they claim, a model built on this kind of abstraction is more efficient than competing models in the industry.

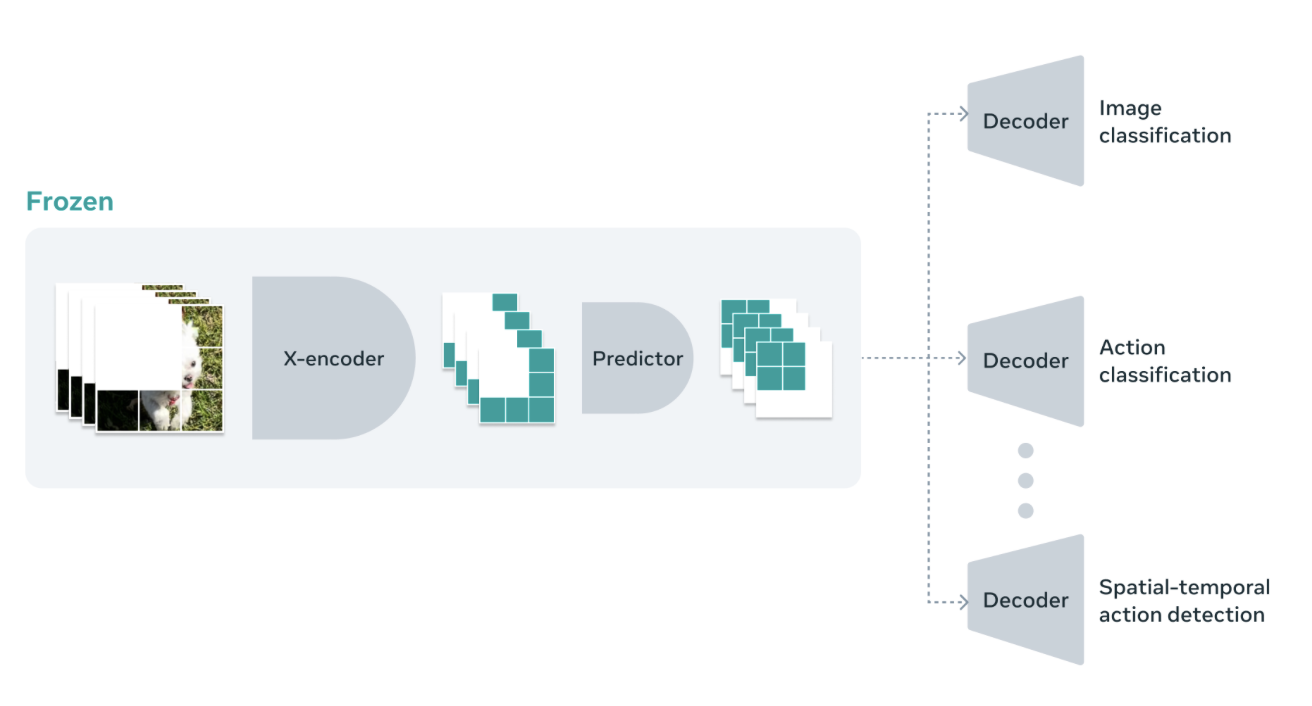

The researchers also mentioned that V-JEPA uses a design called "Frozen Evaluations", which means that "the core part of the model will not change after pre-training". Adapting the model to a new task therefore only requires adding a small specialized layer on top of it, which makes it more versatile.
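The frozen-evaluation idea can be illustrated with a small, self-contained Python sketch. Everything here is a stand-in chosen for illustration: `frozen_backbone` is a hypothetical fixed function playing the role of the pre-trained core, and the "small specialized layer" is assumed to be a simple logistic-regression head. Only the head's weights are updated during training.

```python
import math


def frozen_backbone(x):
    """Stand-in for a pre-trained encoder; it has no trainable state here."""
    return [x[0] + x[1], x[0] - x[1]]


def train_probe(samples, labels, epochs=200, lr=0.5):
    """Fit a small logistic-regression head on top of the frozen features."""
    # Features are computed once, because the backbone never changes.
    feats = [frozen_backbone(x) for x in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            g = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b


def predict(x, w, b):
    """Classify x using the frozen backbone plus the trained head."""
    f = frozen_backbone(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# Toy task (an assumption): label is 1 when the first value is larger.
samples = [(2.0, 1.0), (3.0, 0.0), (1.0, 2.0), (0.0, 3.0)]
labels = [1, 1, 0, 0]
w, b = train_probe(samples, labels)
```

A different task would reuse `frozen_backbone` unchanged and train a new head with `train_probe`, which mirrors the claim that only a small added layer has to be adapted.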