NVIDIA today released "Chat with RTX", a chatbot application for Windows PCs powered by TensorRT-LLM.

Chat with RTX is designed as a local system that users can run without Internet access. The application is supported on all GeForce RTX 30 and 40 series GPUs with at least 8 GB of video memory.

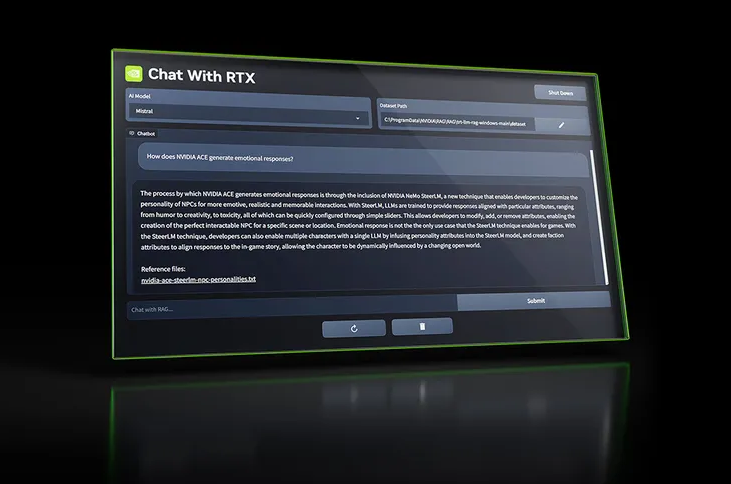

Chat with RTX supports a range of file formats, including text, PDF, DOC/DOCX, and XML. Simply point the app to the folder containing the files and it will load them into its library in seconds. Additionally, users can provide the URL of a YouTube playlist and the app will load transcriptions of the videos in the playlist, letting users query what the videos cover.

According to the official description, users can query Chat with RTX in the same way they use ChatGPT. However, the generated results are based solely on the dataset the user supplies, which seems to make the app better suited to tasks such as generating summaries and quickly searching documents.
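To make the "point it at a folder, then query only that data" idea concrete, here is a minimal Python sketch. It is not how Chat with RTX works internally: plain keyword counting stands in for the LLM, the function names (`load_folder`, `search`, `demo`) are hypothetical, and only plain-text formats are read, since PDF and DOC/DOCX would need dedicated parsers.

```python
import re
import tempfile
from collections import Counter
from pathlib import Path

# Assumption: only plain-text formats are handled in this sketch.
SUPPORTED = {".txt", ".xml"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def load_folder(folder):
    """Read every supported file under `folder` into {file name: word counts}."""
    library = {}
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in SUPPORTED:
            text = path.read_text(encoding="utf-8", errors="ignore")
            library[path.name] = Counter(tokenize(text))
    return library


def search(library, query, top_k=3):
    """Rank files by how often the query words occur in them."""
    words = tokenize(query)
    scores = {name: sum(counts[w] for w in words) for name, counts in library.items()}
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    # Files that never mention a query word are left out entirely.
    return [(name, score) for name, score in ranked if score > 0][:top_k]


def demo():
    """Build a throwaway folder with two files and query it."""
    with tempfile.TemporaryDirectory() as folder:
        Path(folder, "gpu.txt").write_text(
            "RTX GPU video memory and GPU drivers", encoding="utf-8"
        )
        Path(folder, "recipes.txt").write_text(
            "Flour, sugar and butter", encoding="utf-8"
        )
        return search(load_folder(folder), "GPU memory")


if __name__ == "__main__":
    print(demo())
```

The answer can only come from the files that were loaded, so a query about anything outside the folder returns nothing. That is the same restriction the article describes for Chat with RTX.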

Running on an RTX GPU that supports TensorRT-LLM means users work with all their data and projects locally rather than keeping them in the cloud, which saves time and provides more accurate results.

NVIDIA says that TensorRT-LLM v0.6.0, which boosts performance by 5x, will be available later this month. It will also support other LLMs such as Mistral 7B and Nemotron 3 8B.