Today I found a paper for you that offers guidance on writing prompts for large language models (backed by experimental data, with great results!)

The paper presents 26 guiding principles intended to simplify the process of formulating questions for large language models of different sizes, to examine their capabilities, and to improve users' understanding of how models of different sizes behave when given different prompts. The researchers ran extensive experiments on LLaMA-1/2 (7B, 13B, and 70B) and GPT-3.5/4 to validate the effectiveness of these principles for instruction and prompt design.

The paper notes that large language models such as ChatGPT demonstrate excellent capabilities across many domains and tasks, but that how to use them can be unclear to the average user when it comes to designing optimal instructions or prompts. The authors' goal is to demystify, for developers and ordinary users alike, the "mysterious black box" of asking questions of and interacting with LLMs, and to further improve the response quality of pre-trained LLMs simply by crafting better prompts. The research team proposes 26 principles for LLM prompts. Let's take a look at them!

Paper title:

Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4

Link to the paper:

https://arxiv.org/pdf/2312.16171.pdf

26 principles

1. When communicating with LLMs, there is no need for polite phrases such as "please", "if you don't mind", or "thank you"; just get straight to the point.
2. Integrate the intended audience into the prompt, e.g., "The audience is an expert in the field."
3. Break down complex tasks into a sequence of simpler prompts in an interactive dialog.
4. Use affirmative directives such as "do", and avoid negative language such as "don't".
5. When you need to clearly understand a topic, idea, or any piece of information, use prompts such as:
   - Explain [specific topic] in simple terms.
   - Explain it to me like I'm 11 years old.
   - Explain it to me as if I'm a beginner in [field].
   - Write the [essay/text/paragraph] using simple English, as if you were explaining it to a 5-year-old.
6. Add "I'm going to tip $xxx for a better solution!"
7. Implement example-driven prompting (use few-shot prompts).
8. When formatting the prompt, start with '###Instruction###', followed by '###Example###' or '###Question###' (if relevant), and then present the content. Use one or more line breaks to separate instructions, examples, questions, context, and input data.
9. Add the following phrases: "Your task is" and "You MUST".
10. Add the following phrase: "You will be penalized".
11. Use the phrase "Answer a question in a natural, human-like manner" in the prompt.
12. Use leading words such as "think step by step".
13. Add the following phrase to your prompt: "Ensure that your answer is unbiased and does not rely on stereotypes."
14. Let the model ask you questions until it has enough information to provide the desired output, e.g., "From now on, I would like you to ask me questions to...".
15. When you want to learn about a specific topic, idea, or piece of information and test your understanding, use the following phrase: "Teach me the [any theorem/topic/rule name] and include a test at the end, but don't give me the answers, and then tell me if I got the answer right when I respond."
16. Assign a role to the large language model.
17. Use delimiters.
18. Repeat a specific word or phrase multiple times in the prompt.
19. Combine chain-of-thought (CoT) with few-shot prompts.
20. Use output primers: end your prompt with the beginning of the expected response.
21. To write an essay, text, paragraph, article, or any other text that needs to be detailed: "Write a detailed [essay/text/paragraph] for me on [topic] in detail, adding all the necessary information."
22. To correct or change specific text without changing its style: "Try to revise every paragraph the user sends. You should only improve the user's grammar and vocabulary and make sure it sounds natural. You should not change the writing style, for example by making a formal paragraph informal."
23. When you have a complex coding prompt that may span different files: "From now on, whenever you generate code that spans more than one file, generate a [programming language] script that can be run to automatically create the specified files or make changes to existing files to insert the generated code. [your question]".
24. When you want to start or continue a text with specific words, phrases, or sentences, use the following prompt: "I'm providing you with the beginning [lyrics/story/paragraph/essay...]: [insert lyrics/words/sentence]. Finish it based on the words provided. Keep the flow consistent."
25. Clearly state the requirements the model must follow to produce content, in the form of keywords, rules, hints, or instructions.
26. To write any text, such as an essay or paragraph, that is intended to be similar to a provided sample, include the following instruction: "Please use the same language based on the provided paragraph [/title/text/essay/answer]."
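Several of these principles can be combined mechanically. The following is a minimal sketch, not code from the paper, that assembles a prompt using the '###Instruction###' / '###Example###' / '###Question###' markers, one few-shot example, the "Your task is" and "You MUST" phrases, the "think step by step" cue, and a prompt that ends with the beginning of the expected response. The helper names `section` and `build_prompt` and the sample questions are hypothetical, chosen only for illustration.

```python
def section(header, body):
    """Format one block as '###Header###' followed by its content."""
    return f"###{header}###\n{body.strip()}"


def build_prompt(instruction, examples, question, primer=""):
    """Assemble a structured prompt.

    instruction: what the model should do
    examples:    list of (question, answer) pairs used as few-shot examples
    question:    the actual question to answer
    primer:      optional beginning of the expected response
    """
    blocks = [section("Instruction", instruction)]
    for ex_question, ex_answer in examples:
        blocks.append(section("Example", f"Q: {ex_question}\nA: {ex_answer}"))
    blocks.append(section("Question", f"Q: {question}"))
    # Blank lines separate instruction, examples, and question.
    prompt = "\n\n".join(blocks)
    if primer:
        # End the prompt with the start of the answer we expect.
        prompt += "\nA: " + primer
    return prompt


prompt = build_prompt(
    instruction=(
        "Your task is to solve the arithmetic word problem. "
        "You MUST think step by step."
    ),
    examples=[
        (
            "Tom has 3 apples and buys 2 more. How many apples does he have?",
            "Tom starts with 3 apples. 3 + 2 = 5. The answer is 5.",
        )
    ],
    question="A box holds 4 rows of 6 eggs. How many eggs are in the box?",
    primer="Let's think step by step.",
)
print(prompt)
```

The resulting string can be sent to any chat or completion endpoint; whether a given combination actually helps depends on the model, so it is worth comparing against the plain question.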

Based on their distinct characteristics, the research team grouped the principles into five categories:

(1) Prompt structure and clarity, e.g., integrating the intended audience into the prompt ("The audience is an expert in the field");

(2) Specificity and information, e.g., adding the following phrase to your prompt: "Ensure that your answer is unbiased and does not rely on stereotypes.";

(3) User interaction and engagement, e.g., allowing the model to obtain precise details and requirements by asking you questions until it has enough information to provide the desired output: "From now on, I would like you to ask me questions to...";

(4) Content and language style, e.g., there is no need to be polite when communicating with an LLM, so skip phrases such as "please", "if you don't mind", "thank you", and "I'd like to", and get right to the point;

(5) Complex tasks and coding prompts, e.g., breaking down a complex task into a series of simpler prompts within an interactive dialog.
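To make the five categories concrete, here is a rough sketch with one small helper per category, each applying the example principle quoted above. All function names are hypothetical, and the politeness filter is a deliberately naive regular expression that covers only three phrases; none of this comes from the paper itself.

```python
import re

# Naive pattern for a few polite phrases; real text would need more care.
POLITE = re.compile(r"\b(please|thank you|if you don't mind)\b,?\s*", re.IGNORECASE)


def add_audience(prompt, audience):
    """(1) Prompt structure and clarity: name the intended audience."""
    return f"{prompt} The audience is {audience}."


def add_unbiased(prompt):
    """(2) Specificity and information: ask for an unbiased answer."""
    return f"{prompt} Ensure that your answer is unbiased and does not rely on stereotypes."


def ask_me_first(goal):
    """(3) User interaction and engagement: let the model ask questions."""
    return f"From now on, I would like you to ask me questions to {goal}."


def strip_politeness(prompt):
    """(4) Content and language style: drop polite filler."""
    return POLITE.sub("", prompt).strip()


def split_task(steps):
    """(5) Complex tasks: one simpler prompt per step, sent turn by turn."""
    return [f"Step {i}: {step}" for i, step in enumerate(steps, 1)]


base = strip_politeness("Please, explain how vaccines work.")
prompt = add_unbiased(add_audience(base, "an expert in the field"))
turns = split_task(["Outline the article.", "Write the introduction."])
print(prompt)
print(turns)
```

Keeping each principle as a separate function makes it easy to switch one on or off and compare the responses.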

Experiments

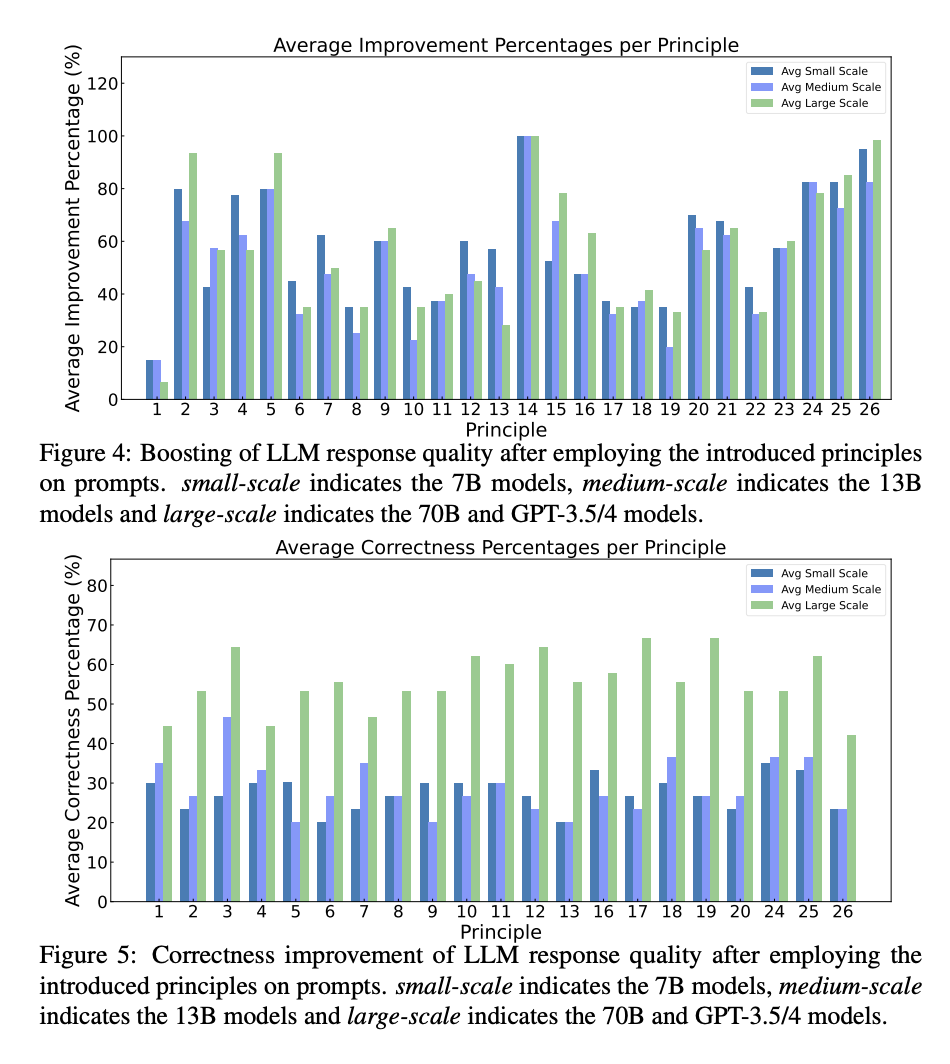

The authors ran experiments to validate the effectiveness of the proposed principles for instruction and prompt design. The experiments were conducted on LLaMA-1/2 (7B, 13B, 70B) and GPT-3.5/4 using ATLAS, a hand-crafted benchmark that contains 20 human-selected questions for each principle, each with and without the principle applied to the prompt.

The experiments were divided into two parts: Boosting and Correctness.

- Boosting is assessed by human evaluation of how much the quality of different LLMs' responses improves after the principles are applied. The original, unmodified prompt serves as the baseline against which this improvement is measured.
- Correctness concerns the precision of the model's outputs or responses, ensuring that they are accurate, relevant, and error-free. It is also measured by human assessment.
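As a rough illustration of how such human judgments could be turned into numbers, the sketch below computes a boosting rate (the share of questions whose response a human judged better with the principle than without) and a correctness gain (the difference in the share of correct responses). This article does not give the paper's exact formulas, so both definitions are assumptions, and the 20 judgments are made-up placeholder data, not results from the paper.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    """One human judgment for a single benchmark question (hypothetical schema)."""
    improved: bool        # principled response judged better than the baseline
    correct_before: bool  # baseline response judged correct
    correct_after: bool   # principled response judged correct


def boosting_rate(judgments):
    """Fraction of questions where applying the principle improved the response."""
    return sum(j.improved for j in judgments) / len(judgments)


def correctness_gain(judgments):
    """Absolute change in the fraction of correct responses (after minus before)."""
    n = len(judgments)
    before = sum(j.correct_before for j in judgments) / n
    after = sum(j.correct_after for j in judgments) / n
    return after - before


# Placeholder data for one principle: 20 questions, as in the benchmark setup.
judgments = [
    Judgment(improved=i < 15, correct_before=i < 10, correct_after=i < 14)
    for i in range(20)
]

print(f"boosting: {boosting_rate(judgments):.0%}")
print(f"correctness gain: {correctness_gain(judgments):.0%}")
```

A relative gain (after divided by before, minus one) would be an equally plausible reading of "improvement", so it is worth stating which one is meant when reporting numbers.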

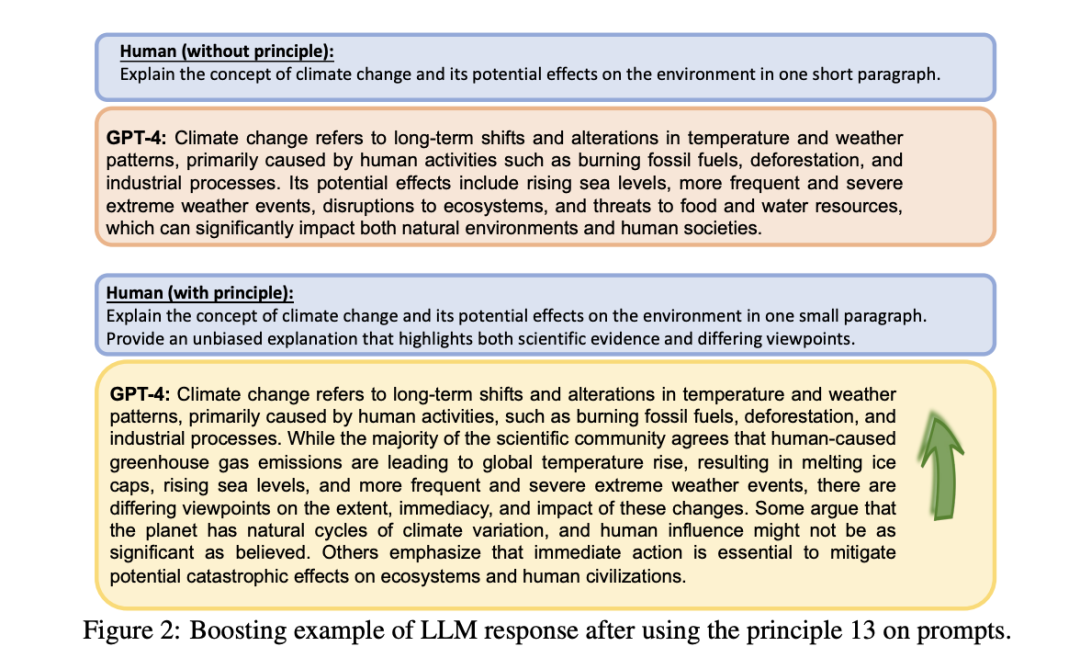

Image 2 shows GPT-4 explaining the concept of climate change and its potential impact on the environment in two scenarios. The top half is the human prompt without the principle, and the bottom half is the human prompt with the principle. Without the principle, GPT-4 simply describes climate change and its impacts. With Principle 13 applied, GPT-4 provides a more comprehensive and balanced perspective that covers both the scientific consensus and divergent views. This suggests that well-designed principles can improve the quality of model responses, making them more comprehensive and objective.

Image 3 shows GPT-4's responses when asked to assess the usefulness of recommendations, before and after Principle 7 is applied. Without the principle, GPT-4 simply labels the recommendation "useful" without giving supporting reasons. With Principle 7 applied, GPT-4 evaluates the recommendations in detail, for example identifying the recommendation "Getting to work" as "Not useful". This shows that principled prompts give the model's responses more depth and analytical power, increasing their level of detail and accuracy.

The experimental results detail the improvements obtained after applying these principles to small, medium, and large LLMs. In general, all principles lead to significant improvements across all three model sizes. In particular, for principles 2, 5, 15, 16, 25, and 26, the large models gain the most from the principled prompts. In terms of correctness, applying the principles typically leads to an improvement of more than 20% averaged across the various models.

Conclusion

These principles help the model focus on the key elements of the input context and can guide the LLM before the input is processed, promoting better responses. The experimental results show that the principles can reshape context that might otherwise degrade the quality of the output, making responses more relevant, concise, and objective. The authors note that future research will explore how to further refine models to align with these principles, possibly through methods such as fine-tuning, reinforcement learning, and preference optimization. Successful strategies may then be integrated into standard LLM operations, for example by training on principled prompts and their responses. In discussing limitations, the authors mention that although these principles help improve response quality, they may be less effective for very complex or domain-specific problems. This may depend on each model's reasoning ability and level of training.