As AI image generation technology keeps iterating, AI face swapping is also getting better and better.

In terms of technical routes, there are two main ones. The first swaps the face using a single reference picture. It is simple to operate, but the result often stops resembling the target once the pose changes. Plug-ins such as roop are based on this idea. The second trains a dedicated LoRA on 5-20 or more facial photos of the target and then uses it to generate a satisfactory face-swapped picture. Tools such as Miaoya Camera and EasyPhoto are based on this idea.

Now, a third route has emerged.

The InstantX team at Xiaohongshu published the paper "InstantID: Zero-shot Identity-Preserving Generation in Seconds" together with the inference code. They describe it as follows:

InstantID cleverly avoids training the UNet of the text-to-image model. By training only a lightweight pluggable module, it removes the need for test-time tuning at inference while keeping the flexibility of text control and a high fidelity of facial features.

How InstantID works can be broken down into three key parts:

ID Embedding: The team uses a pre-trained face recognition model instead of CLIP to extract semantic facial features, and a trainable projection layer to map these features into the text feature space. The resulting Face Embedding carries rich semantic information, including facial features, expression, and age, and provides a solid foundation for the subsequent image generation.

Image Adapter: A lightweight adapter module that combines the extracted identity information with the text prompt. It uses a decoupled cross-attention mechanism so that image and text influence the generation process independently, which preserves identity while still letting users finely control the image style.

IdentityNet: The team proposes a network called IdentityNet, which is the core of InstantID. It encodes the complex features of the reference face image using a strong semantic condition (such as a detailed description of facial features) and a weak spatial condition (such as the positions of facial keypoints). Inside IdentityNet, generation is guided entirely by the Face Embedding, without any text information. Only the newly added modules are trained, while the pre-trained text-to-image model stays frozen to preserve flexibility.
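The decoupled cross-attention mentioned for the Image Adapter can be illustrated with a small pure-Python sketch. This is not InstantID's code: it assumes the keys and values for the text branch and the identity branch have already been projected, and the names `attention`, `decoupled_cross_attention`, and `id_scale` are made up for illustration. The point is only that the two branches attend separately and their outputs are added.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors."""
    dim = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

def decoupled_cross_attention(queries, text_kv, id_kv, id_scale=1.0):
    """Attend to text and identity features separately, then add the results.

    text_kv and id_kv are (keys, values) pairs. id_scale is a hypothetical
    knob for how strongly the identity branch contributes.
    """
    text_out = attention(queries, *text_kv)
    id_out = attention(queries, *id_kv)
    return [[t + id_scale * i for t, i in zip(t_row, i_row)]
            for t_row, i_row in zip(text_out, id_out)]

# Toy usage: one query, one text token, one identity token.
queries = [[1.0, 0.0]]
text_kv = ([[1.0, 0.0]], [[2.0, 3.0]])
id_kv = ([[0.0, 1.0]], [[10.0, 20.0]])
mixed = decoupled_cross_attention(queries, text_kv, id_kv, id_scale=0.5)
```

Because the identity branch is simply added on top of the text branch, setting `id_scale` to 0 in this sketch falls back to plain text cross-attention, which is one way to see why the text prompt keeps its influence.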

During actual image generation, InstantID first receives the user's text prompt and face image. ID Embedding then extracts the key identity information, Image Adapter fuses it with the text prompt, and IdentityNet generates an image from the fused information.

The whole process is automatic, and users do not need to perform any additional fine-tuning or training. After about twenty seconds, you get a customized image that both matches the text description and retains the person's identity features.

InstantID comes in a standalone version and a ComfyUI version. Today I will show how to use the WebUI version.

1. Upgrade ControlNet to at least version 1.1.440.
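If you want to check the version requirement in a script, a minimal sketch follows. It assumes you read the version string yourself (for example from the ControlNet panel in WebUI); the helper names are hypothetical. Comparing the parts as integers matters because a plain string comparison would rank "1.1.99" above "1.1.440".

```python
MIN_CONTROLNET = "1.1.440"  # minimum version stated in step 1

def parse_version(text):
    """Turn '1.1.440' (optionally prefixed with 'v') into (1, 1, 440)."""
    return tuple(int(part) for part in text.strip().lstrip("v").split("."))

def controlnet_is_new_enough(installed, minimum=MIN_CONTROLNET):
    """Return True if the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)
```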

2. Download the models used by ControlNet from the link below. They fall into two categories.

https://www.123pan.com/s/ueDeVv-v1uI.html

(1) ControlNet model

There are two: ip-adapter_instant_id_sdxl.bin and control_instant_id_sdxl.safetensors.

After downloading, copy them to extensions\sd-webui-controlnet\models. For example, my WebUI is installed in E:\sd-webui, so I copy them to:

E:\sd-webui\extensions\sd-webui-controlnet\models

PS: you can also copy them to

E:\sd-webui\models\ControlNet

(2) Facial Recognition Model

There are 5 in total. Copy them to the following directory (my WebUI is in E:\sd-webui):

E:\sd-webui\extensions\sd-webui-controlnet\annotator\downloads\insightface\models\antelopev2
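Before opening WebUI, it can help to confirm that the files landed in the right places. The sketch below only uses the file names and directories given above; the function name and the shape of the returned dictionary are my own choices, and it simply counts the files in the antelopev2 folder rather than assuming their names.

```python
from pathlib import Path

# The two ControlNet model files named in step 2 (1).
CONTROLNET_MODELS = (
    "ip-adapter_instant_id_sdxl.bin",
    "control_instant_id_sdxl.safetensors",
)

def check_instantid_files(webui_root):
    """Report missing ControlNet models and count face recognition model files."""
    root = Path(webui_root)
    # Either of these two directories is acceptable for the ControlNet models.
    model_dirs = [
        root / "extensions" / "sd-webui-controlnet" / "models",
        root / "models" / "ControlNet",
    ]
    face_dir = (root / "extensions" / "sd-webui-controlnet" / "annotator"
                / "downloads" / "insightface" / "models" / "antelopev2")
    missing = [name for name in CONTROLNET_MODELS
               if not any((d / name).is_file() for d in model_dirs)]
    face_count = 0
    if face_dir.is_dir():
        face_count = sum(1 for p in face_dir.iterdir() if p.is_file())
    return {"missing_controlnet_models": missing, "face_model_files": face_count}

if __name__ == "__main__":
    # E:\sd-webui is the install location used in this article; adjust to yours.
    print(check_instantid_files(r"E:\sd-webui"))
```

An empty `missing_controlnet_models` list and a `face_model_files` count of 5 match what the steps above describe.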

3. Configuration interface

In the ControlNet configuration interface, we need to configure two pages (unit tabs).

First page:

First, upload the reference face that the output should resemble, for example a very familiar beautiful girl.

Pay attention to the following parameters.

(1) Select Enable.

(2) Select Instant_ID as the type.

(3) Select instant_id_face_embedding as the preprocessor.

(4) Select ip-adapter_instant_id_sdxl as the model. If the model list is empty or an error message is displayed, check whether the downloaded model was copied to the specified location.

Second page:

Upload a picture with the pose you want here. It does not need to show the person whose face you are using. InstantID analyzes the pose in this photo and adapts the face from the first photo to it. However, this is not a simple face swap: apart from the pose, the output is completely different from the pose picture, so it is effectively a regeneration.

(1) Note that if you have less than 16 GB of VRAM, you should enable the low VRAM optimization here; otherwise you will run out of VRAM.

(2) As in the first page, select Instant_ID.

(3) Select instant_id_face_keypoints for the preprocessor and control_instant_id_sdxl for the model.

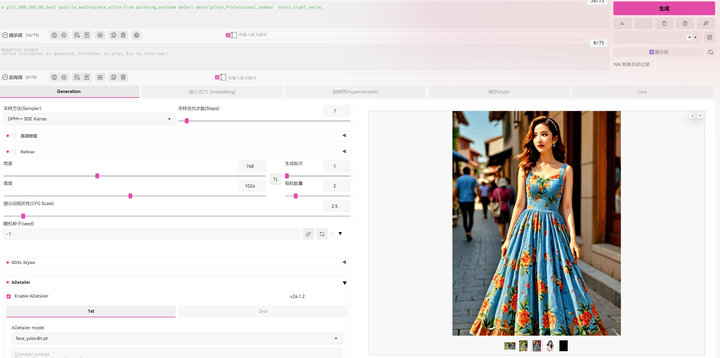
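For readers who drive WebUI through its API instead of the browser, the same two-page setup could be expressed roughly as the payload below. This is a sketch under assumptions: the field names (`enabled`, `module`, `model`, `image`, `low_vram`) follow the sd-webui-controlnet API as I understand it and may differ between versions, and the model names in your installation may carry a hash suffix. The function name is hypothetical, and nothing is sent to a server here.

```python
import json

def build_instantid_payload(prompt, face_image_b64, pose_image_b64, low_vram=False):
    """Build a txt2img-style payload with two ControlNet units for InstantID.

    face_image_b64 / pose_image_b64 are base64-encoded images (assumed format).
    """
    def unit(module, model, image_b64, use_low_vram=False):
        return {
            "enabled": True,           # "Enable" checkbox
            "module": module,          # preprocessor
            "model": model,            # ControlNet model
            "image": image_b64,        # uploaded picture
            "low_vram": use_low_vram,  # low VRAM optimization
        }

    return {
        "prompt": prompt,
        "alwayson_scripts": {
            "controlnet": {
                "args": [
                    # First page: reference face.
                    unit("instant_id_face_embedding",
                         "ip-adapter_instant_id_sdxl", face_image_b64),
                    # Second page: pose reference, with optional low VRAM mode.
                    unit("instant_id_face_keypoints",
                         "control_instant_id_sdxl", pose_image_b64, low_vram),
                ]
            }
        },
    }

payload = build_instantid_payload("a girl, summer dress", "<face b64>", "<pose b64>",
                                  low_vram=True)
payload_json = json.dumps(payload)  # body you would POST to the txt2img endpoint
```

The order of the two units mirrors the two pages above: the face embedding unit carries identity, and the keypoints unit carries pose.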

4. Enter the prompt and click "Generate".

Note that the prompt should not be too complicated; an overly complicated prompt can easily make Instant_ID ineffective.

For example, I enter the prompt:

a girl,HDR,UHD,8K,best quality,masterpiece,ultra-fine painting,extreme detail description,Professional,summer dress,light_smile,

Does it look familiar?

Another pose reference picture:

With the prompt unchanged, the result is as follows:

However, I feel the likeness is not as strong as advertised. What do you think?