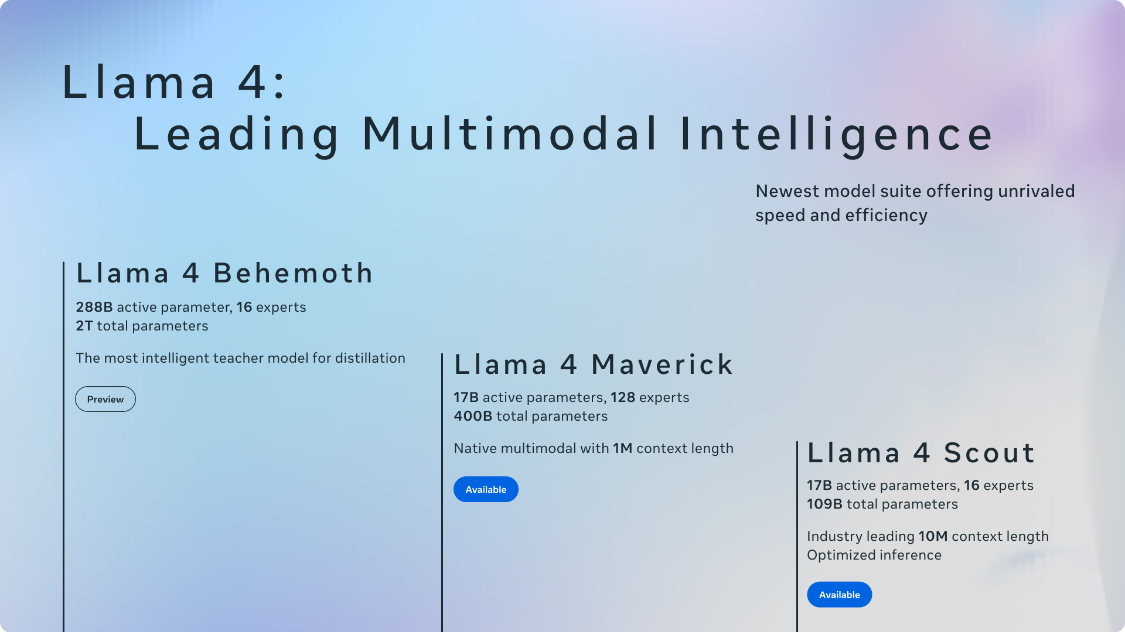

April 6 news: Meta has released its latest Llama 4 series of AI models, which includes Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth. Meta says the models were trained on "large amounts of unlabeled text, image, and video data" to give them "broad visual comprehension capabilities."

Meta has uploaded Scout and Maverick to Hugging Face, while the Behemoth model is still in training. Scout can run on a single NVIDIA H100 GPU, while Maverick requires an NVIDIA H100 DGX system or an "equivalent device".

Meta stated that the Llama 4 series models are the company's first to use a mixture-of-experts (MoE) architecture, which is more efficient both in training and in answering user queries. An MoE architecture essentially breaks a data processing task into subtasks and delegates them to smaller, specialized "expert" models.
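To make the routing idea concrete, here is a minimal toy sketch of an MoE layer in plain Python. It is not Meta's implementation: the function names (`softmax`, `moe_forward`), the four toy experts, and the router weights are all hypothetical, chosen only to show how a router scores the experts and how only the top-scoring ones actually run.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, top_k=1):
    """Route input x to the top_k experts and mix their outputs.

    Hypothetical toy layer: each router row scores one expert via a dot
    product with x; only the selected experts are evaluated.
    """
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize gates over chosen experts
    output = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm
        expert_out = experts[i](x)  # experts that are not chosen never run
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Four made-up "experts"; in a real model each would be a neural sub-network.
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
    lambda v: [a * a for a in v],
]
# Made-up router weights, one row per expert.
router_weights = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, 0.0],
    [0.0, -1.0],
]

output, chosen = moe_forward([3.0, 1.0], router_weights, experts, top_k=1)
print(chosen, output)
```

With `top_k=1` only one of the four experts is evaluated for this input, which is the reason an MoE model can hold many parameters in total while using only a fraction of them per query.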

For example, Maverick has 400 billion total parameters, but only 17 billion of them are active at a time, spread across 128 "expert" models (the number of parameters roughly corresponds to a model's problem-solving ability). Scout has 17 billion active parameters, 16 "expert" models, and 109 billion total parameters.
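As a quick illustration of what these figures imply, the share of parameters that is active per query can be computed directly from the numbers reported above. The dictionary layout and the helper name `active_fraction` are illustrative assumptions; only the parameter and expert counts come from the article.

```python
# Figures as reported in the article, in billions of parameters.
MODELS = {
    "Llama 4 Scout": {"total_b": 109, "active_b": 17, "experts": 16},
    "Llama 4 Maverick": {"total_b": 400, "active_b": 17, "experts": 128},
}


def active_fraction(total_b, active_b):
    """Share of the model's parameters that is active at a time."""
    return active_b / total_b


for name, spec in MODELS.items():
    share = active_fraction(spec["total_b"], spec["active_b"])
    print(f"{name}: {share:.1%} of parameters active, {spec['experts']} experts")
```

Both models activate the same 17 billion parameters, so Maverick's larger total means a much smaller active share than Scout's.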

It is worth noting, however, that none of the models in the Llama 4 series is a true "reasoning" model in the sense that OpenAI's o1 and o3-mini are. Reasoning models fact-check their answers and generally answer questions more reliably, but as a result they also take longer to respond than traditional "non-reasoning" models.

Meta's internal tests show that Maverick is best suited to "general purpose AI assistant and chat" scenarios, outperforming models such as OpenAI's GPT-4o and Google's Gemini 2.0 on creative writing, code generation, translation, reasoning, long-context summarization, and image benchmarks. However, Maverick still falls short of more capable recent models such as Google's Gemini 2.5 Pro, Anthropic's Claude 3.7 Sonnet, and OpenAI's GPT-4.5.

Scout's strengths are summarizing documents and reasoning over large codebases. The model supports a context window of 10 million tokens ("tokens" are fragments of the original text; for example, the word "fantastic" can be split into "fan", "tas", and "tic"), so it can process "up to millions of words" of text at a time.
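The "fan", "tas", "tic" split can be reproduced with a toy tokenizer. The sketch below uses greedy longest-match against a tiny made-up vocabulary; it is not the tokenizer Llama 4 uses, and `greedy_tokenize`, `TOY_VOCAB`, and `fits_in_context` are hypothetical names. The 10 million figure is the limit reported for Scout above.

```python
CONTEXT_WINDOW_TOKENS = 10_000_000  # Scout's reported limit

# Made-up vocabulary for illustration only.
TOY_VOCAB = {"fan", "tas", "tic", "fa", "ta"}


def greedy_tokenize(word, vocab):
    """Split word into the longest vocabulary pieces, left to right."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # Nothing in the vocabulary matches: emit a single character.
            tokens.append(word[start])
            start += 1
    return tokens


def fits_in_context(token_count, limit=CONTEXT_WINDOW_TOKENS):
    """Check whether an input of token_count tokens fits within the limit."""
    return token_count <= limit


tokens = greedy_tokenize("fantastic", TOY_VOCAB)
print(tokens, fits_in_context(len(tokens)))
```

Real tokenizers learn their vocabulary from data, so the actual split of any given word depends on the model.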

1AI notes that Meta also teased its Behemoth model, which according to the company has 288 billion active parameters. Meta's internal benchmarks show Behemoth outperforming GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro, but not Gemini 2.5 Pro, on a number of evaluations that measure science, technology, engineering, and math (STEM) skills such as solving math problems.