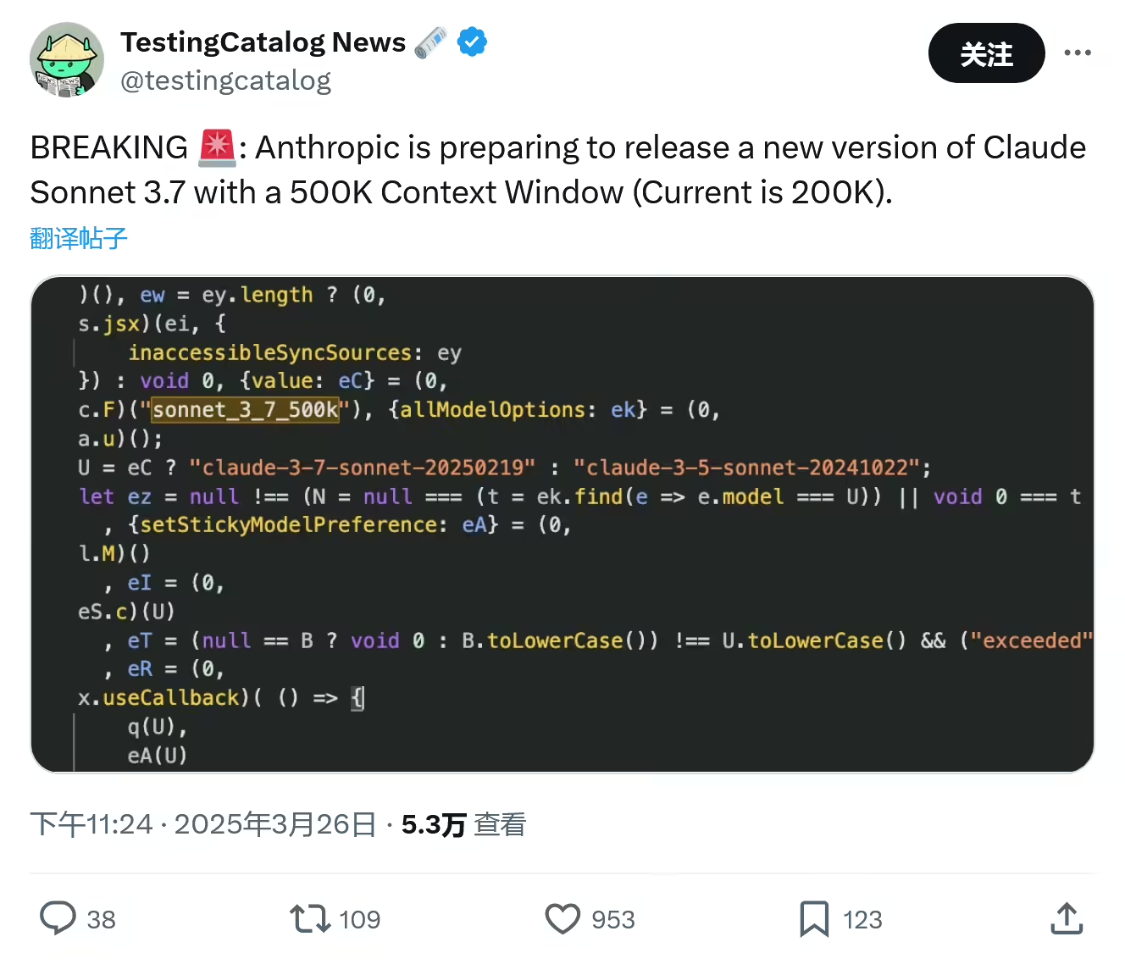

March 27, 2025 - Tech outlet testingcatalog published a blog post yesterday (March 26) reporting that AI company Anthropic is preparing to expand the context window of its Claude 3.7 Sonnet model from 200,000 tokens to 500,000 tokens.

A 500,000-token window can process massive amounts of information directly, avoiding the context-mismatch problems that retrieval-augmented generation (RAG) can introduce. This makes it suitable for complex tasks such as policy document analysis, managing very large codebases (e.g., hundreds of thousands of lines of code), and cross-document summarization. However, the outlet also noted that such a large context may strain memory and raise compute costs, and how effectively the model actually uses the full window remains to be verified.

1AI note: The context window is the span of preceding content that a model actually refers to when generating each new token. It is comparable to how much you can keep in focus at any given moment, much as a person can attend to only a limited number of tasks at once.

The context window determines how much contextual information the model can draw on during generation. This helps the model produce coherent, relevant text without drawing on so much context that its output becomes confused or irrelevant.
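To make the idea concrete, here is a minimal Python sketch under simplifying assumptions: text is already split into tokens (real models use subword tokenizers, not whole words), and anything beyond the window is simply dropped from the front. The helper names `visible_context` and `fits_in_window` are hypothetical and do not describe how Claude or Anthropic's API handles oversized input. The two constants use the window sizes from the report.

```python
from typing import List, Sequence

# Window sizes as stated in the report (in tokens).
CURRENT_WINDOW = 200_000
REPORTED_WINDOW = 500_000


def visible_context(tokens: Sequence[str], window: int) -> List[str]:
    """Return the tokens a fixed-window model could attend to when
    generating the next token: only the most recent `window` tokens.

    Assumption: overflow is handled by dropping the oldest tokens,
    which is a simplification for illustration only.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    # Slicing from the end keeps the last `window` tokens; if the input is
    # shorter than the window, the whole input is returned.
    return list(tokens[-window:])


def fits_in_window(num_tokens: int, window: int) -> bool:
    """True if an input of `num_tokens` tokens fits in a single pass."""
    return num_tokens <= window


if __name__ == "__main__":
    history = ["The", "cat", "sat", "on", "the", "mat"]
    print(visible_context(history, 4))  # ['sat', 'on', 'the', 'mat']
    # A hypothetical 350,000-token input exceeds the current window
    # but would fit in the reported 500,000-token window.
    print(fits_in_window(350_000, CURRENT_WINDOW))   # False
    print(fits_in_window(350_000, REPORTED_WINDOW))  # True
```

The sketch only shows the size constraint; whether a model uses a long window well (the open question the report raises) is not something a length check can capture.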

Anthropic has long focused on enterprise solutions, and this upgrade directly targets the ultra-long-context advantage held by competitors such as Google Gemini.

The upgrade also coincides with the rise of AI-driven "vibe coding", in which developers generate code from natural-language descriptions. A 500,000-token window would support continuous development on larger projects, reduce interruptions caused by token limits, and further lower the barrier to programming.