On March 24, DeepSeek announced in its official user group that the DeepSeek-V3 model has received a minor version upgrade. Users can try it on the official website, app, and mini program (with Deep Thinking turned off); the API interface and usage remain unchanged.

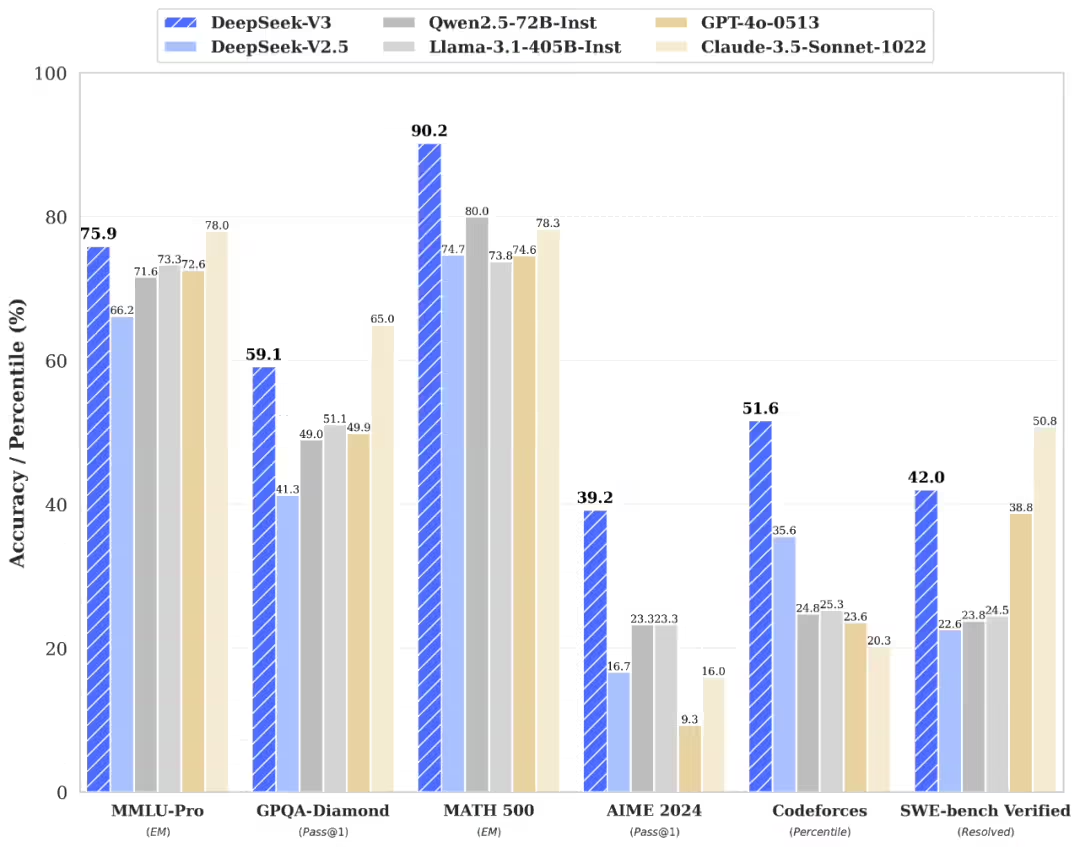

DeepSeek did not disclose the specific details of this upgrade. According to 1AI, DeepSeek-V3 was released and open-sourced in December last year. It is a Mixture-of-Experts (MoE) model developed in-house by DeepSeek, with 671B parameters. On a number of benchmarks, DeepSeek-V3 outperforms other open-source models such as Qwen2.5-72B and Llama-3.1-405B, and its performance is comparable to that of the world's top closed-source models, GPT-4o and Claude-3.5-Sonnet.

In January this year, DeepSeek also released and open-sourced the DeepSeek-R1 reasoning model, whose performance is on par with the official release of OpenAI o1. It has rapidly gained popularity worldwide thanks to its low training cost and high performance.