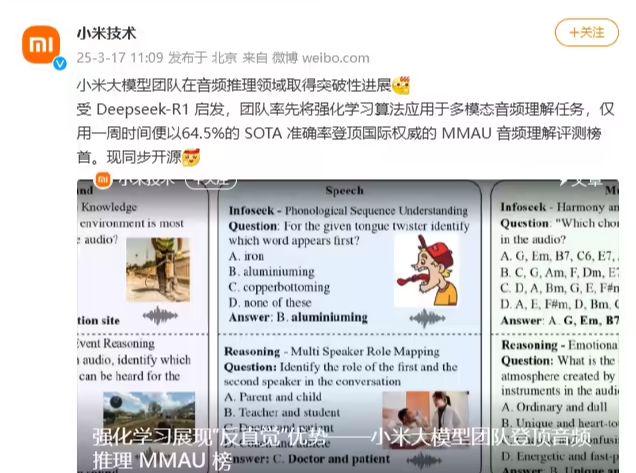

March 17, @MilletTechnology official microblogging today said that XiaomiLarge ModelTeam inAudio ReasoningThe team has made breakthrough progress in the field of multimodal audio understanding. Inspired by DeepSeek-R1, the team is the first to apply reinforcement learning algorithms to multimodal audio comprehension tasks, and in just one week, the team has topped the international authoritative MMAU audio comprehension review with a SOTA accuracy of 64.5%, which is now open-sourced.

1AI attached the full official text below:

Reinforcement Learning Demonstrates "Counterintuitive" Advantage -- Xiaomi's Big Model Team Tops Audio Reasoning MMAU Chart

Faced with a recording of a car's cockpit in motion, can AI determine if there is a potential malfunction in the car? At a symphony performance, can AI predict the mood of the composer when creating the music? In the chaos of footsteps at a subway station during the morning rush hour, can AI predict the risk of a potential collision at the gate? In the era of big models, people are no longer satisfied with machines just recognizing the content of speech and the type of voice, but expect them to have the ability of complex reasoning.

The MMAU (Massive Multi-Task Audio Understanding and Reasoning) review set (https://arxiv.org/ abs / 2410.19168) is a quantitative scale of this audio reasoning ability, which is measured by 10,000 audio samples covering speech, ambient sound, and music, combined with 10,000 audio samples covering speech and ambient sound and music, combined with Q&A pairs labeled by human experts, to test the model's performance on 27 skills, such as cross-scene reasoning, expertise, and other applications, with the expectation that the model achieves a level of logical analysis close to that of a human expert.

As a benchmark ceiling, human experts on MMAU achieved an accuracy of 82.231 TP3T. This is a difficult set of reviews, and the best performing model on the current MMAU official list is GPT-4o from OpenAI, with an accuracy of 57.31 TP3T. A close second is Gemini 2.0 Flash from Google DeepMind, with an accuracy of 55.61 TP3T. Gemini 2.0 Flash from Google DeepMind, with an accuracy of 55.61 TP3T.

The Qwen2-Audio-7B model from Ali has an accuracy of 49.21 TP3T on this review set. Due to its open-source nature, we attempted to fine-tune this model using a smaller dataset, the AVQA dataset released by Tsinghua University (https://mn.cs.tsinghua.edu.cn/ avqa/). The AVQA The AVQA dataset contains only 38,000 training samples, and with full supervised fine-tuning (SFT), the accuracy of the model on MMAU is improved to 51.8%. This is not a particularly significant improvement.

Our research on this task was inspired by the release of DeepSeek-R1, a Group Relative Policy Optimization (GRPO) methodology that allows models to evolve autonomously through trial-and-error-reward mechanisms alone, emerging with human-like reasoning capabilities such as reflection and multi-step verification. At the same time, Carnegie Mellon University released a preprint of the paper "All Roads Lead to Likelihood: The Value of Reinforcement Learning in Fine-Tuning (https://arxiv.org/ abs / 2503.01067)". 2503.01067)", which made an interesting assertion through sophisticated experiments: reinforcement learning has a unique advantage over supervised fine-tuning when there is a significant generation-verification gap, i.e., the task is much more difficult to generate than to verify the correctness of the results.And AQA tasks happen to be perfect for tasks with significant generation-validation gaps.

As an analogy, offline fine-tuning methods, such as SFT, are a bit like memorizing a question bank, where you can only train based on existing questions and answers, but may not be able to do the new questions you encounter; whereas reinforcement learning methods, such as GRPO, are like the teacher asking you to think of a few more answers, and then the teacher tells you which answer is good, so that you can actively think and stimulate your own ability, rather than being " Fill-in-the-blank" teaching. Of course, if the amount of training is sufficient, for example, some students are willing to spend many years to memorize the questions, may eventually achieve good results, but the efficiency is too low, wasting too much time. Active thinking, on the other hand, is more likely to achieve the effect of learning by example quickly. Reinforcement learning's real-time feedback may help the model target areas of the distribution of high-quality answers more quickly, whereas offline methods require traversing the entire possibility space, which is much less efficient.

Based on the above insights.We try to migrate the GRPO algorithm of DeepSeek-R1 to the Qwen2-Audio-7B model. Surprisingly, with only 38,000 training samples from AVQA, theThe reinforcement learning fine-tuned model achieves an accuracy of 64.5% on the MMAU review set, a result that is nearly 10 percentage points better than GPT-4o, the commercial closed-source model that is currently number one on the list.

Interestingly, when we force the model to output the reasoning process during training (similar to the traditional chain-of-consciousness approach), the accuracy instead drops to 61.11 TP3T. this suggests that explicit chain-of-consciousness result output may not be conducive to model training.

Our experiments reveal several conclusions that are different from traditional perceptions:

- On fine-tuning methods: reinforcement learning significantly outperforms supervised learning on the 38,000 dataset with 570,000 datasets

- Regarding parameter size: compared to the hundreds of billions of models, a 7B parameter model can also show strong inference ability through reinforcement learning.

- On implicit reasoning: explicit thought chain output instead becomes a performance bottleneck

Although the current accuracy rate has exceeded 64%, it is still far from the level of 82% of human experts. In our current experiments, the reinforcement learning strategy is still relatively rough, and the training process is not sufficiently guided by the chain of thought, which we will do further exploration in the follow-up.

This experiment verifies the unique value of reinforcement learning in the field of audio reasoning and opens a new door for subsequent research. When the machine can not only "hear" the sound, but also "understand" the causal logic behind the sound, the era of truly intelligent hearing will come.

We open-source the training code, model parameters, and provide technical reports for academic industry reference and exchange.

Training code:https://github.com/xiaomi-research/r1-aqa

Model parameters:https://huggingface.co/mispeech/r1-aqa

Technical report:https://arxiv.org/abs/2503.11197

Interaction Demo:http://120.48.108.147:7860/