March 6, 2025 - 1AI has learned from the Tencent Hunyuan WeChat official account that Tencent Hunyuan has released its image-to-video model and made it open source. Hunyuan has also launched lip-sync and motion-driven features, and supports generating background sound effects and 2K high-quality video.

With the image-to-video capability, users only need to upload an image and briefly describe how they want it to move and how the camera should move. Hunyuan then animates the image as requested and turns it into a 5-second video with automatically generated background sound effects. In addition, by uploading an image of a character and entering the text or audio you want it to "lip-sync", you can make the character in the image "talk" or "sing". With the "motion-driven" capability, you can also generate a dance video of the same character in one click.

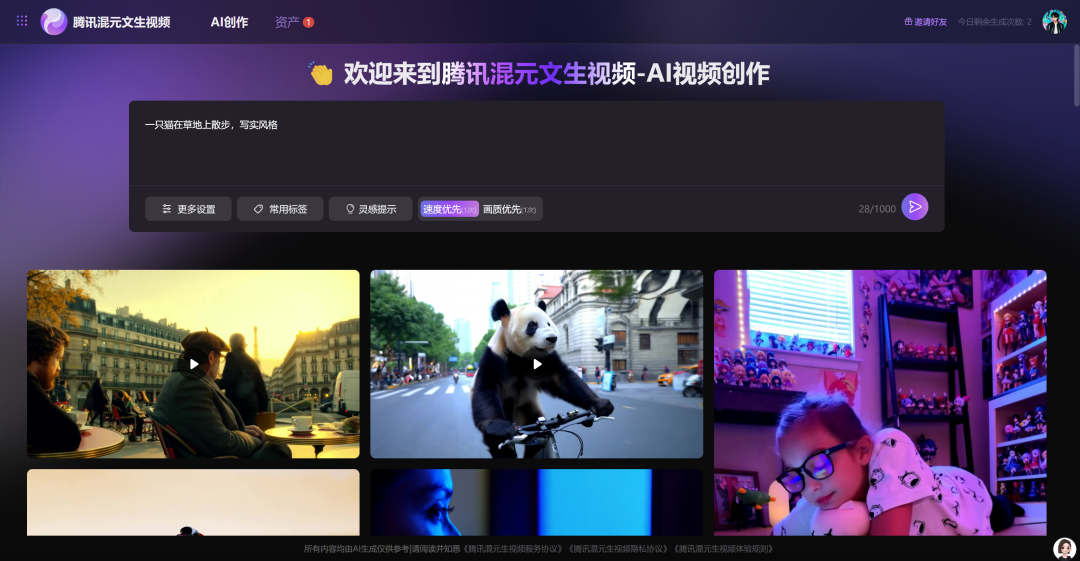

Currently, users can try these features on the official Hunyuan AI Video website (https://www.1ai.net/26196.html), and enterprises and developers can apply to use the API on Tencent Cloud.

The open-source image-to-video model continues Hunyuan's earlier open-source work on its text-to-video model and keeps the same total of 13 billion parameters. The model suits many types of characters and scenes, including realistic video production, anime characters, and even CGI characters.
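To give a rough sense of what 13 billion parameters means in practice, the sketch below estimates how much memory the weights alone would occupy at common numeric precisions. It assumes the 13 billion figure covers the weights that must be loaded and that each parameter takes 4, 2, or 1 bytes; activations, the text encoder, and the VAE are not counted, so actual inference memory will be higher.

```python
# Back-of-the-envelope estimate of weight storage for a 13B-parameter model.
# Assumptions: the "13 billion parameters" figure from the article, and a fixed
# number of bytes per parameter for each precision. Activations, the text
# encoder, and the VAE are NOT included.

NUM_PARAMS = 13_000_000_000  # 13 billion parameters

BYTES_PER_PARAM = {
    "fp32": 4,  # 32-bit floats
    "bf16": 2,  # 16-bit floats, a common choice for inference
    "fp8": 1,   # 8-bit floats, used by some quantized releases
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return weight storage size in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.0f} GB")
```

Under these assumptions, the weights need about 52 GB in fp32, 26 GB in bf16, and 13 GB in fp8.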

The open-source release includes the model weights, inference code, and LoRA training code, so developers can train their own LoRAs and other derivative models based on Hunyuan. It can currently be downloaded from GitHub, Hugging Face, and other mainstream developer communities.
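LoRA (low-rank adaptation) is what makes training such derivative models affordable: instead of updating a full d x k weight matrix W, it trains two small factors B (d x r) and A (r x k) and uses W + BA, which adds only r(d + k) trainable parameters per layer. The sketch below compares the two counts; the layer size and rank are hypothetical illustration values, not taken from the HunyuanVideo-I2V code.

```python
# Compare trainable parameters for full fine-tuning vs. LoRA on one linear layer.
# LoRA replaces the update to W (d x k) with B @ A, where B is d x r and A is r x k.
# The values of d, k, and r below are hypothetical examples.


def full_params(d: int, k: int) -> int:
    """Trainable parameters when updating the whole d x k matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters in the low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)


d, k, r = 3072, 3072, 64  # hypothetical layer size and LoRA rank
full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

For this hypothetical layer, LoRA trains 393,216 parameters instead of 9,437,184, roughly 4% of the full count.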

According to Hunyuan's open-source technical report, the Hunyuan video generation model is highly scalable: its image-to-video and text-to-video versions are pre-trained on the same dataset. While retaining ultra-realistic image quality, smooth large-scale motion, and native camera cuts, the model can capture rich visual and semantic information and combine multiple input conditions, such as images, text, audio, and poses, to achieve multi-dimensional control over the generated video.

At present, Hunyuan's open-source model series covers text, image, video, 3D generation, and other modalities, and has received attention and stars from more than 23,000 developers on GitHub.

Attachment: Hunyuan image-to-video open-source links

GitHub: https://github.com/Tencent/HunyuanVideo-I2V

Hugging Face: https://huggingface.co/tencent/HunyuanVideo-I2V