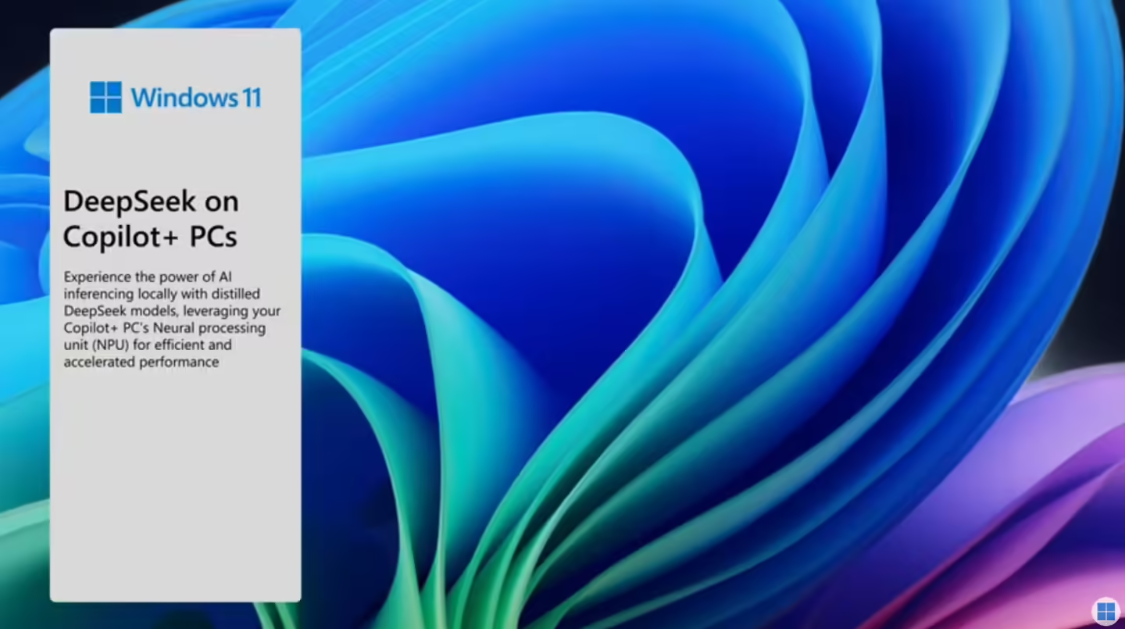

March 4 news: Microsoft today announced that the DeepSeek-R1 7B and 14B distilled models are now accessible through Azure AI Foundry, giving Copilot+ PCs the ability to run 7B and 14B models locally.

Back in January, Microsoft announced plans to bring an NPU-optimized version of the DeepSeek-R1 model directly to Copilot+ PCs with Qualcomm Snapdragon X processors. Now, that promise has finally been realized.

1AI learned from the official Microsoft blog that the models will go live first on Copilot+ PCs with Qualcomm Snapdragon X processors, followed by Intel Core Ultra 200V and AMD Ryzen devices.

Because the model runs on the NPU, AI compute stays continuously available with less impact on PC battery life and thermal performance, and the CPU and GPU remain free for other tasks.

Microsoft emphasized that it used Aqua, an internal automatic quantization tool, to quantize all DeepSeek model variants to int4 weights. Unfortunately, token generation speeds are quite low. Microsoft reported that the 14B model generates only about 8 tok/sec, while the 1.5B model reaches nearly 40 tok/sec. Microsoft said it is working on further optimizations to increase the speed.
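To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes exactly 4 bits per weight with no quantization overhead (scales, zero points, or activations are ignored) and treats the reported speeds as constant; the function names and the 500-token reply length are illustrative choices, not anything from Microsoft.

```python
# Back-of-the-envelope estimates based on the figures reported above.
# Assumptions (not from Microsoft): weights take exactly 4 bits each,
# and the token speed stays constant for the whole reply.

def int4_weight_gib(params_billion: float) -> float:
    """Approximate size of the weights alone at 4 bits per parameter, in GiB."""
    total_bits = params_billion * 1e9 * 4
    return total_bits / 8 / 2**30


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to produce num_tokens at a fixed generation speed."""
    return num_tokens / tokens_per_second


# Speeds as reported in the article ("nearly 40" is rounded to 40 here).
REPORTED_TOK_PER_SEC = {"1.5B": 40.0, "14B": 8.0}
PARAMS_BILLION = {"1.5B": 1.5, "14B": 14.0}

if __name__ == "__main__":
    reply_tokens = 500  # hypothetical reply length
    for name, speed in REPORTED_TOK_PER_SEC.items():
        size = int4_weight_gib(PARAMS_BILLION[name])
        wait = seconds_to_generate(reply_tokens, speed)
        print(f"{name}: ~{size:.2f} GiB of int4 weights, "
              f"~{wait:.1f} s for {reply_tokens} tokens")
```

Under these assumptions, a 500-token reply takes roughly 62.5 seconds on the 14B model versus about 12.5 seconds on the 1.5B model, which is why the reported speeds matter for interactive use.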

Developers can download and run the 1.5B, 7B, and 14B versions of the DeepSeek models on Copilot+ PCs via the AI Toolkit VS Code extension.