Following Tencent's Hunyuan, Alibaba has also open-sourced its video generation model, Tongyi Wanxiang 2.1 (Wan2.1), claiming SOTA-level performance. Highlights include:

1. It outperforms existing open-source models and is even "comparable to some closed-source models".

2. It is the first video model that can generate both Chinese and English text.

3. It supports consumer GPUs; the T2V-1.3B model requires only 8.19GB of video memory.

Wan2.1 can now be deployed locally through ComfyUI. Here's how:

I. Installation of the necessary tools

Make sure you have unrestricted network access and that Python, Git, and the latest version of ComfyUI are installed (if not, see the second half of this post, "ComfyUI Latest Version Installation", for installation instructions).
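As a quick sanity check before continuing, the prerequisites can be verified from a script. This is a minimal sketch, not part of ComfyUI: the helper name `check_prerequisites` and the minimum Python version of 3.9 are assumptions made for illustration, so adjust them to whatever your ComfyUI release actually requires.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 9)):
    """Report whether Python is recent enough and Git is on the PATH.

    min_python is an assumed threshold, not an official ComfyUI requirement.
    """
    return {
        "python": sys.version_info >= min_python,
        "git": shutil.which("git") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

The script only reports what it finds; it does not install anything.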

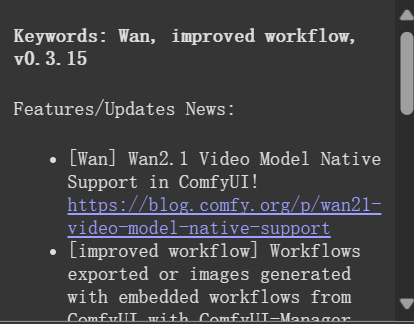

After updating ComfyUI to the latest version, the main interface shows a notice that Wan2.1 is now supported:

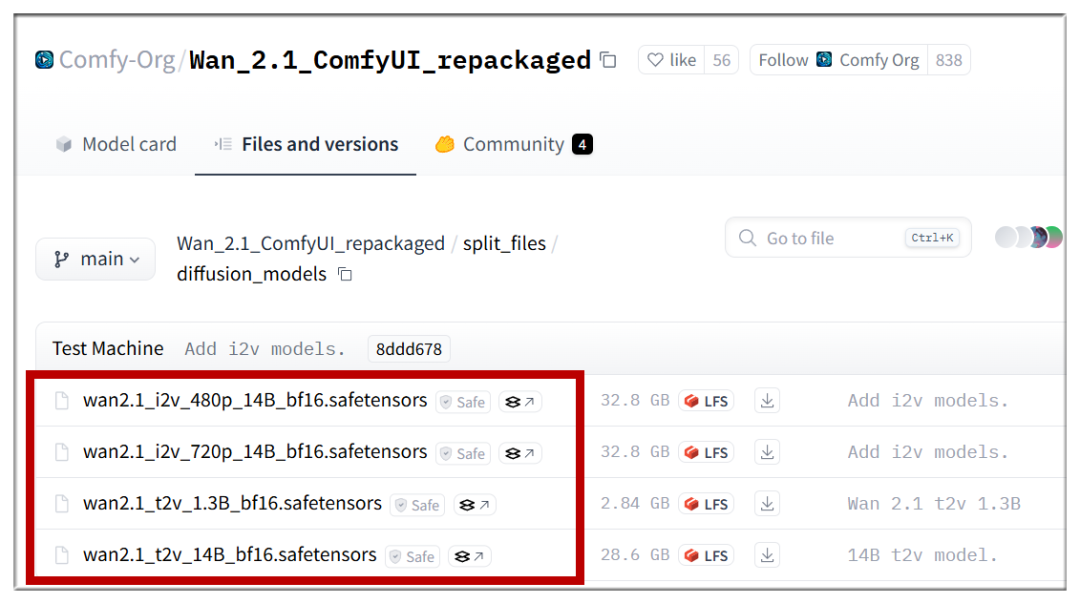

II. Downloading the models

Next, download the models in four parts.

1. Open the URL:

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/split_files/diffusion_models

Download the models you need from this page and put them into the ComfyUI/models/diffusion_models directory. Downloading all of them takes more than 80GB.

2. Download the following file and put it into the ComfyUI/models/text_encoders directory:

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors?download=true

3. Download the following file and put it into the ComfyUI/models/clip_vision directory:

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/clip_vision/clip_vision_h.safetensors?download=true

4. Download the following file and put it into the ComfyUI/models/vae directory:

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/vae/wan_2.1_vae.safetensors?download=true
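Steps 2 to 4 can also be scripted. The sketch below maps the three URLs listed above to their target directories and downloads any file that is not already present. The helper names `download_plan` and `download_all`, and the `comfy_root` argument (the path to your ComfyUI folder), are hypothetical names introduced here. The diffusion models from step 1 are left out because which ones you need depends on your hardware.

```python
from pathlib import Path
from urllib.parse import urlsplit
from urllib.request import urlretrieve

BASE = ("https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged"
        "/resolve/main/split_files")

# (path under split_files, ComfyUI/models subdirectory), taken from steps 2-4.
FILES = [
    ("text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors", "text_encoders"),
    ("clip_vision/clip_vision_h.safetensors", "clip_vision"),
    ("vae/wan_2.1_vae.safetensors", "vae"),
]


def download_plan(comfy_root):
    """Return (url, destination) pairs without downloading anything."""
    models = Path(comfy_root) / "models"
    plan = []
    for rel, subdir in FILES:
        url = f"{BASE}/{rel}?download=true"
        filename = Path(urlsplit(url).path).name  # drops the query string
        plan.append((url, models / subdir / filename))
    return plan


def download_all(comfy_root):
    """Download every planned file that does not exist yet."""
    for url, dest in download_plan(comfy_root):
        dest.parent.mkdir(parents=True, exist_ok=True)
        if not dest.exists():
            print(f"Downloading {dest.name} ...")
            urlretrieve(url, dest)


if __name__ == "__main__":
    download_all("ComfyUI")  # assumed location of the ComfyUI folder
```

Calling `download_plan("ComfyUI")` first is a cheap way to confirm the destinations before starting the multi-gigabyte downloads.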

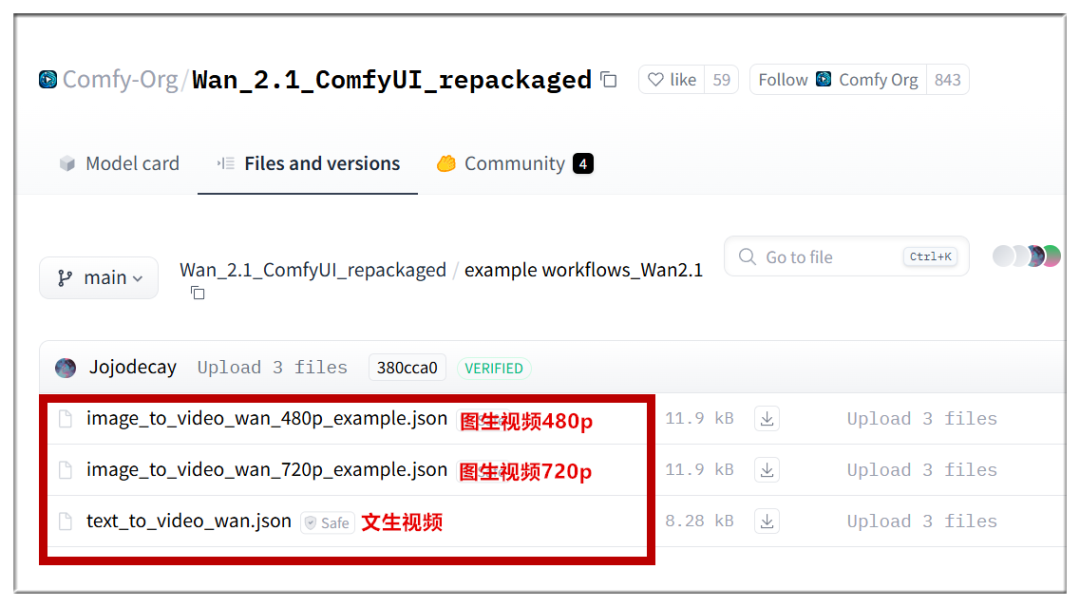

III. Downloading workflows

Next, go to the workflow page at the following URL and download the three workflows:

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/example%20workflows_Wan2.1

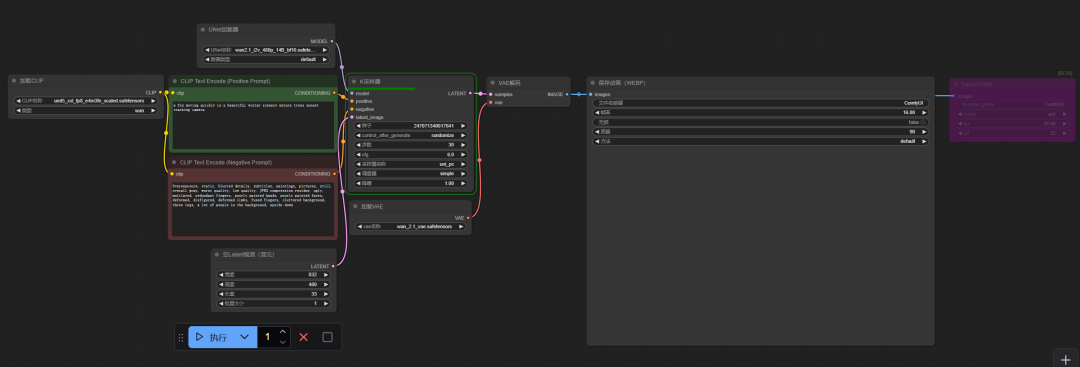

Drag a workflow file onto the ComfyUI interface to load it.

IV. Basic Functional Tests

The installation is now complete, so let's first test text-to-video (using T2V-14B).

Prompt: a big-breasted Victoria's Secret model in a tiny red bikini, smiling on a Mediterranean beach, with a few yachts on the sea in the distance and a blue sky with a few white clouds

Prompt: a big-breasted Victoria's Secret model in a tiny red bikini on a Mediterranean beach, smiling and holding up a sign that says "welcome."

Prompt: a big-breasted Victoria's Secret model in a tiny red bikini on a Mediterranean beach, smiling and holding up a sign that says "I love China."

Next, test image-to-video (using I2V-14B-480P) with an input image and the following prompt.

Prompt: a girl laughing and talking in a car, with vehicles moving outside the window

ComfyUI does not yet officially support video-to-video (using T2V-14B), but kijai's workflow already does. Here the video generated above is used as the input, and the character in the frame is modified.

Prompt: a cyborg girl sitting in a car

Note: kijai's workflows and models are a separate download; see the link to the code page at the bottom of this article for details.

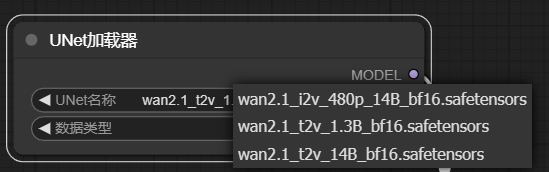

Also note that because Wan2.1 involves multiple models, make sure you have selected the correct model in the ComfyUI interface before generating.
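To see which model files are actually available to choose from, the model folders can be listed from a script. This is a minimal sketch: `list_models` is a hypothetical helper, and it assumes the diffusion models were saved to ComfyUI/models/diffusion_models alongside the text_encoders, clip_vision, and vae folders used earlier.

```python
from pathlib import Path

# Subdirectories of ComfyUI/models used in this guide.
# "diffusion_models" is an assumption; the others are named in the download steps.
SUBDIRS = ["diffusion_models", "text_encoders", "clip_vision", "vae"]


def list_models(comfy_root):
    """Map each model subdirectory to the sorted .safetensors files it contains."""
    models = Path(comfy_root) / "models"
    found = {}
    for sub in SUBDIRS:
        folder = models / sub
        if folder.is_dir():
            found[sub] = sorted(p.name for p in folder.glob("*.safetensors"))
        else:
            found[sub] = []  # a missing folder is reported as empty
    return found


if __name__ == "__main__":
    for sub, names in list_models("ComfyUI").items():
        print(f"{sub}: {', '.join(names) if names else '(none)'}")
```

An empty entry for a folder usually means a download step was skipped or the file was saved elsewhere.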

V. Preliminary test conclusions

1. In terms of output quality, Alibaba's Wan2.1 is on par with Tencent's Hunyuan, and it follows prompts more closely.

2. It can indeed generate Chinese text, but only some attempts produce the correct result, so expect to regenerate several times.

3. In terms of speed, on an RTX 4090, text-to-video with the T2V-14B model takes about 8 minutes to generate 5 seconds of 480P video (without quantization or other optimizations). T2V-1.3B takes only about 2 minutes, at the expense of quality. Image-to-video (I2V) is faster than 14B text-to-video, generating 5 seconds of video in about 3 minutes on the 4090.

4. Text-to-video offers 1.3B and 14B models to choose from according to your hardware; image-to-video offers only the 14B model, at either 480P or 720P resolution.
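To make the timings in point 3 easier to compare, they can be expressed as seconds of compute per second of generated video. The sketch below uses only the approximate figures reported above (4090, 5-second 480P clips); the function name is made up for illustration.

```python
def compute_seconds_per_video_second(minutes, clip_seconds=5):
    """Convert 'N minutes for a clip' into compute seconds per video second."""
    return minutes * 60 / clip_seconds


# Approximate timings from the tests above, in minutes per 5-second clip.
timings = {"T2V-14B": 8, "T2V-1.3B": 2, "I2V-14B-480P": 3}

for name, minutes in timings.items():
    ratio = compute_seconds_per_video_second(minutes)
    print(f"{name}: about {ratio:.0f} s of compute per second of video")
```

By this measure T2V-14B costs about 96 seconds per second of video, I2V about 36, and T2V-1.3B about 24.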

Links referenced in this article

The official Wan2.1 code page:

https://github.com/Wan-Video/Wan2.1

The official ComfyUI support page for Wan2.1:

https://blog.comfy.org/p/wan21-video-model-native-support

The Wan2.1 ComfyUI wrapper code page (kijai):

https://github.com/kijai/ComfyUI-WanVideoWrapper