Last year was the year AI video took off. In January 2023, there was no public text-to-video model. Today there are dozens of AI video generation products with millions of users. Let's review the development of AI-generated video and the noteworthy technologies and applications of the past year. The review covers the following aspects:

- Current AI video classification

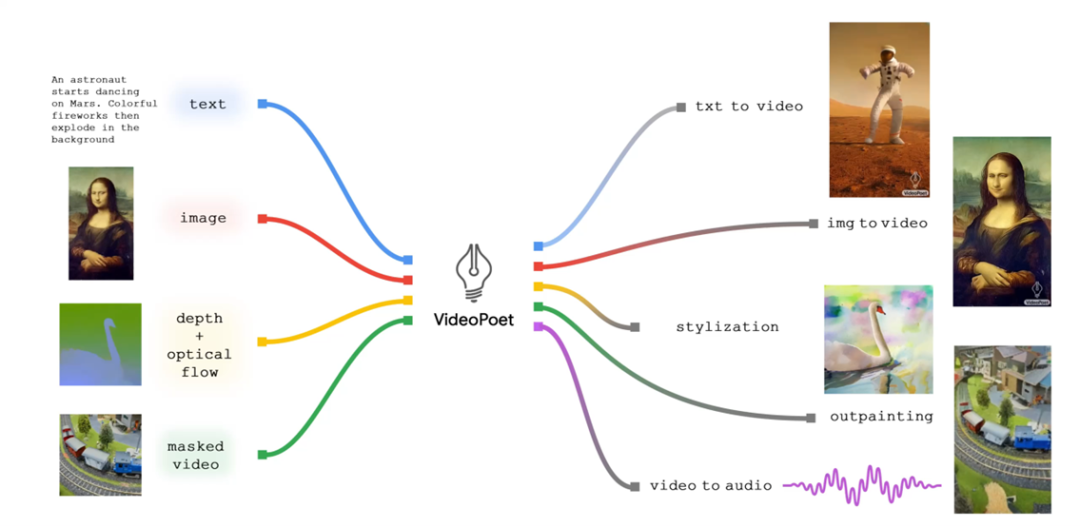

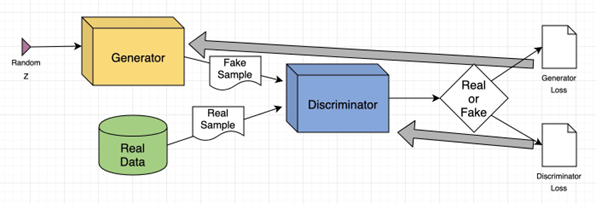

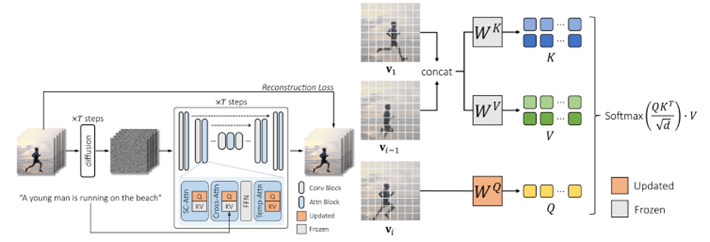

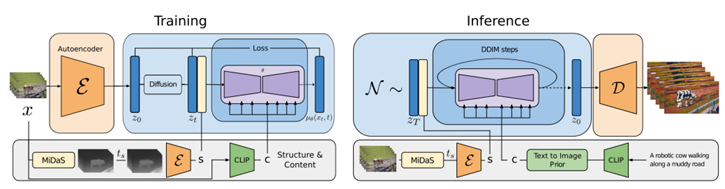

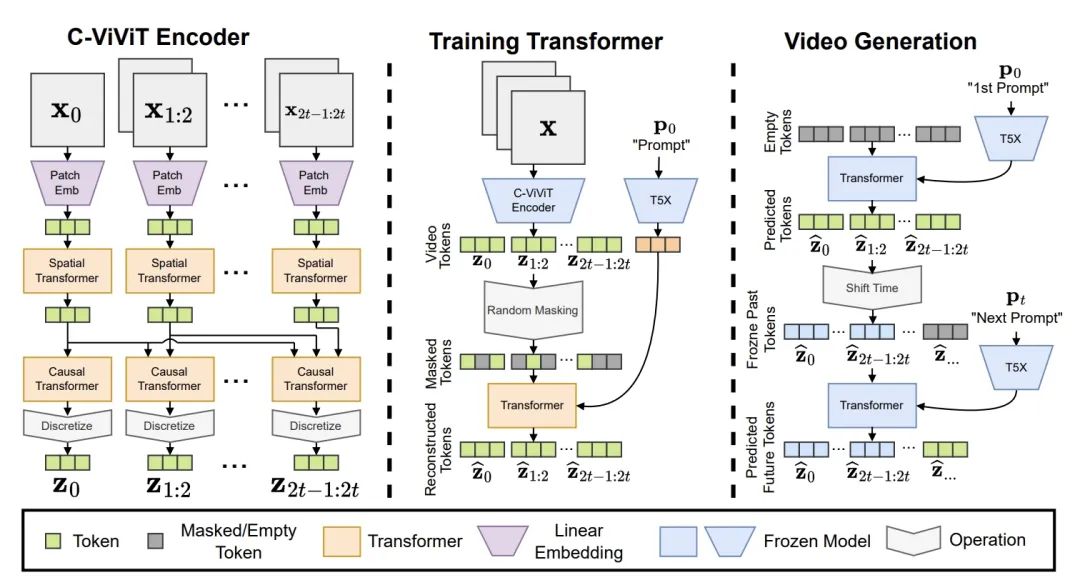

- Generative AI video technology

- AI video extension technology and applications

- Generative AI Video Outlook

- Challenges of generative AI video

AI Video Classification

1. Text/image-to-video generation

2. Video-to-video generation

3. Digital humans

4. Video editing

AI video extension technology and applications

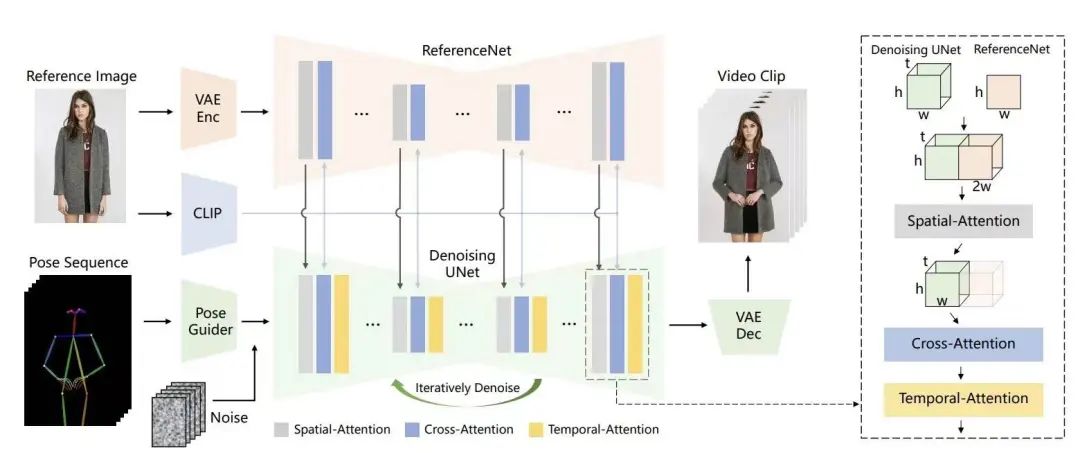

"Photo Dance" - Animate anyone

Based on a diffusion model combined with ControlNet-related techniques

"Converting live-action video into animation" - DomoAI

AI Video Technology Outlook

"A unified future?" - Transformer architecture