On February 28, OpenAI CEO Sam Altman said that "GPU resource constraints" had forced the company to roll out its updated GPT-4.5 model in phases.

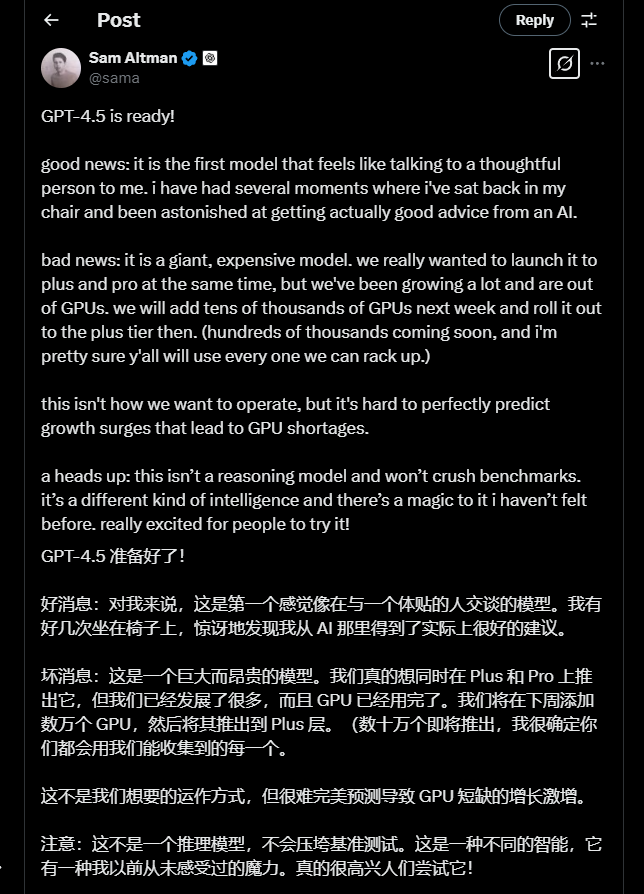

In a post on X, Altman described GPT-4.5 as a "large" and "expensive" model that requires tens of thousands of additional GPUs. GPT-4.5 will roll out to ChatGPT Pro subscribers first, with ChatGPT Plus users following next week.

The high cost of GPT-4.5 stems partly from its enormous scale. OpenAI charges $75 per million input tokens (about RMB 546 at current exchange rates) and $150 per million output tokens. That is 30 times the input price and 15 times the output price of its flagship model, GPT-4o.
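The multiples quoted above can be checked with simple arithmetic. The sketch below derives the GPT-4o prices implied by those ratios ($75 / 30 = $2.50 and $150 / 15 = $10 per million tokens) and compares the cost of a single request on each model. The token counts and the `request_cost` helper are hypothetical illustrations, not OpenAI's billing logic.

```python
# Prices quoted in the article, in US dollars per one million tokens.
GPT45_INPUT_PER_M = 75.0
GPT45_OUTPUT_PER_M = 150.0

# Ratios stated in the article: GPT-4.5 input costs 30x and output costs 15x GPT-4o.
INPUT_RATIO = 30
OUTPUT_RATIO = 15

# GPT-4o prices implied by those ratios (derived here, not quoted directly).
GPT4O_INPUT_PER_M = GPT45_INPUT_PER_M / INPUT_RATIO     # 2.5
GPT4O_OUTPUT_PER_M = GPT45_OUTPUT_PER_M / OUTPUT_RATIO  # 10.0


def request_cost(input_tokens: int, output_tokens: int,
                 input_per_m: float, output_per_m: float) -> float:
    """Hypothetical helper: dollar cost of one request under per-million-token pricing."""
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 prompt tokens and 2,000 generated tokens.
    tokens_in, tokens_out = 10_000, 2_000
    cost_45 = request_cost(tokens_in, tokens_out, GPT45_INPUT_PER_M, GPT45_OUTPUT_PER_M)
    cost_4o = request_cost(tokens_in, tokens_out, GPT4O_INPUT_PER_M, GPT4O_OUTPUT_PER_M)
    print(f"GPT-4.5: ${cost_45:.4f}")
    print(f"GPT-4o:  ${cost_4o:.4f}")
    print(f"ratio:   {cost_45 / cost_4o:.1f}x")
```

Because the input and output multiples differ, the overall price gap for a real workload lands somewhere between 15x and 30x depending on the mix of prompt and generated tokens; for the hypothetical 10,000-in, 2,000-out request above it works out to roughly 23x.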

"We've been growing rapidly, and now we're out of GPUs," Altman wrote. "We will add tens of thousands of GPUs next week and roll out ... to Plus users at that time. This isn't how we'd like to operate, but it's hard to perfectly predict the growth surges that lead to GPU shortages."

Altman has previously said that a shortage of computing power is delaying the company's product releases. OpenAI plans to address the problem over the next few years by developing its own AI chips and building out a vast network of data centers.