Many of my friends have recently asked: what is a digital human, and how do you make one?

Put simply, it means creating a clone of yourself: type in some text and get a video of yourself speaking it.

Today I'm going to walk you through the digital human tools step by step, so you can easily make your own digital human without having to appear on camera.

Building a digital human takes only two steps, and I'll break down the logic behind each one.

The first step is to get the digital human moving; the second is to get it lip-syncing.

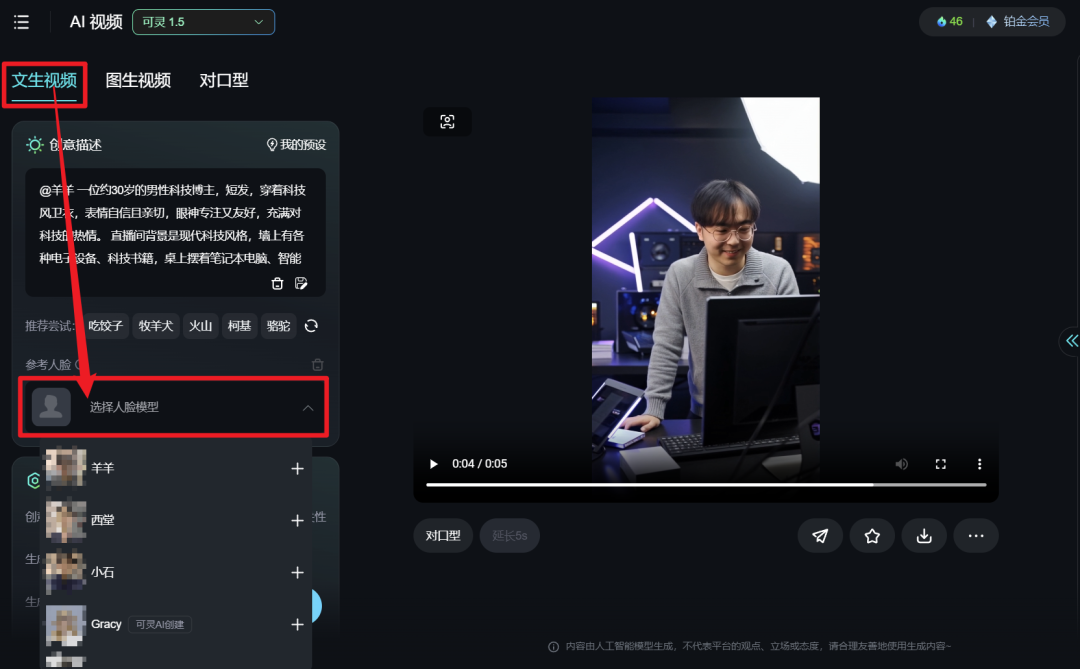

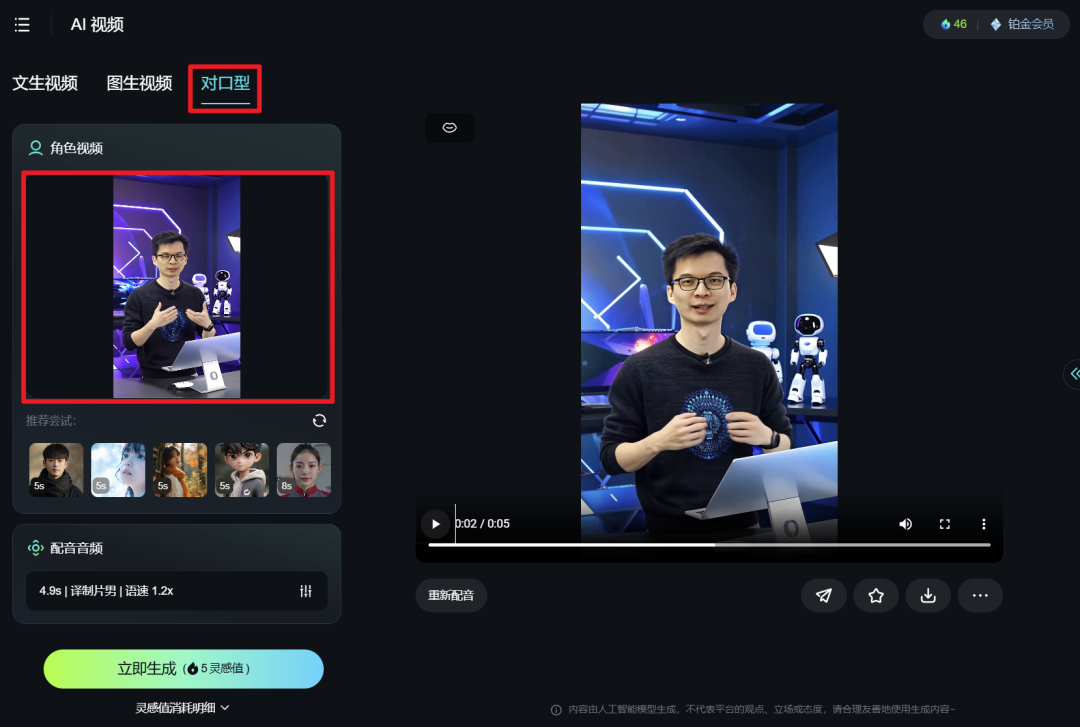

For the first step, getting the digital human moving, we can use Kling AI, because the motion it generates is both stable and realistic.

There are three ways to do this:

Method 1

Use the text-to-video feature of Kling AI.

Kling AI: https://www.1ai.net/12558.html

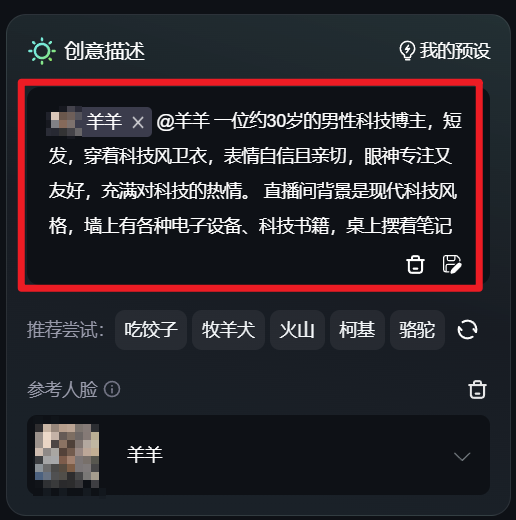

After opening this feature, we can choose a face reference. (This option is only available to Platinum members and above.)

For example, if I want to use my own face, I just select "Sheep", but the face has to be recorded in advance.

In the creative description area at the upper left, reference the "Sheep" face and describe the scene in detail.

For example: what my eyes look like as the blogger, what the background looks like, what the action is, and what the overall style of the image is.

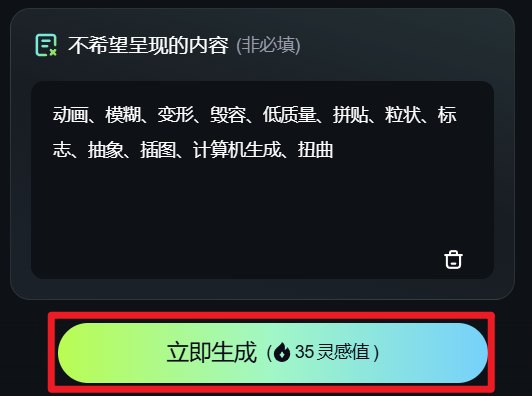

Once the description is complete, fill in what you do not want to appear, such as deformities, distortions, and disfigurements.

Then click "Generate Now", and you'll get a video with my face as the main character and stable movements. We can use this video for lip-syncing later.
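To keep descriptions consistent from one generation to the next, it can help to assemble the text from the same parts every time. The helper below is only a hypothetical sketch: `build_prompt` and its parameter names are made up for illustration and are not a Kling AI API. Its output is just text to paste into the creative description field and the undesired-content field.

```python
def build_prompt(
    face: str,
    eyes: str,
    background: str,
    action: str,
    style: str,
    avoid: tuple = ("deformities", "distortions", "disfigurements"),
) -> tuple:
    """Return (description, negative) strings for manual pasting.

    All field names here are illustrative assumptions, not tool parameters.
    """
    parts = [
        f"Use the face of {face}",
        f"Eyes: {eyes}",
        f"Background: {background}",
        f"Action: {action}",
        f"Style: {style}",
    ]
    description = ". ".join(parts) + "."
    negative = ", ".join(avoid)
    return description, negative


if __name__ == "__main__":
    description, negative = build_prompt(
        face="Sheep",
        eyes="looking at the camera",
        background="a tidy home office",
        action="slow nods while talking",
        style="realistic vlog footage",
    )
    print(description)
    print(negative)
```

Keeping the four parts separate makes it easy to change only the action or the background between attempts while leaving the rest untouched.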

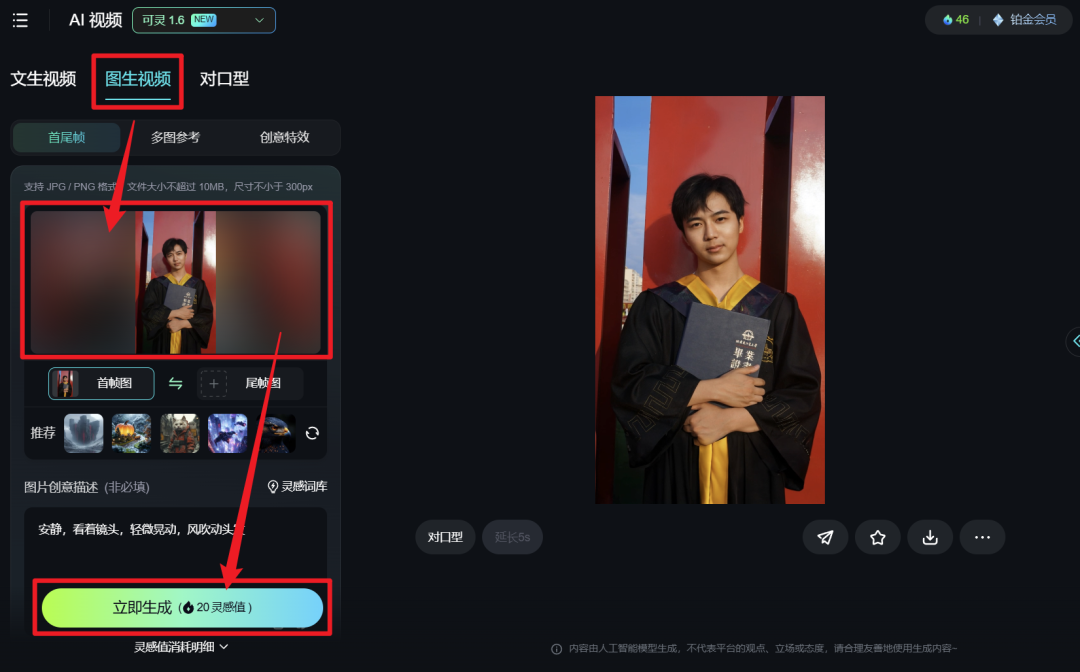

Method 2

Use image-to-video.

For example, take a generated image (here I'm using a screenshot), upload it in this location, and then enter the action we expect the image to show in the creative description.

What are the benefits of the image-to-video approach?

One is that the images look good and the background can be set however you like, so the result is very controllable. The other is that you can control the movements more finely.

Method 3

Shoot it yourself.

Take a photo of yourself and upload it to Kling AI's image-to-video feature.

Next, enter a description in the creative description field, such as how the character should move and what the eyes should be doing.

However, it is best to keep the movements slow and the character's motion modest. After all, a person talking to the camera does not normally move much, and large movements are prone to glitches such as deformed fingers.

After uploading the photo and completing the description, click "Generate Now" to get the video we want.

That's the first step: getting the digital human moving.

Moving on to step two: lip-syncing.

There are two ways to lip-sync.

Method 1

Use the lip-sync feature of Kling AI directly.

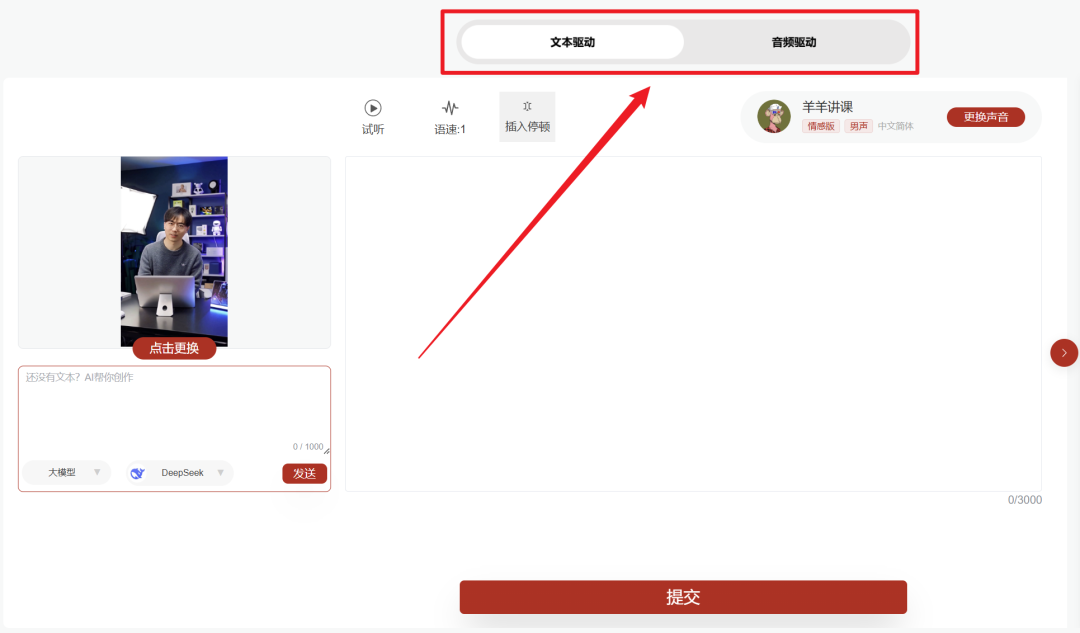

After clicking "lip-sync", you can upload a video on the left and choose between text-driven and audio-driven modes.

If you choose text-driven, you can pick a voice and enter the text you want spoken to generate a video of the digital human delivering that passage.

The downside is that, despite the wide selection of voices, you can't use your own, so it is hard to sound exactly like yourself.

But there is an upside too: we can use someone else's voice, which might be more pleasing to the ear.

Lip-sync also has an audio-driven mode, where we upload a piece of our own audio. Kling AI supports common audio formats such as MP3.

Upload the audio and you can generate the video directly.

However, Kling AI only supports generating 5-second clips at a time.

We can try to squeeze the audio under 5 seconds by speeding it up, but this is not a long-term solution. The feature is better suited to short talking-head videos, short digital human videos, or stitching multiple clips together.
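The 5-second limit comes down to simple arithmetic. The sketch below is a minimal illustration and not part of Kling AI: the names `speed_factor` and `split_points` are assumptions chosen for this example. It shows how much a recording would need to be sped up to fit a single clip, and where to cut it if you would rather combine several clips.

```python
import math

CLIP_LIMIT_S = 5.0  # per-generation limit mentioned above


def speed_factor(audio_s: float, limit_s: float = CLIP_LIMIT_S) -> float:
    """Playback speed needed to fit audio_s seconds into limit_s seconds.

    Returns 1.0 when the audio already fits.
    """
    if audio_s <= 0:
        raise ValueError("audio length must be positive")
    return max(1.0, audio_s / limit_s)


def split_points(audio_s: float, limit_s: float = CLIP_LIMIT_S) -> list:
    """Cut the audio into equal segments, each no longer than limit_s.

    Returns a list of (start, end) times in seconds.
    """
    if audio_s <= 0:
        raise ValueError("audio length must be positive")
    count = math.ceil(audio_s / limit_s)
    step = audio_s / count
    return [(round(i * step, 3), round((i + 1) * step, 3)) for i in range(count)]


if __name__ == "__main__":
    # A 6-second recording needs roughly a 1.2x speed-up to fit one clip.
    print(speed_factor(6.0))
    # A 12-second recording is cut into three 4-second segments.
    print(split_points(12.0))
```

A small speed-up is usually hard to notice, while a large one makes speech sound rushed, so for longer recordings the segment list is the more practical route.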

Method 2

Use the Must Fire AI tool.

Must Fire AI: https://www.1ai.net/29524.html

Its advantage is that it can clone both your appearance and your voice, with better generation quality.

Here's how it works:

First, upload a video to clone your digital double, using the motion video generated earlier with Kling AI;

Next, clone your own voice under Voice Clone;

Finally, create the video in the Digital Split panel. For text-driven mode, type in what you want the digital human to say, select your cloned voice, and click submit to generate the digital human video.

If you use the audio-driven method, you can upload a recording of your own voice and generate a digital human video the same way.

Overall, the key is these two steps: first generate a motion video, then have that video lip-synced, and the digital human video we wanted is done.