Feb. 23 - This week.OpenAI An employee of Elon Musk's publicly accused Elon Musk's xA company, claiming that it released misleading benchmark results for its latest AI model, Grok 3. In response, xAI co-founder Igor Babushkin insists the company did nothing wrong.

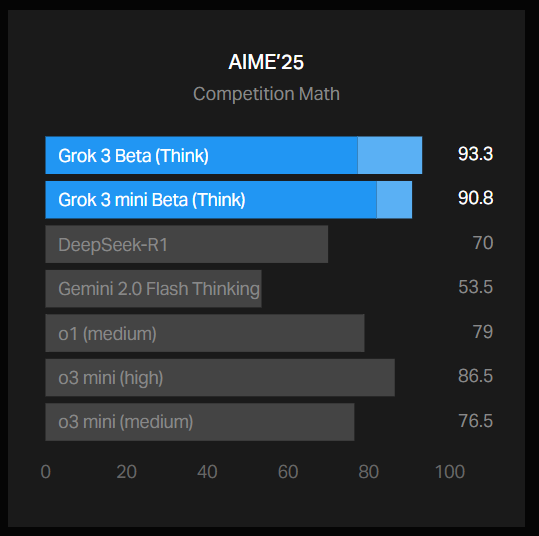

xAI has posted a chart on its blog showing Grok 3's performance on AIME 2025, a set of high-stakes math problems from a recent invitational math exam. While some experts have questioned the validity of AIME as an AI benchmark, AIME 2025 and its earlier versions are still widely used to assess the mathematical capabilities of models.

1AI notes that xAI's charts show that two versions of Grok 3 -- Grok 3 Reasoning Beta and Grok 3 mini Reasoning -- outperform on AIME 2025 the OpenAI's currently strongest available model, o3-mini-high. however, OpenAI staff were quick to point out on the x platform that thexAI's chart does not include the AIME 2025 score for o3-mini-high at "cons@64"..

The term "cons@64" refers to "consensus@64", which allows a model to try each question 64 times in a benchmark test and take the most frequent answer as the final answer. As you can imagine, this approach tends to significantly improve a model's benchmark score, and omitting this data from the charts could lead one to believe that one model outperformed another, which may not be the case.

In the "@1" condition of AIME 2025 (i.e., the score of the model's first attempt), Grok 3 Reasoning Beta and Grok 3 mini Reasoning scored lower than o3-mini-high. Grok 3 Reasoning Beta also scores slightly lower than OpenAI's o1 model in the "medium computation" setting.However, xAI is still touting Grok 3 as "the world's smartest AI".

Babushkin argued on Platform X that theOpenAI has published similarly misleading benchmarking charts in the past. Although these charts were used to compare the performance of their own models.

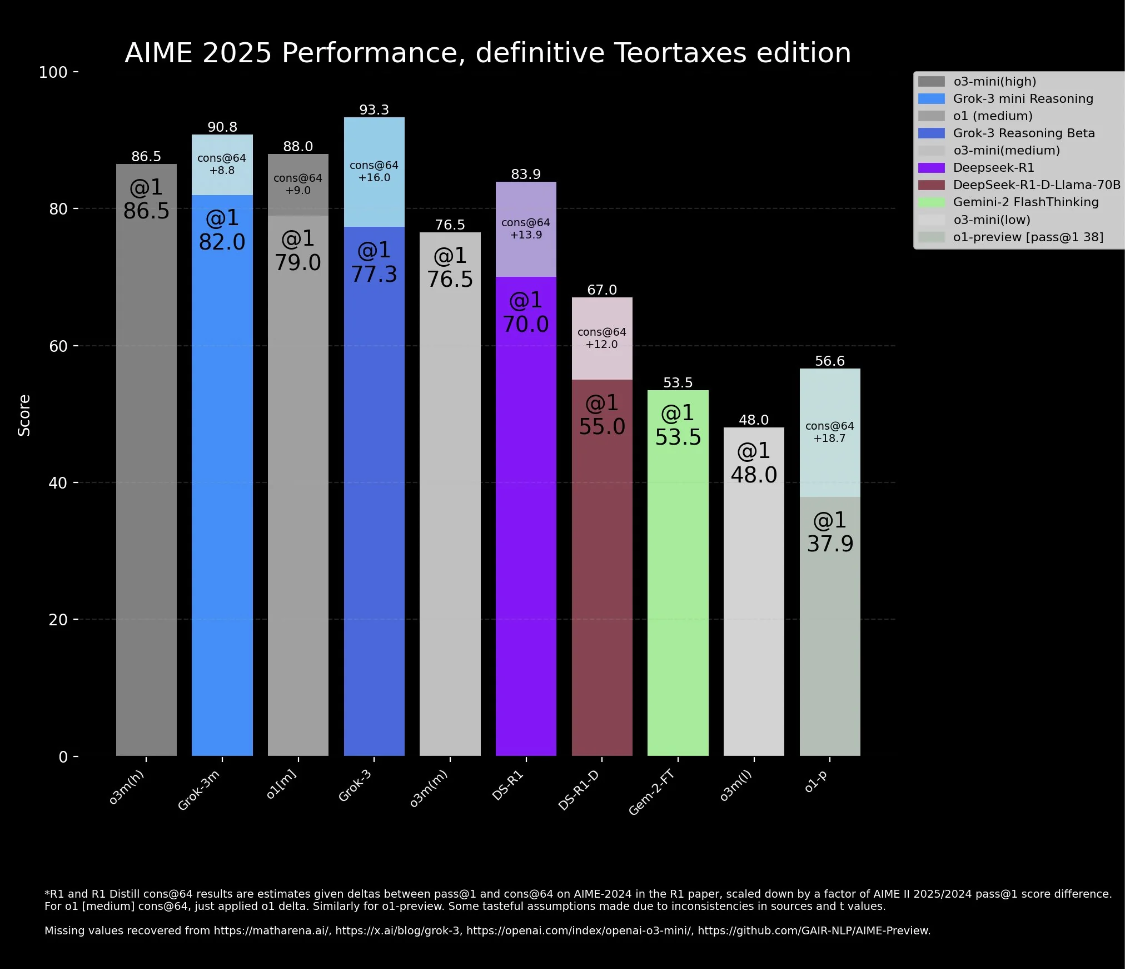

In the midst of this controversy, a neutral third party has redrawn a more "accurate" chart:

But as AI researcher Nathan Lambert points out in a post, perhaps the most important metric of all remains unknown: the computational (and monetary) cost of each model to achieve the best possible score. This just goes to show that most AI benchmarks still fall woefully short of communicating the limitations and strengths of their models.