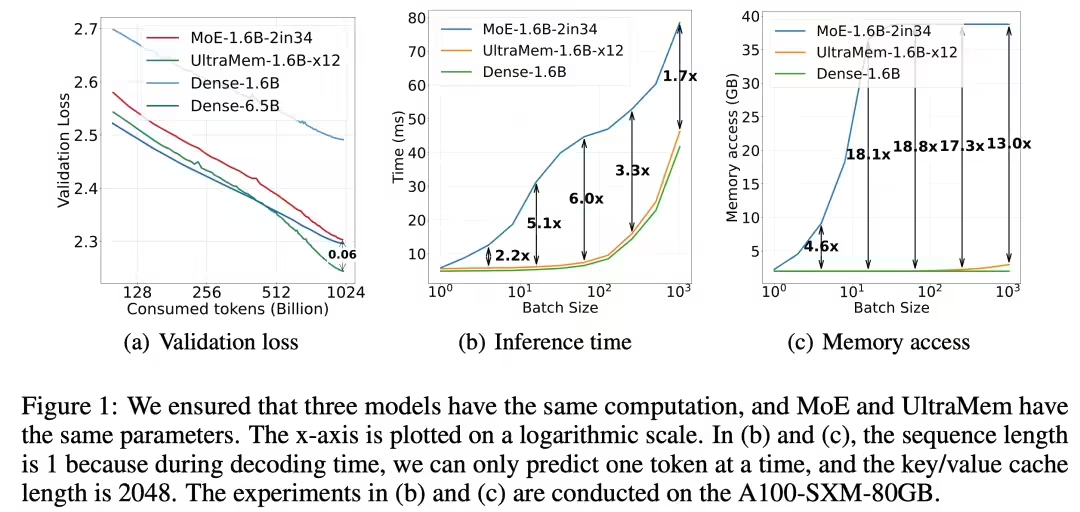

February 12 news: ByteDance's Doubao large model team today announced UltraMem, a new sparse model architecture. The architecture effectively addresses the high memory-access cost of MoE inference: inference is 2 to 6 times faster than with an MoE architecture, and inference cost is reduced by up to 83%. The study also reveals the scaling law of the new architecture, demonstrating that it not only scales well but also outperforms MoE in model performance.

Experimental results show that an UltraMem model trained with 20 million values can achieve industry-leading inference speed and model performance with the same computing resources, opening a new path toward building models with billions of values or experts.

UltraMem is described as a sparse model architecture that, like MoE, decouples computation from parameters, and it solves the memory-access problem of inference while preserving model quality. The experimental results show that, with the same number of parameters and the same activation conditions, UltraMem outperforms MoE in model quality and speeds up inference by 2 to 6 times. In addition, at common batch sizes, UltraMem's memory-access cost is almost equal to that of a Dense model with the same amount of computation.

Under the Transformer architecture, a model's performance is logarithmically related to its number of parameters and its computational complexity. As LLMs continue to grow, inference cost rises sharply and inference speed drops.

Although the MoE architecture successfully decouples computation from parameters, even a small batch size activates all experts at inference time, causing a sharp rise in memory accesses and, consequently, a significant increase in inference latency.
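A rough way to see why small batches can still touch every expert is to estimate how many distinct experts a batch activates. The sketch below is not from the study: it assumes each token independently picks `top_k` distinct experts uniformly at random, which real routers do not do exactly, and the function name `expected_active_experts` is hypothetical.

```python
def expected_active_experts(num_experts: int, top_k: int, num_tokens: int) -> float:
    """Expected number of distinct experts touched by a batch of tokens.

    Assumption (illustrative only): every token selects `top_k` distinct
    experts uniformly at random, independently of the other tokens.
    """
    # Probability that one given expert is skipped by a single token.
    p_skipped_by_one_token = 1.0 - top_k / num_experts
    # Probability that the same expert is skipped by every token in the batch.
    p_untouched = p_skipped_by_one_token ** num_tokens
    return num_experts * (1.0 - p_untouched)


if __name__ == "__main__":
    # With 8 experts and top-2 routing, a few dozen tokens already reach
    # nearly all experts, so nearly all expert weights must be read.
    for batch in (1, 4, 16, 64):
        print(batch, round(expected_active_experts(8, 2, batch), 3))
```

Under this simplified model, the count of activated experts approaches the total quickly as the batch grows, while the computation per token stays fixed at `top_k` experts. That gap between weights read and computation performed is the memory-access pressure the article attributes to MoE inference.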

Note: "MoE" refers to the Mixture of Experts architecture, an architectural design intended to improve model performance and efficiency. In an MoE architecture, the model is composed of multiple sub-models (experts), each responsible for processing a portion of the input data. During training and inference, some experts are selectively activated to perform the computation, depending on the characteristics of the input data, which decouples computation from parameters and improves the flexibility and efficiency of the model.
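The selective activation described in the note can be sketched in a few lines of plain Python. This is a minimal illustration of generic top-k routing, not the design of any particular MoE model: the names `top_k_route` and `moe_forward`, the linear router, and the choice to softmax-normalize weights over only the selected experts are all assumptions made for the example.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top_k_route(scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(
    x: Vector,
    router_weights: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    k: int,
) -> Tuple[Vector, List[int]]:
    """Run one input through only its top-k experts and mix their outputs."""
    # One router score per expert: dot product of the router row with the input.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    routes = top_k_route(scores, k)
    out = [0.0] * len(x)
    for idx, weight in routes:
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, [idx for idx, _ in routes]


if __name__ == "__main__":
    # Four toy experts that just scale the input by different factors.
    toy_experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
    toy_router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    output, active = moe_forward([2.0, 1.0], toy_router, toy_experts, k=2)
    print(active, output)
```

The model holds the parameters of all four experts, but each input pays the compute cost of only two of them. This is the sense in which MoE decouples computation from parameters.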