DeepSeek has taken the race to build large AI models to another level.

Some people are now even preparing to run its 671B-parameter model locally.

But running such a large model locally is no small feat: you'll need major hardware upgrades just to run inference.

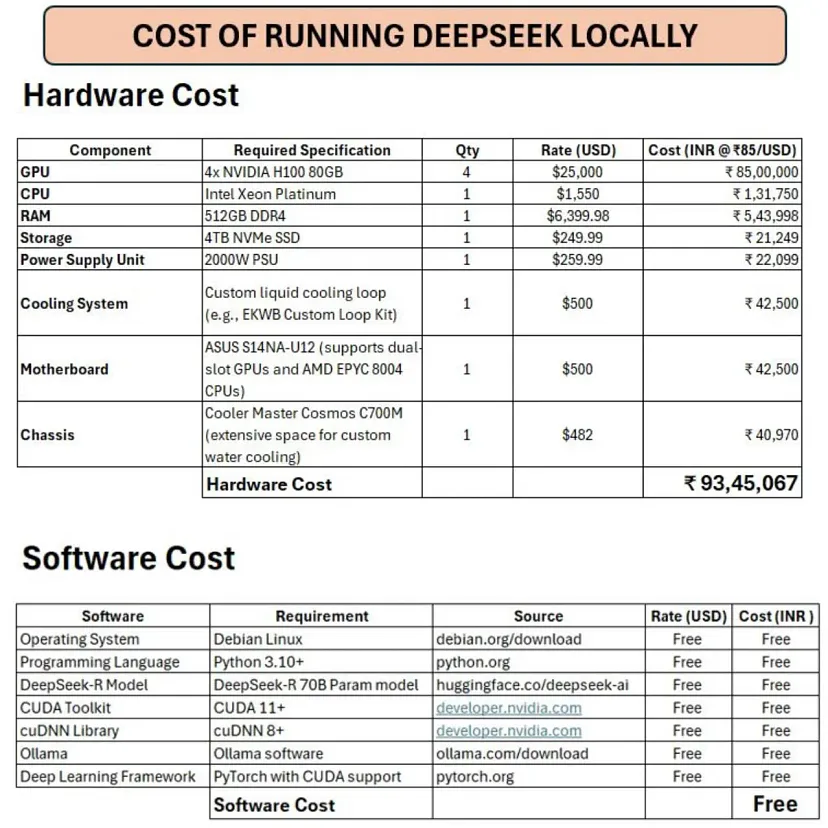

Here's a rough breakdown of what it would cost to run DeepSeek-R1 on your PC.

Hardware Costs

Most of the cost is spent on hardware. We will discuss GPUs, CPUs, memory (RAM), SSD storage, cooling systems, and more.

The configuration you need is as follows. Prices are in USD, with CNY equivalents at today's exchange rate of 1 USD = 7.29 CNY.

GPU

- 4 × NVIDIA H100 80GB GPUs ($25,000 each)

- Total cost: $100,000 (approximately ¥729,000)

- Reason: These GPUs are state-of-the-art accelerators optimized for AI workloads, enabling faster training and inference for large models like DeepSeek-R1.

- NVIDIA H100: A data center GPU based on NVIDIA's Hopper architecture, with fourth-generation Tensor Cores and a Transformer Engine. NVIDIA rates it at up to 9x faster AI training and up to 30x faster inference than the previous-generation A100.

CPU

- Intel Xeon Platinum

- Total cost: $1,550 (approximately ¥11,299.50)

- Reason: A high-end CPU keeps the system responsive and stable during resource-intensive operations.

- The Intel Xeon Platinum suits DeepSeek-R1 inference because its AI acceleration features, such as Intel AMX and AVX-512, significantly speed up deep learning tasks that run on the CPU.

- It offers up to 42% higher AI inference performance than its predecessor, which makes it well suited to heavy workloads. Its optimized memory and interconnect technologies also help it process large datasets and complex models efficiently.

Memory (RAM)

- 512GB DDR4 ($6,399.98)

- Total cost: $6,399.98 (approximately ¥46,655.85)

- Reason: Large memory capacity is critical for holding massive datasets and model parameters without performance bottlenecks.

Storage

- 4TB NVMe SSD ($249.99)

- Total cost: $249.99 (approximately ¥1,822.43)

- Reason: Fast storage speeds up loading model weights and reading data.

- SSDs (solid-state drives) store data in flash memory. Compared with traditional mechanical hard disk drives (HDDs), they offer faster reads and writes, higher durability, and better energy efficiency.

- A 4TB NVMe SSD is a high-capacity (4 TB) SSD that uses the NVMe (Non-Volatile Memory Express) protocol over the PCIe interface. This gives much higher transfer rates than older SSDs based on the SATA interface.

- NVMe SSDs are particularly well suited for tasks where speed and high-capacity storage are critical, such as gaming, video editing, or server applications.
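To see why interface speed matters here, estimate the minimum time needed to read the model weights from disk: file size divided by sustained read throughput. This is a rough sketch under stated assumptions. The 140 GB file size assumes a 70B-parameter model stored at 2 bytes per parameter. The two throughput figures are assumed ballpark values for a SATA SSD and a fast PCIe NVMe SSD, not measurements, so check your drive's datasheet.

```python
def load_time_seconds(size_gb: float, read_gb_per_s: float) -> float:
    """Lower-bound time to read a file sequentially at a sustained throughput."""
    if read_gb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return size_gb / read_gb_per_s


# Assumed: 70B parameters x 2 bytes (16-bit weights) = 140 GB on disk
MODEL_SIZE_GB = 140.0

# Hypothetical sustained sequential read speeds in GB/s (ballpark, not measured)
ASSUMED_THROUGHPUTS = [
    ("SATA SSD (assumed)", 0.55),
    ("NVMe SSD (assumed)", 7.0),
]

for label, throughput in ASSUMED_THROUGHPUTS:
    seconds = load_time_seconds(MODEL_SIZE_GB, throughput)
    print(f"{label:<20} ~{seconds:6.0f} s to read {MODEL_SIZE_GB:.0f} GB")
```

Under these assumptions the NVMe drive reads the weights in about 20 seconds, compared with roughly four minutes over SATA. Real load times also depend on the file format, the filesystem, and how the framework deserializes the weights.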

Power Supply Unit (PSU)

- 2000W PSU ($259.99)

- Total cost: $259.99 (approximately ¥1,895.33)

- Reason: A high-wattage power supply can reliably deliver stable power to multiple GPUs.

Cooling System

- Custom liquid cooling loop ($500)

- Total cost: $500 (approximately ¥3,645.00)

- Reason: GPUs generate a lot of heat under load, and liquid cooling helps prevent overheating.

Motherboard

- ASUS S14NA-U12 ($500)

- Total cost: $500 (approximately ¥3,645.00)

- Reason: Supports multiple GPUs and high-end server CPUs.

Chassis

- Cooler Master Cosmos C700M ($482)

- Total cost: $482 (approximately ¥3,513.78)

- Reason: The spacious chassis can accommodate a custom cooling system and multiple GPUs.

- Total hardware cost: approximately $109,942 (approximately ¥801,476.89)
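The hardware total and its CNY equivalent are simple arithmetic over the prices above. This Python sketch recomputes them with the standard `decimal` module to avoid floating-point rounding, and it also prints each component's share of the total. The component labels are shortened for display. Rounding half-up to the nearest 0.01 is an assumption about how the per-item conversions were rounded.

```python
from decimal import Decimal, ROUND_HALF_UP

# Exchange rate stated in the article: 1 USD = 7.29 CNY
USD_TO_CNY = Decimal("7.29")

# Component prices in USD, taken from the hardware list above
COMPONENTS_USD = {
    "GPU (4 x H100 80GB)": 4 * Decimal("25000"),
    "CPU (Xeon Platinum)": Decimal("1550"),
    "Memory (512GB DDR4)": Decimal("6399.98"),
    "Storage (4TB NVMe SSD)": Decimal("249.99"),
    "PSU (2000W)": Decimal("259.99"),
    "Cooling (liquid loop)": Decimal("500"),
    "Motherboard": Decimal("500"),
    "Chassis": Decimal("482"),
}

CENT = Decimal("0.01")


def to_cny(usd: Decimal) -> Decimal:
    """Convert USD to CNY, rounded half-up to the nearest 0.01."""
    return (usd * USD_TO_CNY).quantize(CENT, rounding=ROUND_HALF_UP)


def share_percent(usd: Decimal, total: Decimal) -> Decimal:
    """Share of the total as a percentage, rounded to one decimal place."""
    return (100 * usd / total).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


total_usd = sum(COMPONENTS_USD.values(), Decimal("0"))
total_cny = to_cny(total_usd)

for name, usd in COMPONENTS_USD.items():
    print(f"{name:<24} USD {usd:>10,.2f}  CNY {to_cny(usd):>11,.2f}  "
          f"{share_percent(usd, total_usd):>5}%")
print(f"{'Total':<24} USD {total_usd:>10,.2f}  CNY {total_cny:>11,.2f}")
```

With the listed prices, the total comes to $109,941.96 (about ¥801,476.89). The GPUs alone account for about 91.0% of it, and the GPUs, memory, and cooling together account for about 97.2%.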

Software Costs

The software required to run DeepSeek-R1 is all free, but you will need to have the following components on hand:

- Operating System: Debian Linux (free)

- Programming language: Python 3.10+ (free)

- DeepSeek-R1 model: 70B-parameter version (free)

- CUDA Toolkit & cuDNN: NVIDIA's GPU computing toolkit and deep learning library (free)

- Deep Learning Framework: PyTorch with CUDA support (free)

- Software total: $0
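As a rough sanity check on the GPU choice, this sketch estimates how much GPU memory the model weights alone need at a few common precisions. It compares that figure with the 320 GB offered by four 80 GB H100s. The bytes-per-parameter values are the standard sizes of those number formats, and 1 GB is taken as 10^9 bytes. The estimate deliberately ignores KV cache, activations, and framework overhead, so real requirements are higher.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"fp16/bf16": 2, "fp8/int8": 1, "int4": 0.5}

GPU_COUNT = 4
GPU_MEMORY_GB = 80  # NVIDIA H100 80GB, as in the hardware list


def weights_gb(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


total_vram_gb = GPU_COUNT * GPU_MEMORY_GB  # 320 GB across the four cards

for label, params in [("70B", 70e9), ("671B", 671e9)]:
    for precision in BYTES_PER_PARAM:
        need = weights_gb(params, precision)
        verdict = "fits" if need <= total_vram_gb else "does not fit"
        print(f"{label:>5} @ {precision:<9} {need:8.1f} GB -> {verdict} in {total_vram_gb} GB")
```

Under these assumptions, a 70B model at 16-bit precision needs about 140 GB for its weights and fits. A 671B model does not fit even at 4-bit precision, which needs about 335.5 GB.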

Key Takeaways

- Hardware costs dominate: The GPUs alone account for about 91% of the total cost, and the GPUs, memory, and cooling system together account for about 97%.

- Expertise is required: Building such a system requires knowledge of high-performance computing.

- Alternatives: For short-term projects, cloud services (e.g., AWS, Google Cloud) may be more economical, though they incur ongoing costs.
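Whether the cloud is cheaper depends mostly on how many hours you would actually use the machine. A simple break-even estimate divides the up-front hardware cost by a cloud hourly rate. The rate below is a hypothetical placeholder, not a quote from AWS or Google Cloud, so substitute the current price for a comparable multi-GPU instance. The sketch also ignores electricity, maintenance, depreciation, and resale value.

```python
def break_even_hours(hardware_cost_usd: float, cloud_rate_usd_per_hour: float) -> float:
    """Hours of cloud rental whose cost equals the up-front hardware cost.

    Ignores electricity, maintenance, depreciation, and resale value.
    """
    if cloud_rate_usd_per_hour <= 0:
        raise ValueError("cloud rate must be positive")
    return hardware_cost_usd / cloud_rate_usd_per_hour


HARDWARE_COST_USD = 109_941.96  # total of the component prices listed above
HYPOTHETICAL_RATE = 10.0        # placeholder USD/hour for a 4-GPU instance; not a real quote

hours = break_even_hours(HARDWARE_COST_USD, HYPOTHETICAL_RATE)
print(f"Break-even after ~{hours:,.0f} hours (~{hours / 24:,.0f} days of continuous use)")
```

If your expected usage is well below the break-even point, renting is likely cheaper. If you would run the model around the clock for years, owning the hardware starts to pay off.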

Is it worth it?

For well-funded researchers, corporations, or enthusiasts with specific needs (e.g., privacy protection, offline use), building a system locally provides unmatched control and speed; for other users, using a cloud platform or choosing a smaller model may be more practical.

But since the total is close to $110,000 (about ¥801,900), such a commitment is simply unaffordable for most individuals.

However, you can try the smaller, distilled versions of the model, which are far more affordable to run.

So, are you going to run DeepSeek-R1 locally? Think again!