Fei-Fei Li's research team has trained an AI reasoning model called s1 for less than $50 in cloud computing costs. In tests of mathematical and coding ability, s1 performed similarly to cutting-edge reasoning models such as OpenAI's o1 and DeepSeek's R1.

However, reports soon emerged that the s1 model was "not trained from scratch" and that its base model is Alibaba's Tongyi Qianwen (Qwen) model. Sina Tech asked Alibaba Cloud for confirmation, and Alibaba Cloud confirmed the news.

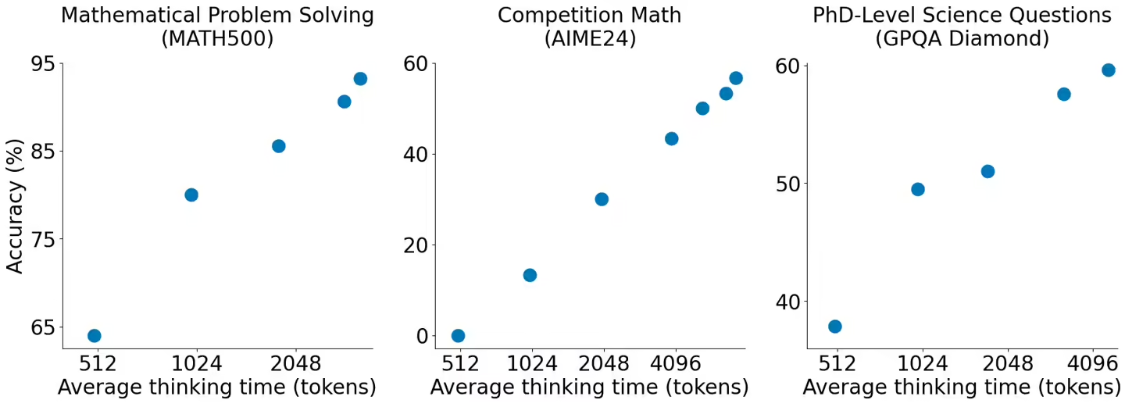

Alibaba Cloud responded: "Using Alibaba's Tongyi Qianwen Qwen2.5-32B-Instruct open-source model as a base, they trained the new model s1-32B in 26 minutes of supervised fine-tuning on 16 H100 GPUs, achieving mathematical and coding capabilities comparable to cutting-edge reasoning models such as OpenAI's o1 and DeepSeek's R1, and even outperforming o1-preview by 27% on competition math problems."
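The figures in that statement can be cross-checked against the reported budget with a little arithmetic. The sketch below assumes the "less than $50" covers only this one fine-tuning run, and it does not assume any particular cloud price; it only derives the hourly rate at which the budget would break even (about 6.9 GPU-hours, or roughly $7.21 per GPU-hour).

```python
# Back-of-the-envelope check of the reported training budget.
NUM_GPUS = 16        # H100 GPUs, from the Alibaba Cloud statement
TRAIN_MINUTES = 26   # supervised fine-tuning time, from the same statement
BUDGET_USD = 50.0    # "less than $50" in cloud computing costs

# Total compute consumed, in GPU-hours.
gpu_hours = NUM_GPUS * TRAIN_MINUTES / 60

# Highest rental price per GPU-hour that still fits the budget
# (assumption: the budget covers only this single run).
max_hourly_rate = BUDGET_USD / gpu_hours

print(f"GPU-hours used: {gpu_hours:.2f}")
print(f"Break-even price per GPU-hour: ${max_hourly_rate:.2f}")
```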

As previously reported by 1AI, the s1 team revealed that they created the AI model through "distillation", a technique that aims to extract the "reasoning" ability of one AI model by training another model to learn from its answers.

The s1 paper shows that reasoning models can be distilled using relatively small datasets through a method called supervised fine-tuning (SFT). In SFT, an AI model is explicitly instructed to mimic certain behaviors in the dataset. SFT is more cost-effective than the large-scale reinforcement learning approach that DeepSeek uses to train its R1 model.
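To make "mimicking behaviors in the dataset" concrete, here is a minimal sketch of the token-level cross-entropy objective commonly used for SFT. It is not the s1 team's training code: the function names, the toy three-token vocabulary, and the choice to mask out prompt tokens are all assumptions made for illustration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sft_loss(logits_per_position, target_ids, loss_mask):
    """Mean negative log-likelihood of the reference tokens.

    logits_per_position: one list of vocabulary scores per token position
    target_ids: the token the dataset says should come next at each position
    loss_mask: 1 to train on a position, 0 to skip it (e.g. prompt tokens)
    """
    total, count = 0.0, 0
    for logits, target, keep in zip(logits_per_position, target_ids, loss_mask):
        if not keep:
            continue
        probs = softmax(logits)
        total += -math.log(probs[target])
        count += 1
    return total / count if count else 0.0

# Toy example: vocabulary of 3 tokens, 3 positions, first position masked.
logits = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 0.0, 2.0]]
targets = [1, 0, 2]
mask = [0, 1, 1]
loss = sft_loss(logits, targets, mask)
print(f"SFT loss on the unmasked positions: {loss:.4f}")
```

Lowering this loss pushes the model to assign higher probability to exactly the tokens in the reference answers, which is why a small but carefully chosen dataset can steer its behavior.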

s1 is based on a small, off-the-shelf, free AI model provided by Qwen, Alibaba's Chinese AI lab. To train s1, the researchers created a dataset of just 1,000 carefully curated questions, along with the answers to these questions and the "thinking" process behind each answer, as given by Google's Gemini 2.0 Flash Thinking Experimental model.
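One way to picture such a dataset is as a list of records, each holding a question, the teacher model's reasoning, and its final answer, flattened into a single training string. The sketch below is illustrative only: the class name, field names, text template, and the sample record are hypothetical and are not taken from the s1 dataset.

```python
from dataclasses import dataclass

@dataclass
class DistillationExample:
    question: str   # the curated question
    thinking: str   # the teacher model's reasoning trace
    answer: str     # the teacher model's final answer

def to_training_text(example: DistillationExample) -> str:
    """Flatten one record into a single string for supervised fine-tuning.

    The "Question/Thinking/Answer" template is a hypothetical format.
    """
    return (
        f"Question: {example.question}\n"
        f"Thinking: {example.thinking}\n"
        f"Answer: {example.answer}"
    )

# A made-up record, for illustration only.
sample = DistillationExample(
    question="What is 12 * 12?",
    thinking="12 * 12 = 12 * 10 + 12 * 2 = 120 + 24 = 144.",
    answer="144",
)
print(to_training_text(sample))
```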