February 6, 2025 - A study released on Friday shows that artificial intelligence researchers at Stanford University and the University of Washington spent less than 50 U.S. dollars (note: about 364 yuan at the current exchange rate) on cloud computing to train an AI model with "reasoning" capabilities.

The model, named s1, performs at a level similar to leading reasoning models such as OpenAI's o1 and DeepSeek's R1 on tests of math and programming ability. The s1 model, along with the data and code used to train it, has been open-sourced on GitHub.

The s1 team said they created the model through "distillation," a technique that extracts the "reasoning" ability of one AI model by training another model on its answers. The researchers revealed that s1 was distilled from Google's reasoning model Gemini 2.0 Flash Thinking Experimental. Last month, researchers at the University of California, Berkeley, used the same distillation method to create an AI reasoning model at a cost of about $450.

The emergence of models like s1 also raises questions about the commoditization of AI models -- if someone can replicate a multi-million dollar model at relatively low cost, where is the "moat" for large tech companies?

Unsurprisingly, the big AI labs aren't happy about this, with OpenAI, for example, previously accusing DeepSeek of improperly accessing its API data for model distillation.

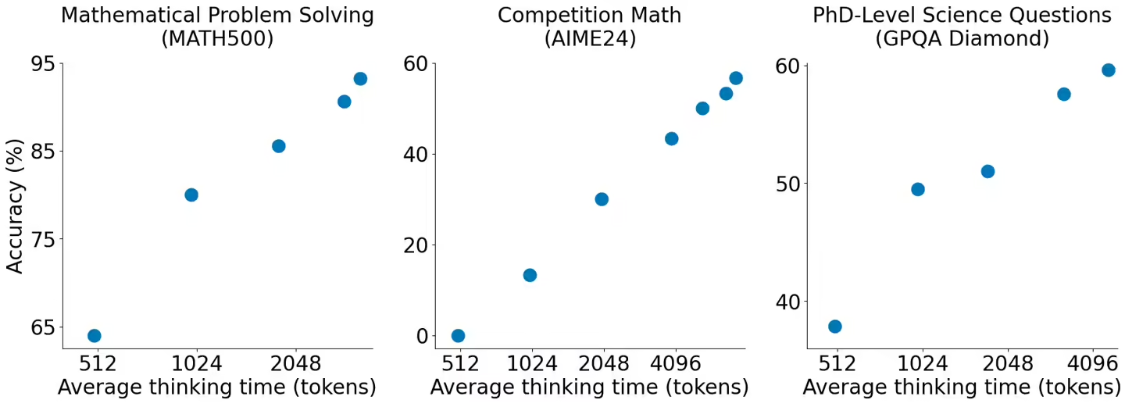

The s1 researchers set out to find the simplest way to achieve strong reasoning performance and "test-time scaling" (that is, letting an AI model think more before answering a question), both of which are among the breakthroughs in OpenAI's o1.

The s1 paper shows that a reasoning model can be distilled with a relatively small dataset using a method called supervised fine-tuning (SFT). In SFT, an AI model is explicitly instructed to mimic certain behaviors in the dataset. SFT is more cost-effective than the large-scale reinforcement learning approach that DeepSeek used to train its R1 model.
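To make "mimic certain behaviors in the dataset" concrete, here is a minimal sketch of the kind of objective SFT commonly minimizes: the average negative log-likelihood the model assigns to the reference tokens. The function name `sft_loss` and the probability values are illustrative assumptions, not taken from the s1 paper or its code.

```python
import math

def sft_loss(token_probs: list[float]) -> float:
    """Average negative log-likelihood of the reference tokens.

    token_probs[i] is the probability the model assigned to the i-th
    token of the reference answer (illustrative numbers only).
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

# A model that already imitates the reference well gets a low loss...
confident = sft_loss([0.9, 0.8, 0.95])
# ...while a model that rarely predicts the reference tokens gets a high one.
unsure = sft_loss([0.2, 0.1, 0.3])
```

Training lowers this loss, which pushes the model toward reproducing the reference outputs token by token.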

Google offers free access to the Gemini 2.0 Flash Thinking Experimental model through its Google AI Studio platform, with daily usage limits. However, its terms prohibit reverse engineering the model to develop services that compete with Google's own AI offerings.

s1 is based on a small, off-the-shelf, free AI model from Qwen, the AI lab of Chinese company Alibaba. To train s1, the researchers created a dataset of just 1,000 carefully curated questions, along with the answers to those questions and the "thinking" process behind each answer as given by Google's Gemini 2.0 Flash Thinking Experimental.
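As a rough illustration of what one entry in such a dataset could look like, the sketch below pairs a question with a thinking trace and an answer, then flattens them into a single training string. The class name, field names, and `<think>` tags are hypothetical and are not the actual s1 data format.

```python
from dataclasses import dataclass

@dataclass
class ReasoningExample:
    # Hypothetical field names; the real s1 dataset schema may differ.
    question: str
    thinking: str
    answer: str

def format_for_sft(ex: ReasoningExample) -> str:
    """Flatten one example into a single text the model is trained to reproduce."""
    return (
        f"Question: {ex.question}\n"
        f"<think>\n{ex.thinking}\n</think>\n"
        f"Answer: {ex.answer}"
    )

example = ReasoningExample(
    question="What is 12 * 13?",
    thinking="12 * 13 = 12 * 10 + 12 * 3 = 120 + 36 = 156.",
    answer="156",
)
training_text = format_for_sft(example)
```

Keeping the thinking trace in the training text is what lets the smaller model learn the reasoning steps as well as the final answer.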

According to the researchers, after training (which took less than 30 minutes on 16 Nvidia H100 GPUs), s1 performed well on certain AI benchmarks. Niklas Muennighoff, a Stanford researcher involved in the project, told TechCrunch that it currently costs about $20 to rent these computing resources.
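The figures above allow a quick back-of-the-envelope check. The sketch below treats "less than 30 minutes" as an upper bound of 0.5 hours and assumes the $20 quote covers that whole run; the implied hourly rate is therefore only an estimate, not a price stated in the article.

```python
NUM_GPUS = 16            # Nvidia H100 GPUs, from the article
MAX_HOURS = 0.5          # "less than 30 minutes", used as an upper bound
QUOTED_COST_USD = 20.0   # rental cost quoted to TechCrunch

# Upper bound on total compute used for the training run.
max_gpu_hours = NUM_GPUS * MAX_HOURS

# Implied rental price per GPU-hour if the run used the full 30 minutes.
implied_rate = QUOTED_COST_USD / max_gpu_hours
```

Under these assumptions the run used at most 8 GPU-hours, which works out to roughly $2.50 per GPU-hour; a shorter run at the same total cost would imply a higher hourly rate.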

The researchers used a clever trick to get s1 to check its work and extend its "thinking" time: they made it "wait". The paper shows that adding the word "wait" to s1's reasoning helped the model get slightly more accurate answers.
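The "wait" trick can be sketched as a small loop: whenever the model tries to finish thinking, drop its end-of-thinking marker, append "Wait," and let it continue. Everything here is a simplified assumption for illustration: the `</think>` marker, the `extend_thinking` helper, and the `toy_model` stand-in are hypothetical and do not reproduce the s1 code.

```python
from typing import Callable

END_OF_THINKING = "</think>"  # hypothetical end-of-thinking marker

def extend_thinking(
    generate: Callable[[str], str], prompt: str, extra_rounds: int = 1
) -> str:
    """Force extra reasoning by replacing the stop marker with 'Wait,'.

    `generate` takes the text so far and returns a continuation that
    ends with END_OF_THINKING when the model wants to stop thinking.
    """
    text = prompt
    for _ in range(extra_rounds):
        chunk = generate(text)
        if chunk.endswith(END_OF_THINKING):
            chunk = chunk[: -len(END_OF_THINKING)]  # suppress the stop
        text += chunk + " Wait,"
    # Final round: let the model finish on its own.
    return text + generate(text)

def toy_model(context: str) -> str:
    """Stand-in for a real model: corrects itself once it sees 'Wait,'."""
    if "Wait," in context:
        return " let me recheck: 2 + 2 = 4.</think>"
    return "2 + 2 = 5.</think>"

result = extend_thinking(toy_model, "Q: 2 + 2? ", extra_rounds=1)
```

With the toy model, the first attempt contains an error and the forced continuation gives it a chance to recheck, mirroring the behavior the paper describes.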