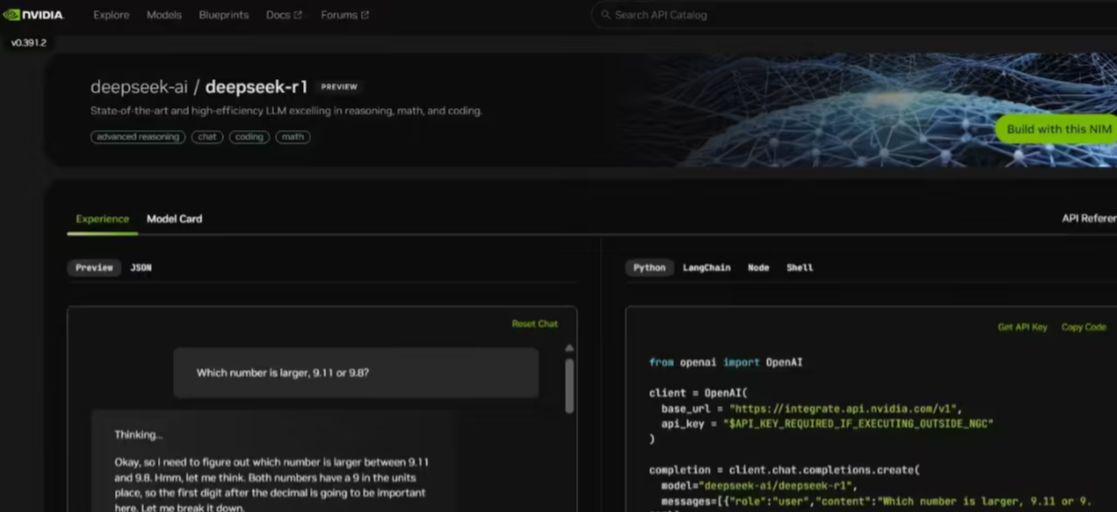

On January 31, NVIDIA announced that the DeepSeek-R1 model is now available as an NVIDIA NIM microservice preview at build.nvidia.com. The DeepSeek-R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system.

NVIDIA said developers can test and experiment with the application programming interface (API), which is expected to be available soon as a downloadable NIM microservice as part of the NVIDIA AI Enterprise software platform.

DeepSeek-R1 NIM microservices simplify deployment by supporting industry standard APIs. Organizations can maximize security and data privacy by running NIM microservices on their preferred accelerated computing infrastructure. By combining NVIDIA AI Foundry with NVIDIA NeMo software, organizations will also be able to create customized DeepSeek-R1 NIM microservices for dedicated AI agents.
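As a rough illustration of what "industry standard APIs" can look like in practice, the sketch below builds a chat-completion request in the widely used OpenAI-style JSON format. The article does not specify the API shape, so the base URL, the model identifier, the payload fields, and the helper names `build_chat_request` and `send` are all assumptions made for this example. Check them against the documentation at build.nvidia.com before relying on them.

```python
import json
import urllib.request

# Assumed values for illustration only; none of these appear in the article.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL, max_tokens=512):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the first reply text (needs network access and a valid key)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    # Assumes the response follows the OpenAI-style "choices" layout.
    return body["choices"][0]["message"]["content"]
```

Because the base URL is a parameter, the same request builder could in principle target a self-hosted NIM microservice instead of the hosted preview, which is the portability the paragraph above describes. Calling `send(build_chat_request("Hello", api_key))` would perform the actual request.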

1AI notes that NVIDIA launched NIM (NVIDIA Inference Microservices), a set of cloud-native microservices, in March 2024 to make it easier for organizations of all sizes to deploy AI services.

NIM is a suite of cloud-native microservices optimized to reduce time-to-market and simplify the deployment of generative AI models anywhere, whether in the cloud, in data centers, or on GPU-accelerated workstations. It broadens the pool of developers who can work with these models by abstracting the complexity of AI model development and production packaging behind industry-standard APIs.