January 29, 2025 - On Lunar New Year's Day, Alibaba Cloud unveiled Tongyi Qianwen Qwen2.5-Max, its new ultra-large-scale MoE (Mixture-of-Experts) model. The model is accessible via an API, or you can log in to Qwen Chat and try it there, for example by talking to the model directly or by using artifacts, search, and other features.
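
For readers curious about the API route, here is a minimal sketch of what a request might look like. It assumes the service exposes an OpenAI-style chat-completions endpoint; the base URL, the model name `qwen-max-2025-01-25`, and the `DASHSCOPE_API_KEY` environment variable are assumptions not stated in this article and should be checked against Alibaba Cloud's current documentation. The helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import os
import urllib.request

# Assumptions (not stated in the article): endpoint, model name, env var name.
BASE_URL = "https://dashscope.aliyuncs.com/compatible-mode/v1"
MODEL_NAME = "qwen-max-2025-01-25"
API_KEY_ENV = "DASHSCOPE_API_KEY"


def build_chat_request(prompt, api_key):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt):
    """Send the request and return the reply text (needs network and a valid key)."""
    request = build_chat_request(prompt, os.environ[API_KEY_ENV])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

Building the request separately from sending it makes it easy to inspect the payload before any network call. With a valid key set in the environment, `ask("Which number is larger, 9.11 or 9.8?")` would send a single-turn question.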

According to the announcement, Qwen2.5-Max was pre-trained on more than 20 trillion tokens and then refined with a carefully designed post-training scheme.

Performance

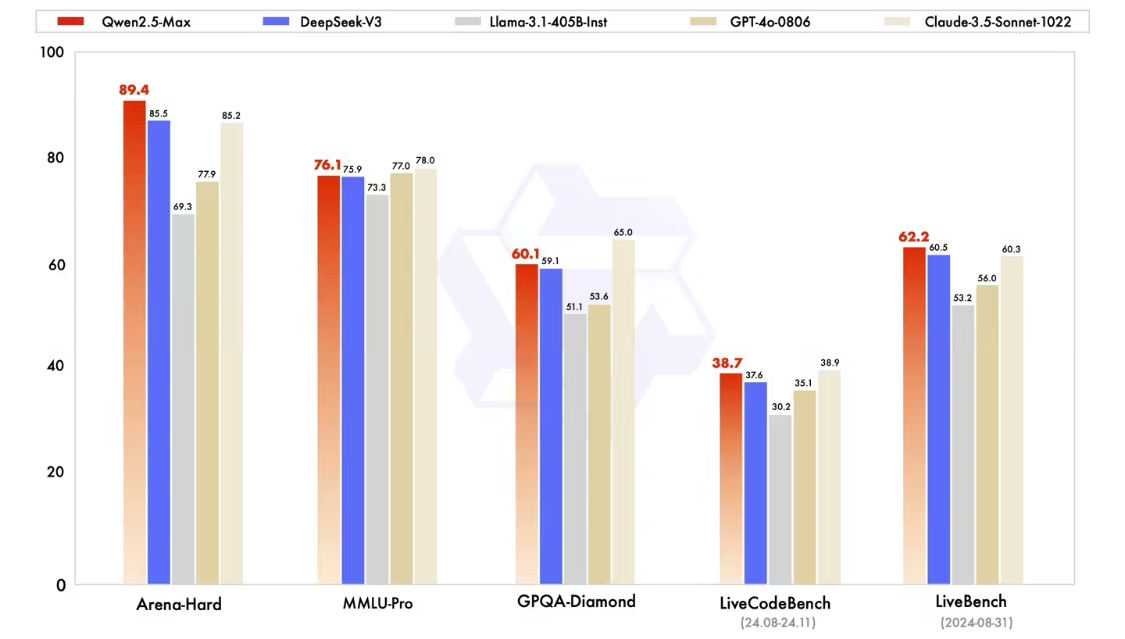

Alibaba Cloud directly compared the performance of the instruct models (note: an instruct model is the kind of model we normally use, one that can converse directly with the user). The comparison includes DeepSeek V3, GPT-4o, and Claude-3.5-Sonnet, and the results are as follows:

Qwen2.5-Max outperforms DeepSeek V3 in benchmarks such as Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond, while also demonstrating competitive scores in other evaluations such as MMLU-Pro.

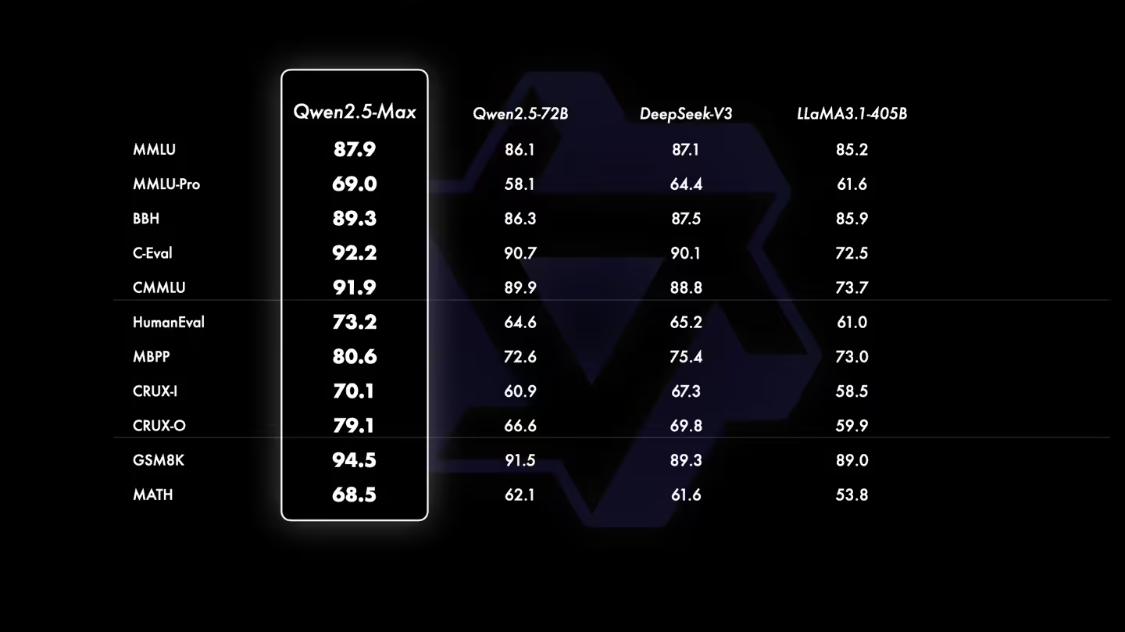

In the base model comparison, the base models of closed-source models such as GPT-4o and Claude-3.5-Sonnet are not accessible, so Alibaba Cloud compares Qwen2.5-Max with DeepSeek V3, the current leading open-source MoE model; Llama-3.1-405B, the largest open-source dense model; and Qwen2.5-72B, which is also among the top open-source dense models. The comparison results are shown in the figure below:

According to Alibaba Cloud, the base model shows significant advantages on most benchmarks. The company believes that the next version of Qwen2.5-Max will reach even higher levels as post-training techniques continue to improve.