Want to become an expert at AI video production? Mastering Kling AI's versatile generation features will make your video creation much easier. This post is an advanced tutorial on video generation from the Kling AI user guide.

Standard Mode versus High-Quality Mode

"Standard Mode" generates video faster and at a lower inference cost. Use it to quickly check how a prompt turns out and to cover everyday creative needs. "High-Quality Mode" generates video with richer detail at a higher inference cost. Use it to produce high-quality videos when you need more polished, advanced work.

Each mode has the following strengths, so choose the one that fits your situation:

Standard Mode: faster generation at a lower inference cost. It is good at portraits, animals, and scenes with large-amplitude motion, renders animals in an especially endearing way with a soft color palette, and is the model that earned good reviews when Kling was first released.

High-Quality Mode: richer detail at a higher inference cost. It is good at portraits, animals, buildings, landscapes, and similar subjects, with more refined composition and tonal atmosphere, and it is currently the mode most used on Kling for polished video creation.

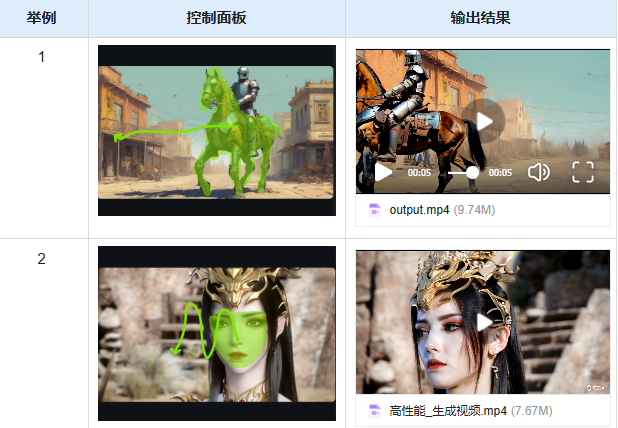

Camera Control

The High-Quality Mode of both Kling 1.0 and Kling 1.5 supports camera control, which currently offers 6 basic camera movements: horizontal move, vertical move, push/pull, vertical pan, horizontal pan, and rotation. For text-to-video, the Kling 1.0 model additionally supports 4 "master" camera moves: rotate left and push in, rotate right and push in, push in and move up, and move down and pull back. These help creators generate videos with a clearly defined camera effect.

Camera control is part of the language of cinematography. To support more diverse video creation and make the model respond better to a creator's camera direction, the platform added camera control, which dictates the camera behavior in the video with explicit commands. You can adjust the displacement parameter to choose the amplitude of the movement. For example, the same prompt, "A big cat playing the piano by the lake", can be generated with each of the different camera movements.
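To make the idea of "a movement type plus a displacement amplitude" concrete, here is a minimal Python sketch of how one camera-control setting could be modeled. It is not Kling's API: the class and enum names are hypothetical, and the -10 to 10 displacement range is an assumption, since the tutorial does not state the actual limits.

```python
from dataclasses import dataclass
from enum import Enum


class CameraMove(Enum):
    """The six basic camera movements described above (hypothetical names)."""
    HORIZONTAL = "horizontal move"
    VERTICAL = "vertical move"
    PUSH_PULL = "push/pull"
    TILT = "vertical pan"
    PAN = "horizontal pan"
    ROLL = "rotation"


# Assumed amplitude range; the real limits are not given in the tutorial.
MIN_DISPLACEMENT = -10
MAX_DISPLACEMENT = 10


@dataclass(frozen=True)
class CameraControl:
    move: CameraMove
    displacement: int  # amplitude of the move; the sign picks the direction (assumption)

    def __post_init__(self) -> None:
        # Reject amplitudes outside the assumed range.
        if not MIN_DISPLACEMENT <= self.displacement <= MAX_DISPLACEMENT:
            raise ValueError(
                f"displacement must be in [{MIN_DISPLACEMENT}, {MAX_DISPLACEMENT}]"
            )

    def describe(self) -> str:
        return f"{self.move.value} with displacement {self.displacement}"


# One prompt rendered with two different camera settings.
prompt = "A big cat playing the piano by the lake"
for shot in (CameraControl(CameraMove.PUSH_PULL, 5), CameraControl(CameraMove.PAN, -3)):
    print(f"{prompt}: {shot.describe()}")
```

Making the setting immutable and validating it on construction means an out-of-range amplitude is caught before any generation request would be built.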

First and Last Frame Capability

The first and last frame feature lets you upload two images, which the model uses as the first and last frames of a generated video. To try it, click "Add Last Frame" in the upper-right corner of the "Image-to-Video" feature.

The first and last frame feature gives finer control over the video. At present it is mainly used when a video must start and end on specific frames, and it helps the generated video achieve the intended dynamic transition. Note, however, that the content of the first and last frames should be as similar as possible; if they differ greatly, the video may cut to a different shot.

Some tips:

Choose two images with the same subject and similar content, so the model can connect them smoothly within 5 s. If the two images differ significantly, a shot cut may be triggered.
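Before uploading a pair, you can roughly gauge how different the two frames are. The sketch below compares two equally sized grayscale images (lists of rows of 0-255 values) by their mean absolute pixel difference. This is only a crude proxy chosen for illustration: Kling does not document how it decides when to cut shots, and the 0.25 threshold is an arbitrary assumption.

```python
def mean_abs_diff(first, last):
    """Mean absolute pixel difference of two grayscale frames, scaled to [0, 1]."""
    if len(first) != len(last) or any(len(a) != len(b) for a, b in zip(first, last)):
        raise ValueError("frames must have the same dimensions")
    total = 0
    count = 0
    for row_a, row_b in zip(first, last):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    return total / (count * 255)


def may_trigger_shot_cut(first, last, threshold=0.25):
    """Hypothetical heuristic: flag pairs whose pixels differ a lot on average."""
    return mean_abs_diff(first, last) > threshold


dark = [[10, 10], [10, 10]]
slightly_lighter = [[20, 20], [20, 20]]
bright = [[250, 250], [250, 250]]
print(may_trigger_shot_cut(dark, slightly_lighter))  # False
print(may_trigger_shot_cut(dark, bright))            # True
```

Raw pixel differences ignore composition and subject identity, so a perceptual or feature-based similarity measure would be a more meaningful check in practice.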

Many creators first generate a matching pair of images with an image-generation tool and then use the first and last frame feature to create the video, for example:

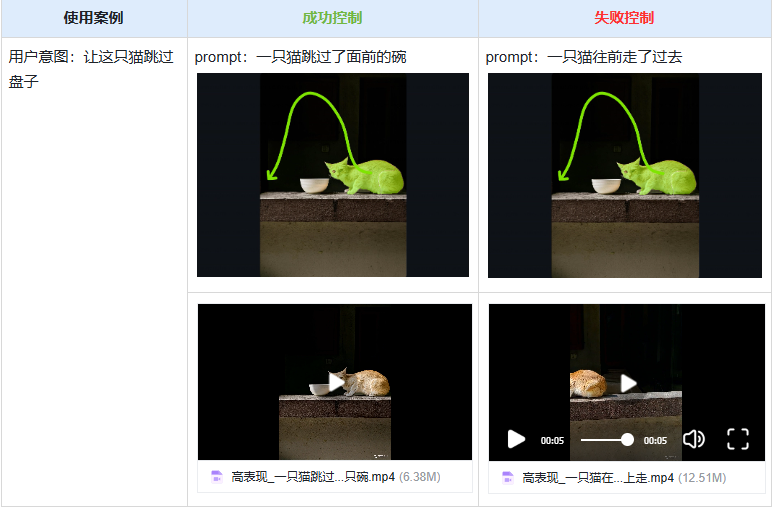

Motion Brush Capability

The motion brush feature lets you upload any image, select a region or subject in it using "Auto Select" or by painting, and add a motion trajectory. Then enter the expected motion prompt (subject + motion) and click Generate: the model produces an image-to-video result with the specified motion added. This gives control over the motion of a specific subject and adds advanced controllable generation to image-to-video.

As a more controllable form of image-to-video generation, the motion brush can make a chosen region or subject move the way you specify, including motions that are hard to achieve with plain image-to-video, such as "a ball moving" or "a character or animal turning and following a walking path". Up to 6 subjects and trajectories can be set at the same time. This release also adds a "Static Brush": the model keeps the pixels of any area painted with the static brush fixed, which prevents camera movement. If you do not want a motion trajectory to cause unwanted camera movement, it is recommended to paint the bottom of the image with the static brush.

Some tips:

When using the motion brush, it is recommended to add a prompt as well, and to keep the prompt consistent with the selected region and the subject being moved, for example "puppy running on the road". The prompt should also follow the "subject + motion" format.

Selecting a key part of an object (such as an animal's head) enables more precise motion control.

For objects that cannot move in the physical world, if you give them a motion trajectory, the model interprets the image and the motion command and produces a camera-movement effect instead.

To keep the model from producing camera movement, use the "Static Brush", which fixes the pixels of the painted area.
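The constraints in this subsection (at most 6 moving subjects, a "subject + motion" prompt, and optional static areas) can be captured in a small data structure for planning a shot. This is a hypothetical Python sketch, not Kling's interface; all class names and the coordinate format are assumptions.

```python
from dataclasses import dataclass, field

# The tutorial states that up to 6 subjects/trajectories can be set at once.
MAX_MOTION_BRUSHES = 6

Point = tuple[float, float]  # (x, y) in image pixels (assumption)


@dataclass
class MotionBrush:
    subject: str             # what the brush selects, e.g. "puppy"
    motion: str              # what it should do, e.g. "running on the road"
    trajectory: list[Point]  # drawn path, start point first


@dataclass
class MotionBrushSetup:
    brushes: list[MotionBrush] = field(default_factory=list)
    # Areas painted with the Static Brush, as hypothetical polygon outlines.
    static_regions: list[list[Point]] = field(default_factory=list)

    def validate(self) -> None:
        if len(self.brushes) > MAX_MOTION_BRUSHES:
            raise ValueError(f"at most {MAX_MOTION_BRUSHES} motion brushes are supported")
        for brush in self.brushes:
            if len(brush.trajectory) < 2:
                raise ValueError(f"trajectory for {brush.subject!r} needs at least 2 points")

    def prompt(self) -> str:
        # Follow the "subject + motion" pattern for each brush.
        return "; ".join(f"{b.subject} {b.motion}" for b in self.brushes)


setup = MotionBrushSetup(
    brushes=[MotionBrush("puppy", "running on the road", [(100, 400), (500, 380)])],
    # Pin the bottom strip of a hypothetical 1280x720 image to discourage camera movement.
    static_regions=[[(0, 650), (1280, 650), (1280, 720), (0, 720)]],
)
setup.validate()
print(setup.prompt())  # puppy running on the road
```

Building the prompt from the same subject labels used for the brushes keeps the text and the painted regions consistent, as the tips above recommend.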

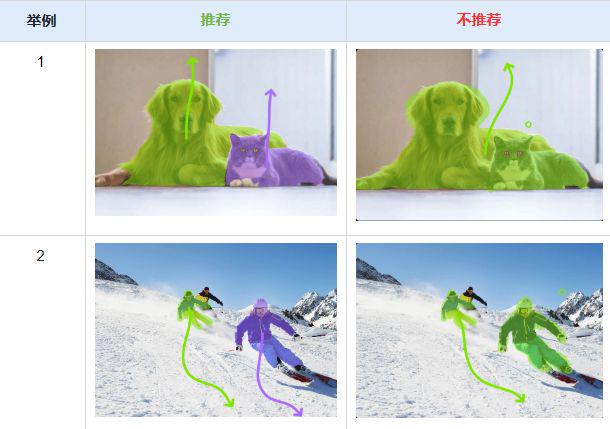

Recommendations on selection areas

Each motion brush should select only objects of a single, consistent category [Recommended].

Each motion brush should paint only one connected area, not several areas separated from each other [Recommended].

The Static Brush can select several unconnected areas, but it is still recommended that each selection stay within the same category.
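The "one connected area per motion brush" recommendation can be checked on a painted mask by counting its connected regions. The sketch below uses a breadth-first flood fill with 4-connectivity on a mask given as rows of 0/1 values; the mask format and the choice of 4-connectivity are assumptions, since the tutorial does not say how connectivity is defined.

```python
from collections import deque


def count_regions(mask):
    """Count 4-connected regions of painted (truthy) cells in a 2D mask."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Found a new region: flood-fill everything connected to it.
                regions += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return regions


def is_single_region(mask):
    """True if a motion-brush mask paints exactly one connected area."""
    return count_regions(mask) == 1


print(is_single_region([[1, 1, 0], [0, 1, 0], [0, 0, 0]]))  # True
print(is_single_region([[1, 0, 1]]))                        # False
```

The same counter could be used on a Static Brush mask, where more than one region is allowed.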

Recommendations on movement trajectories

Both the direction and the length of the trajectory curve take effect: the curve should start inside the selected area, and its end point is where the object is expected to come to rest at the end of the video.

Between the start and end points, the selected object moves strictly along the drawn trajectory.
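To make these start/end semantics concrete, the sketch below maps a point in time (as a fraction of the video, from 0 to 1) to a position on a drawn polyline trajectory, so the object starts at the first point and rests at the last. Spreading time evenly by arc length (constant speed) is an assumption made for illustration; the tutorial only says the path and end point are followed, not how the motion is timed.

```python
import math


def point_at(trajectory, t):
    """Position on a polyline after fraction t (0..1) of the video, assuming constant speed."""
    if len(trajectory) < 2:
        raise ValueError("a trajectory needs at least two points")
    t = min(max(t, 0.0), 1.0)
    segments = list(zip(trajectory, trajectory[1:]))
    lengths = [math.dist(a, b) for a, b in segments]
    remaining = t * sum(lengths)  # distance travelled so far
    for (a, b), length in zip(segments, lengths):
        if remaining <= length:
            f = remaining / length if length else 0.0
            return (a[0] + f * (b[0] - a[0]), a[1] + f * (b[1] - a[1]))
        remaining -= length
    # Floating-point rounding can leave a tiny remainder; the object rests at the end point.
    return tuple(trajectory[-1])


# Start inside the selection, end where the object should rest.
path = [(0, 0), (10, 0), (10, 10)]
for t in (0.0, 0.5, 0.75, 1.0):
    print(t, point_at(path, t))
```

Because the position is computed along the drawn segments rather than on a straight line from start to end, the intermediate motion follows the path's corners, matching the "strictly along the trajectory" behavior described above.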

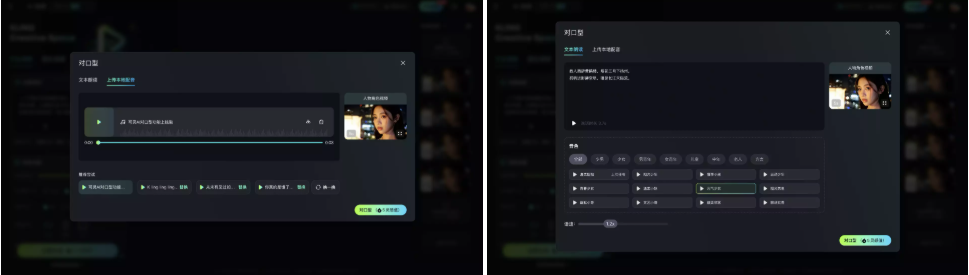

Lip Sync

The Lip Sync feature lets you, after generating a character video in Kling AI, upload a local voice-over or singing file, or generate a voice-over online with the text-to-speech function. Kling AI then synchronizes the character's lip movements with the audio, as if a real person were talking or singing, and the video instantly comes to life.

Usage:

1) Use Kling AI to generate a video that shows the character's full face, then click "Lip Sync" below the preview video.

2) In the lip-sync pop-up window, generate a voice-over with text-to-speech, or upload a local voice-over or singing file.

The text-to-speech voices are highly realistic large-model voices, and the speaking speed can be adjusted from 0.8x to 2x.

3) Click the Lip Sync button and wait for the video to be generated; the character's lip movements will then be synchronized with the audio.
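Because playback speed scales duration inversely, you can estimate whether a text-to-speech voice-over will fit a clip before generating it. In this sketch, `base_seconds` (the narration length at 1x speed) is an input you would measure yourself, and the function names are hypothetical; only the 0.8x to 2x range comes from the tutorial.

```python
from typing import Optional

# Speed range stated in the tutorial.
MIN_SPEED = 0.8
MAX_SPEED = 2.0


def narration_seconds(base_seconds: float, speed: float) -> float:
    """Length of a voice-over played at `speed`, given its length at 1x."""
    if not MIN_SPEED <= speed <= MAX_SPEED:
        raise ValueError(f"speed must be between {MIN_SPEED}x and {MAX_SPEED}x")
    return base_seconds / speed


def slowest_speed_that_fits(base_seconds: float, video_seconds: float) -> Optional[float]:
    """Smallest allowed speed at which the narration is no longer than the video.

    Returns None when even the maximum speed is too slow.
    """
    needed = max(base_seconds / video_seconds, MIN_SPEED)
    return needed if needed <= MAX_SPEED else None


print(narration_seconds(10, 2.0))        # 5.0
print(slowest_speed_that_fits(12, 10))   # 1.2
print(slowest_speed_that_fits(30, 10))   # None
```

Picking the slowest speed that still fits keeps the speech as natural as possible; if no allowed speed fits, the audio has to be shortened or cropped instead.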

Note: Lip Sync is a paid feature, and the price depends on the length of the character video: lip-syncing a 5 s video costs 5 Inspiration Value, and lip-syncing a 10 s video costs 10 Inspiration Value.
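For budgeting several clips, the two documented price points can be put in a lookup table. This sketch deliberately does not extrapolate to other lengths, since the tutorial only gives prices for 5 s and 10 s videos; the function names are hypothetical.

```python
# Prices stated in the note above: video length in seconds -> Inspiration Value.
LIP_SYNC_COST = {5: 5, 10: 10}


def lip_sync_cost(video_seconds: int) -> int:
    """Look up the documented lip-sync price for one video."""
    try:
        return LIP_SYNC_COST[video_seconds]
    except KeyError:
        raise ValueError(f"no documented price for a {video_seconds} s video") from None


def batch_cost(video_lengths: list[int]) -> int:
    """Total Inspiration Value for lip-syncing several videos."""
    return sum(lip_sync_cost(s) for s in video_lengths)


print(batch_cost([5, 10, 10]))  # 25
```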

If the audio you upload, or the audio generated by text-to-speech, is longer than the video, you will also be able to crop the audio to fit.
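Conceptually, cropping keeps only a video-length window of the audio. The sketch below does this on a plain list of audio samples; the `offset_seconds` parameter (which part of the audio to keep) is a hypothetical addition, since the tutorial does not say whether you can choose the segment.

```python
def crop_audio(samples: list[float], sample_rate: int, video_seconds: float,
               offset_seconds: float = 0.0) -> list[float]:
    """Return the slice of `samples` that fits a video of `video_seconds`."""
    if sample_rate <= 0 or video_seconds <= 0:
        raise ValueError("sample_rate and video_seconds must be positive")
    start = round(offset_seconds * sample_rate)
    if not 0 <= start < len(samples):
        raise ValueError("offset lies outside the audio")
    length = round(video_seconds * sample_rate)
    # Slicing past the end simply returns the remaining samples.
    return samples[start:start + length]


audio = [0.0] * (12 * 8000)  # 12 s of silence at 8 kHz
clip = crop_audio(audio, 8000, video_seconds=10)
print(len(clip) / 8000)  # 10.0
```

Audio that is already shorter than the video passes through unchanged, so the function only ever trims.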

Some tips:

Videos generated by both the Kling 1.0 and Kling 1.5 models support lip sync, as long as the face in the video meets the requirements.

Currently, Kling AI supports lip sync for human characters (live-action, 3D, or 2D), but not for animal characters.