Researchers from Anhui University of Technology, Nanyang Technological University, and Lehigh University have open-sourced a multimodal large model: TinyGPT-V.

TinyGPT-V uses Microsoft's open-source Phi-2 as its base large language model and the visual model EVA to achieve multimodal capabilities. Although TinyGPT-V has only 2.8 billion parameters, its performance is comparable to that of models with tens of billions of parameters.

In addition, training TinyGPT-V requires only a GPU with 24 GB of memory; high-end graphics cards such as the A100 and H100 are not needed.
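
As a rough back-of-the-envelope check on that memory figure, the sketch below estimates how much memory is needed just to hold the weights of a 2.8-billion-parameter model at common numeric precisions. The bytes-per-parameter values are standard, but the function name is illustrative, and the estimate deliberately ignores activations, gradients, and optimizer state, none of which the article quantifies.

```python
# Rough weight-only memory estimate.
# Assumption: ignores activations, gradients, and optimizer state.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory in GiB needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3

# 2.8 billion parameters is the model size quoted in the article.
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gib(2.8e9, dtype):.1f} GiB")
```

Training needs more memory than the weights alone, so these numbers are only a lower bound, not a reproduction of the authors' measurements.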

Therefore, it is very suitable for small and medium-sized enterprises and individual developers, and can be deployed on mobile devices such as mobile phones and laptops.

Open source address: https://github.com/DLYuanGod/TinyGPT-V

Paper address: https://arxiv.org/abs/2312.16862

TinyGPT-V main architecture

TinyGPT-V mainly consists of three major parts: the large language model Phi-2, the visual encoder, and the linear projection layer.

The developers chose Phi-2, Microsoft's latest open-source model, as the base large language model of TinyGPT-V. Phi-2 has only 2.7 billion parameters, but its understanding and reasoning capabilities are strong: on many complex benchmarks it achieves results close to or exceeding those of models with 13 billion parameters.

The visual encoder uses the same architecture as MiniGPT-v2: the ViT-based EVA model. It is a pre-trained visual foundation model that remains frozen throughout the training of TinyGPT-V.

The role of the linear projection layers is to map the image features extracted by the visual encoder into the embedding space of the large language model, so that the language model can understand the image information.

The first linear projection layer of TinyGPT-V uses the Q-Former structure from BLIP-2, which maximizes reuse of BLIP-2's pre-training results.

The second linear projection layer is newly initialized from a Gaussian distribution and bridges the dimensionality gap between the output of the previous layer and the embedding layer of the language model.
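
To make the two-step projection concrete, here is a minimal pure-Python sketch of image features passing through two linear layers until they match the language model's embedding width. Everything in it is an assumption made for illustration: the toy dimensions, the helper names `gaussian_linear` and `linear`, the standard deviation of 0.02, and the random first-layer weights (in the article, the first layer reuses BLIP-2 pre-training results rather than random values). It is not the authors' implementation.

```python
import random

def gaussian_linear(in_dim, out_dim, std=0.02, seed=0):
    """Build an (out_dim x in_dim) weight matrix drawn from N(0, std^2) and a zero bias."""
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, std) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias

def linear(tokens, weight, bias):
    """Apply y = W x + b to every token vector in a sequence."""
    return [
        [sum(w * x for w, x in zip(row, tok)) + b for row, b in zip(weight, bias)]
        for tok in tokens
    ]

# Toy sizes chosen only for illustration (not the real model dimensions).
NUM_TOKENS, VISUAL_DIM, MID_DIM, LLM_DIM = 4, 8, 16, 10

# Stand-in for the image features produced by the frozen visual encoder.
rng = random.Random(1)
visual_tokens = [[rng.gauss(0.0, 1.0) for _ in range(VISUAL_DIM)]
                 for _ in range(NUM_TOKENS)]

# First projection: a random placeholder for the BLIP-2-derived layer.
w1, b1 = gaussian_linear(VISUAL_DIM, MID_DIM, seed=2)
# Second projection: freshly Gaussian-initialized, maps to the LLM embedding width.
w2, b2 = gaussian_linear(MID_DIM, LLM_DIM, seed=3)

llm_inputs = linear(linear(visual_tokens, w1, b1), w2, b2)
print(len(llm_inputs), len(llm_inputs[0]))  # one LLM_DIM-wide vector per image token
```

The point of the second layer is visible in the shapes alone: the number of image tokens is unchanged, while the width of each token is converted to whatever the language model's embedding layer expects.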

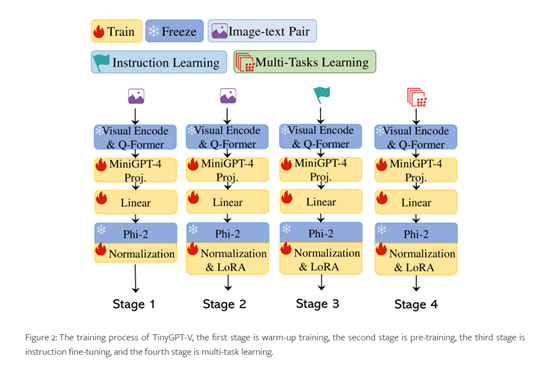

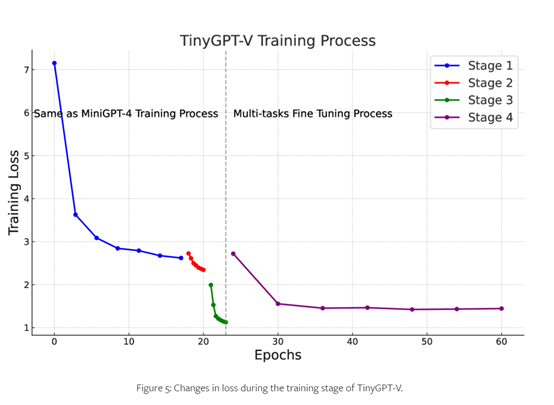

TinyGPT-V training process

The training of TinyGPT-V went through four stages, and the data sets and experimental processes used in each stage were different.

The first stage is warm-up training, whose purpose is to adapt the Phi-2 model to image-based input. The training data used in this stage comes from three datasets, Conceptual Caption, SBU, and LAION, totaling about 5 million images with corresponding description texts.

The second stage is pre-training, whose purpose is to further reduce the loss on image-text pairs. This stage uses the same Conceptual Caption, SBU, and LAION datasets as the first stage. It is itself split into 4 sub-stages, each running for 5,000 iterations.

The third stage is instruction tuning, which trains the model on instruction-bearing image-text pairs from MiniGPT-4 and LLaVA, such as "Describe the content of this picture."

The fourth stage is multi-task tuning. This stage uses more complex and richer multimodal datasets, such as sentences with complex semantic alignment from LLaVA, object parsing datasets from Flickr30K, multi-task mixed corpora, and plain text corpora.

At the same time, a learning rate strategy similar to that in the second stage was adopted, which eventually reduced the loss from 2.720 to 1.399.
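
The four stages can be summarized as a small configuration sketch. The `Stage` class and its field names are hypothetical, introduced only for illustration. Values the article does not state are left as `None` rather than guessed, and the sketch assumes the "4 stages of 5000 iterations" figure applies to the second stage.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Stage:
    name: str
    data: Tuple[str, ...]
    sub_stages: Optional[int] = None           # None = not stated in the article
    iters_per_sub_stage: Optional[int] = None  # None = not stated in the article

    def total_iterations(self) -> Optional[int]:
        """Total iterations, or None when the article gives no figures."""
        if self.sub_stages is None or self.iters_per_sub_stage is None:
            return None
        return self.sub_stages * self.iters_per_sub_stage

PRETRAIN_DATA = ("Conceptual Caption", "SBU", "LAION")

STAGES = (
    Stage("warm-up", PRETRAIN_DATA),
    Stage("pre-training", PRETRAIN_DATA, sub_stages=4, iters_per_sub_stage=5000),
    Stage("instruction tuning", ("MiniGPT-4", "LLaVA")),
    Stage("multi-task tuning",
          ("LLaVA", "Flickr30K", "multi-task mixed corpora", "plain text corpora")),
)

for number, stage in enumerate(STAGES, start=1):
    print(number, stage.name, stage.total_iterations())
```

Under that reading, the second stage amounts to 20,000 iterations in total; the iteration counts of the other stages are not given here.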

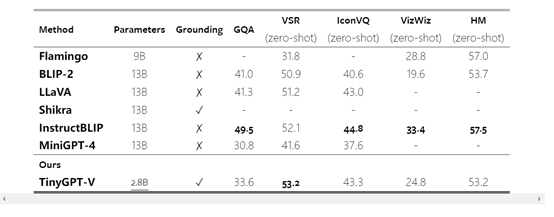

To test TinyGPT-V, the researchers evaluated it on multiple vision-language tasks, including visual question answering, visual-spatial reasoning, and image caption generation.

The results show that TinyGPT-V delivers strong performance despite its small parameter count. For example, on the VSR spatial reasoning task, it surpassed all the other models tested, with an accuracy of 53.2%.