Recently, researchers proposed a new image segmentation method called Generalizable SAM (GenSAM) model. The design goal of this model is to achieve targeted segmentation of images through a general task description, getting rid of the reliance on sample-specific cues. In a specific task, given a task description, such as "camouflaged sample segmentation", the model needs to accurately segment the camouflaged animals in the image according to the task description, without relying on manual specific cues for each image.

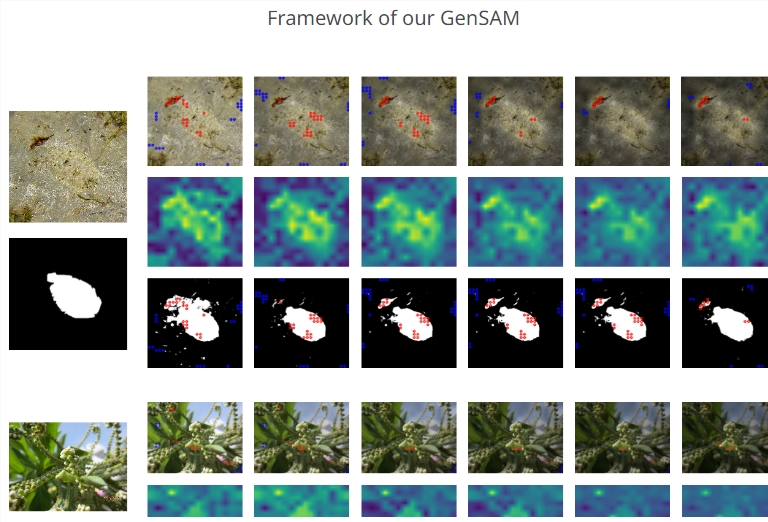

To solve this problem, the GenSAM model introduces the Cross-modal Chains of Thought Prompting (CCTP) and Progressive Mask Generation (PMG) frameworks. The CCTP thought chain maps the common text prompts of the task to all the images under the task, generating a personalized consensus heat map of the object of interest and its background, thereby obtaining reliable visual cues to guide segmentation. In order to achieve adaptability during testing, the PMG framework iteratively reweights the generated heat map to the original image, guiding the model to focus on possible target areas from coarse to fine.

The experimental results of GenSAM show that the model performs better than the baseline method and the weakly supervised method in the task of camouflaged sample segmentation and has good generalization performance. The introduction of this model is an important step for the practical application of cued segmentation methods such as SAM.

The innovation of this research is that by providing a general task description, the GenSAM model can process unlabeled images of all relevant tasks in batches without manually providing specific hints for each image. This makes the model more efficient and scalable when processing large amounts of data.

In the future, the method of the GenSAM model may provide new ideas and solutions for image segmentation tasks in other fields. The researchers hope that this general task description-guided image segmentation method can promote the development of computer vision and improve the segmentation accuracy of the model in complex scenes.

- Paper link: https://arxiv.org/pdf/2312.07374.pdf

- Project link: https://lwpyh.github.io/GenSAM/