On January 16, ModelBest announced the launch of MiniCPM-o 2.6, an on-device omni-modal model. With 8B parameters, it is claimed to perform comparably to GPT-4o and Claude 3.5 Sonnet.

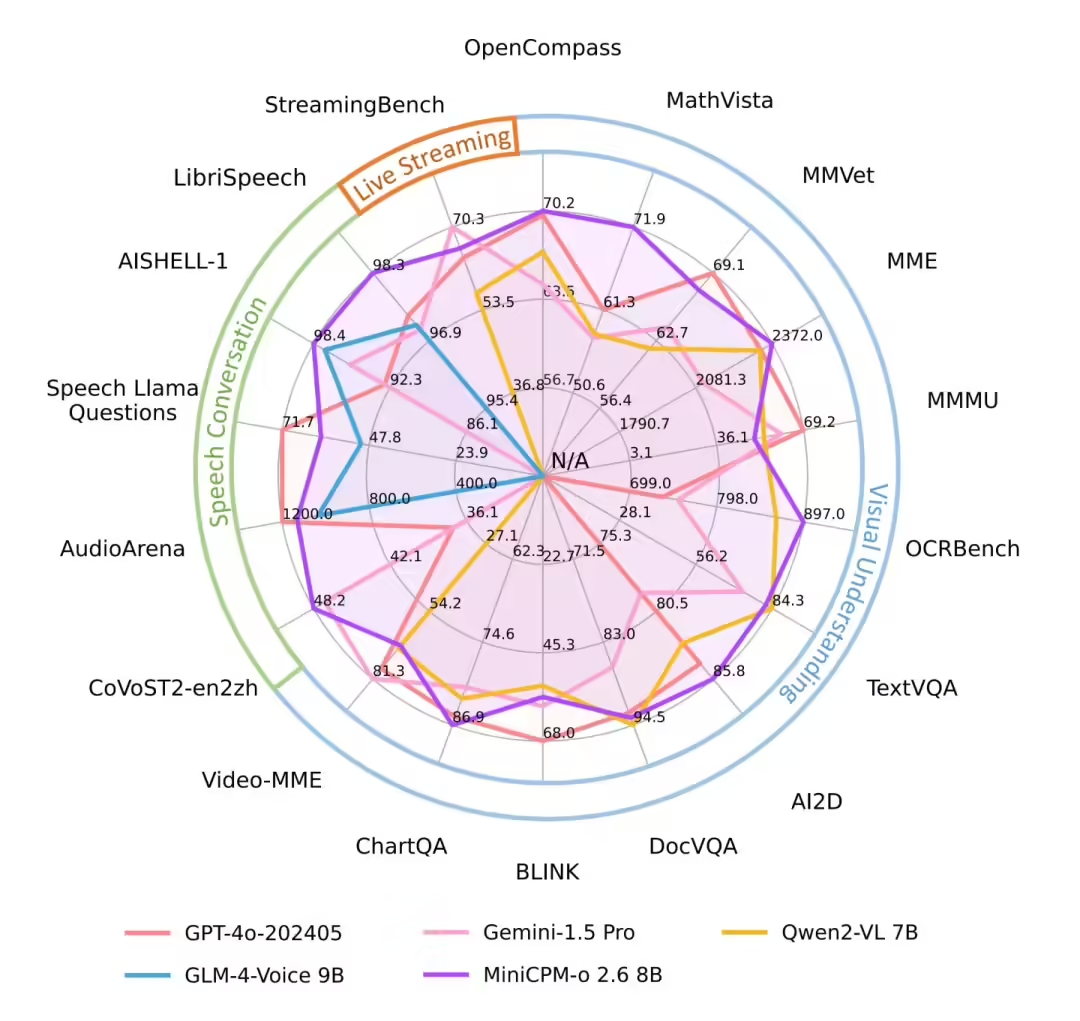

The model uses an end-to-end omni-modal architecture that can process several types of data simultaneously, including text, images, audio, and video, and generate high-quality text and speech output. According to the company, with only 8B total parameters, its vision, speech, and multimodal live-streaming capabilities reach the level of GPT-4o-202405, making it one of the open-source community's richest models in both modality coverage and performance.

MiniCPM-o 2.6 supports bilingual voice conversation with configurable voices, along with advanced capabilities such as emotion, speed, and style control, end-to-end voice cloning, and role-playing.

According to the official introduction, MiniCPM-o 2.6 is also the first multimodal large model to support real-time multimodal streaming interaction on edge devices such as the iPad. With an average score of 70.2 on the OpenCompass leaderboard (which aggregates 8 mainstream multimodal benchmarks), it outperforms mainstream commercial closed-source multimodal models such as GPT-4o-202405, Gemini 1.5 Pro, and Claude 3.5 Sonnet in single-image understanding, despite having only about 8B parameters.

1AI has included the open-source links below:

- GitHub: https://github.com/OpenBMB/MiniCPM-o

- Hugging Face: https://huggingface.co/openbmb/MiniCPM-o-2_6