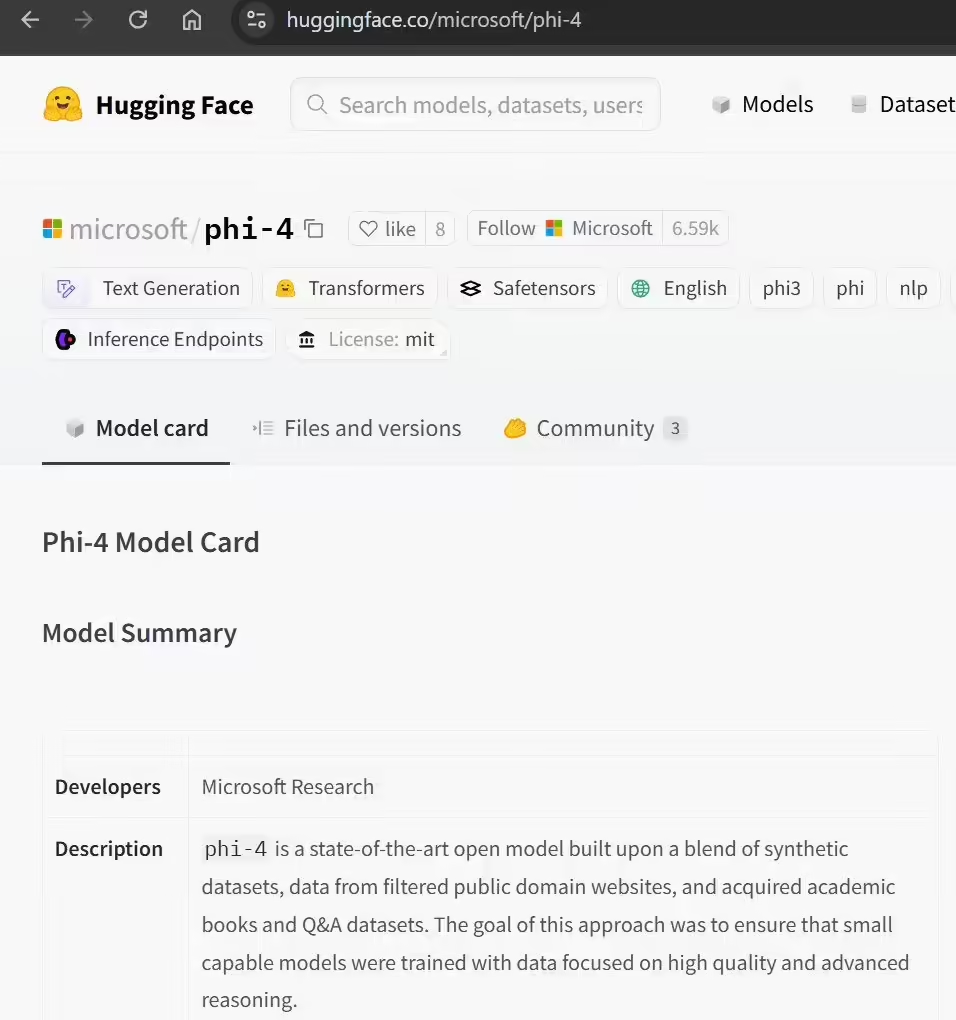

January 9, 2025 - Microsoft first released its small language model Phi-4 on December 12, 2024. Yesterday (Jan. 8), the company open-sourced it on the Hugging Face platform, where interested developers and testers can download, fine-tune, and deploy the model.

With only 14 billion parameters, Phi-4 outperforms the much larger Llama 3.3 70B (which has nearly five times as many parameters) and OpenAI's GPT-4o Mini on several benchmarks. On math competition problems, it even outperforms Gemini 1.5 Pro and OpenAI's GPT-4o.

Phi-4's strong performance is mainly attributed to Microsoft's use of carefully selected, high-quality training data. Microsoft has not yet optimized the model for inference, so developers can further optimize and quantize it in the future to run it locally on devices such as PCs and laptops.
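To show why quantization matters for running a model on a PC or laptop, here is a rough back-of-the-envelope sketch. It assumes the article's figure of 14 billion parameters and estimates the memory needed to store the weights alone at different numeric precisions. The function name `weight_memory_gb` is hypothetical, and the estimate ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
# Rough weight-memory estimate for a model stored at different numeric precisions.
# Assumption: ~14e9 parameters (the article's "14 billion"); weights only,
# ignoring activations, KV cache, and framework/runtime overhead.

PHI4_PARAMS = 14e9  # approximate parameter count taken from the article


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Compare a 16-bit baseline with common quantized widths.
    for label, bits in [("FP16/BF16", 16), ("INT8", 8), ("4-bit", 4)]:
        print(f"{label:>9}: ~{weight_memory_gb(PHI4_PARAMS, bits):.0f} GB")
```

Under these assumptions, the weights shrink from about 28 GB at 16 bits to about 7 GB at 4 bits. That difference is roughly what separates needing a workstation-class GPU from fitting within the memory of many consumer machines, although the actual savings depend on the quantization scheme used.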