January 4, 2025 - Alibaba's Tongyi Qwen team has released CodeElo, a new benchmark that assesses the programming ability of large language models (LLMs) with an Elo rating system directly comparable to the ratings of human programmers.

Project Background

Code generation and code completion are among the most important applications of large language models, but accurately assessing their real programming ability remains difficult.

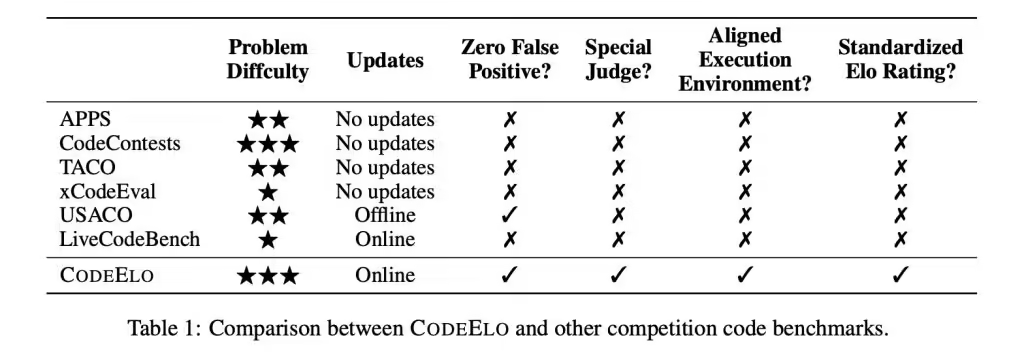

Existing benchmarks such as LiveCodeBench and USACO have several limitations: they lack robust private test cases, do not support special judges, and often run code in inconsistent execution environments.

CodeElo: Leveraging CodeForces for a More Accurate LLM Evaluation System

To address these challenges, the Qwen research team has introduced the CodeElo benchmark, which uses an Elo rating system to measure the competitive programming ability of LLMs on the same scale as human programmers.

CodeElo's problems come from the CodeForces platform, which is known for its rigorous programming contests. Because solutions are submitted directly to CodeForces for judging, CodeElo obtains accurate verdicts, avoids issues such as false positives, and supports problems that require a special judge. In addition, its Elo ratings mirror the human rating scale, allowing LLMs to be compared directly with human contestants.
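Why a special judge matters: some problems accept more than one correct output, so the judge has to run a checker that validates the answer instead of comparing it byte-for-byte with a single reference output. The sketch below uses a hypothetical problem ("print any two distinct 1-based indices whose values sum to the target"); the function name `check_two_sum` is illustrative only and is not part of CodeForces or CodeElo.

```python
def check_two_sum(values: list[int], target: int, output: str) -> bool:
    """Hypothetical special judge: accept ANY pair of distinct 1-based
    indices i, j such that values[i-1] + values[j-1] == target."""
    tokens = output.split()
    if len(tokens) != 2:
        return False
    try:
        i, j = int(tokens[0]), int(tokens[1])
    except ValueError:
        return False  # non-integer output is rejected, not a crash
    n = len(values)
    # Indices must be in range and must refer to two different elements.
    if not (1 <= i <= n and 1 <= j <= n) or i == j:
        return False
    return values[i - 1] + values[j - 1] == target


# Two different answers are both correct; comparing against a single
# reference output such as "1 4" would wrongly reject the second one.
values = [1, 3, 2, 4]
print(check_two_sum(values, 5, "1 4"))  # True: 1 + 4 == 5
print(check_two_sum(values, 5, "2 3"))  # True: 3 + 2 == 5
print(check_two_sum(values, 5, "1 2"))  # False: 1 + 3 != 5
```

A benchmark that only stores one expected output per test cannot grade such problems fairly, which is the gap that judging on the platform itself closes.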

CodeElo's three core elements: comprehensiveness, robustness and standardization

CodeElo is based on three key elements:

- Comprehensive problem selection. Problems are categorized by contest division, difficulty level, and algorithm tags to provide a comprehensive assessment.

- Robust evaluation. Submitted code is judged on the CodeForces platform itself, whose special judge mechanism ensures accurate verdicts; the benchmark therefore needs no access to hidden test cases and still receives reliable feedback.

- Standardized rating calculation. The Elo rating accounts for both code correctness and problem difficulty, and it penalizes incorrect submissions to reward high-quality solutions, making it a rigorous and effective tool for evaluating coding models.
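The article does not spell out CodeElo's exact formula. As a rough, simplified sketch of how an Elo-style rating can be read off a single contest, the code below follows the general Codeforces idea: with the standard Elo win probability, a contestant rated r has an expected rank of 1 plus the sum, over the other participants, of each one's probability of beating r, and the performance rating is the r at which this expected rank equals the actual rank, found by binary search. The function names, search bounds, and sample ratings are hypothetical, and CodeElo's real procedure (including how it combines several contests) may differ.

```python
def win_probability(r_a: float, r_b: float) -> float:
    """Standard Elo probability that a player rated r_a beats one rated r_b."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def expected_rank(r: float, others: list[float]) -> float:
    """Expected rank of a contestant rated r: 1 plus the expected number
    of other participants who finish ahead of them."""
    return 1.0 + sum(win_probability(r_i, r) for r_i in others)


def performance_rating(actual_rank: float, others: list[float],
                       lo: float = -1000.0, hi: float = 5000.0) -> float:
    """Bisect for the rating whose expected rank equals actual_rank.
    expected_rank is strictly decreasing in r, so bisection converges."""
    for _ in range(100):
        mid = (lo + hi) / 2
        if expected_rank(mid, others) > actual_rank:
            lo = mid  # predicted to place worse than observed: raise rating
        else:
            hi = mid  # predicted to place better than observed: lower rating
    return (lo + hi) / 2


# Hypothetical contest: finishing between a 1200- and a 1600-rated rival.
print(round(performance_rating(2, [1200.0, 1600.0])))  # 1400
```

In a real evaluation the rank would come from the contest standings, which already reflect the penalties for wrong submissions mentioned above.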

Test Results

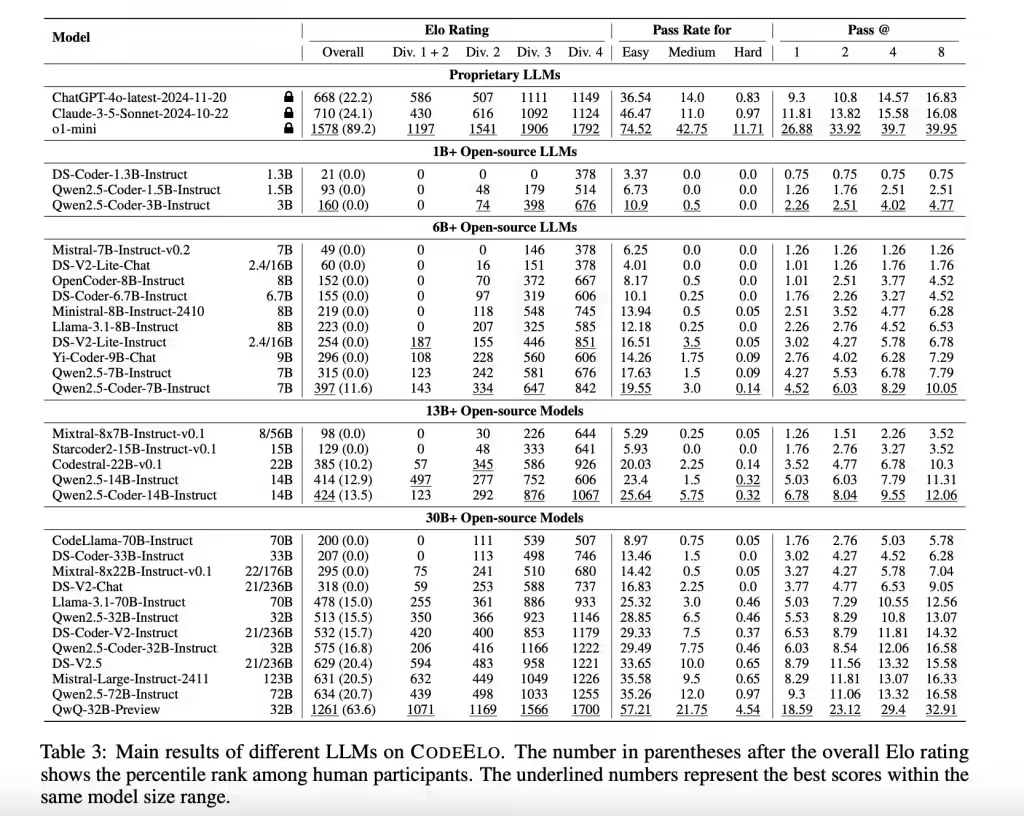

The team tested 30 open-source and 3 proprietary LLMs. OpenAI's o1-mini performed best, with an Elo rating of 1578, higher than about 90% of human participants; among open-source models, QwQ-32B-Preview ranked first with a rating of 1261.
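To put the gap between these two ratings in perspective: under the standard Elo model, a rating difference translates into an expected score (roughly, a win probability) via 1 / (1 + 10^((r_b - r_a) / 400)). The small sketch below plugs in only the two ratings reported above; the head-to-head reading is the generic Elo interpretation, offered for illustration, not a figure reported by CodeElo.

```python
def elo_expected_score(r_a: float, r_b: float) -> float:
    """Expected score of player A against player B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


o1_mini, qwq = 1578.0, 1261.0  # ratings reported in the article
p = elo_expected_score(o1_mini, qwq)
print(f"{p:.2f}")  # 0.86
```

A gap of about 317 points therefore corresponds to an expected score of roughly 0.86 for the higher-rated side, and the two expected scores always sum to 1.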

However, many models still struggled even with simple problems and typically ranked in the bottom 20% of human participants. The analysis showed that the models performed well in categories such as math and implementation but were weaker at dynamic programming and tree algorithms.

In addition, the models performed better when writing code in C++, which matches the language preference of competitive programmers. Together, these results highlight areas where LLMs still need improvement.