According to the Financial Times, a document purporting to be the minutes of an expert meeting on DeepSeek's release history and optimization direction has recently circulated in the industry. In response, DeepSeek said that the company has not authorized any personnel to participate in brokerage investor exchange meetings, that the so-called "DeepSeek experts" are not company personnel, and that the information exchanged is not true.

DeepSeek states that it has strict internal rules in place: employees are expressly prohibited from giving outside interviews and from participating in investor communications or other institutional information exchange meetings aimed at market investors. All related matters are subject to publicly disclosed information.

1AI noted that DeepSeek's official public account published a post on December 26 announcing the launch and simultaneous open-sourcing of the DeepSeek-V3 model. Users can log in to the official website, chat.deepseek.com, to talk to the latest V3 model.

DeepSeek-V3 is described as a 671-billion-parameter Mixture of Experts model (MoE, an architecture that uses multiple expert networks to partition the problem space into homogeneous regions) with 37 billion activated parameters, pre-trained on 14.8 trillion tokens.
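To make the gap between total and activated parameters concrete, here is a minimal sketch of generic top-k softmax gating, the common way an MoE layer sends each token to only a few experts. It is an illustration under stated assumptions, not DeepSeek-V3's actual routing, which this article does not describe. The function names, the eight toy experts, and the gate scores are all hypothetical; only the 671 billion and 37 billion figures come from the text above.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so they sum to 1 over the selected experts.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy setup: 8 experts, each a simple scalar function.
experts = [lambda x, a=a: a * x for a in range(1, 9)]
# Hypothetical gate scores for a single token.
gate_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 37e9 / 671e9

print("selected experts:", [i for i, _ in selected])
print("active parameter share: {:.1%}".format(active_fraction))
```

With the quoted figures, roughly 5.5% of the model's parameters are used for any single token, which is why an MoE model can be far cheaper to run than a dense model of the same total size.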

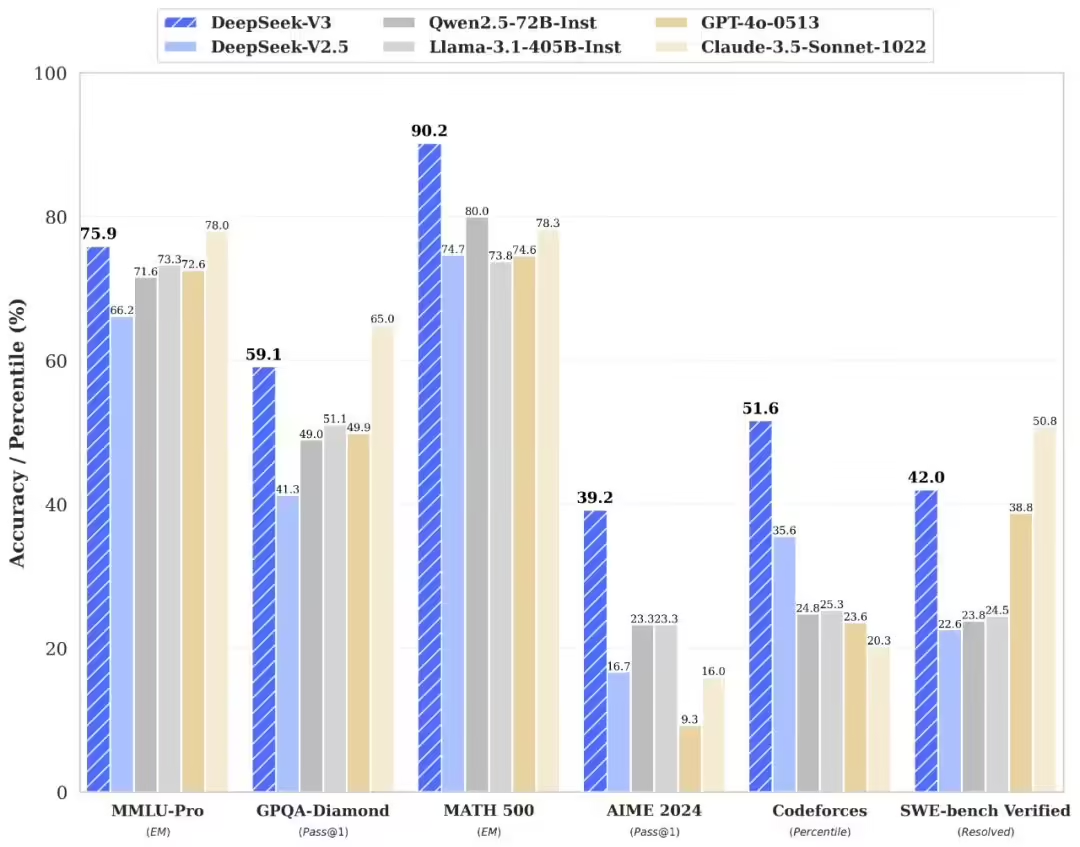

It outperforms other open-source models such as Qwen2.5-72B and Llama-3.1-405B in many evaluations, and its performance is on par with the world's top closed-source models such as GPT-4o and Claude-3.5-Sonnet.