The Zhipu tech team's official account published a blog post yesterday (December 26) announcing the open-source release of CogAgent-9B-20241220, the base model behind GLM-PC. Built on GLM-4V-9B, the model is designed specifically for agent tasks.

Note: The model takes only screenshots as input (no textual representations such as HTML) and, given any user-specified task together with its history of previous operations, predicts the next GUI action.
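This screenshot-only workflow amounts to a simple perceive-predict-act loop. The Python sketch below illustrates it under stated assumptions: `capture`, `predict`, and `execute` are hypothetical callables standing in for a screen grabber, a CogAgent inference call, and an input driver, and the `"END"` stop signal is invented for illustration. None of these names are part of the released model's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class AgentState:
    task: str                                         # user-specified task
    history: List[str] = field(default_factory=list)  # natural-language log of past actions

def run_agent(
    state: AgentState,
    capture: Callable[[], bytes],                     # hypothetical: returns screenshot bytes
    predict: Callable[[str, List[str], bytes], str],  # hypothetical: wraps a model call
    execute: Callable[[str], None],                   # hypothetical: performs the action
    max_steps: int = 10,
) -> List[str]:
    """Perceive-predict-act loop driven only by screenshots (no HTML input)."""
    for _ in range(max_steps):
        screenshot = capture()
        action = predict(state.task, state.history, screenshot)
        if action == "END":  # hypothetical stop signal
            break
        execute(action)
        state.history.append(action)  # the history conditions the next prediction
    return state.history

# Usage with stub callables in place of a real screen and model.
scripted = iter(["Click the search box", "Type 'weather'", "END"])
log = run_agent(
    AgentState("Check the weather"),
    capture=lambda: b"",
    predict=lambda task, history, shot: next(scripted),
    execute=lambda action: None,
)
print(log)
```

The `max_steps` cap is a defensive choice for the sketch, so a model that never signals completion cannot loop forever.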

Because screenshots and GUI operations are universal, CogAgent can be applied to a wide range of GUI-based interaction scenarios, such as personal computers, mobile phones, and in-vehicle devices.

Compared with the first CogAgent model, open-sourced in December 2023, CogAgent-9B-20241220 brings significant improvements in GUI perception, reasoning and prediction accuracy, action space completeness, task universality, and generalization, and it supports bilingual (Chinese and English) screenshots and language interaction.

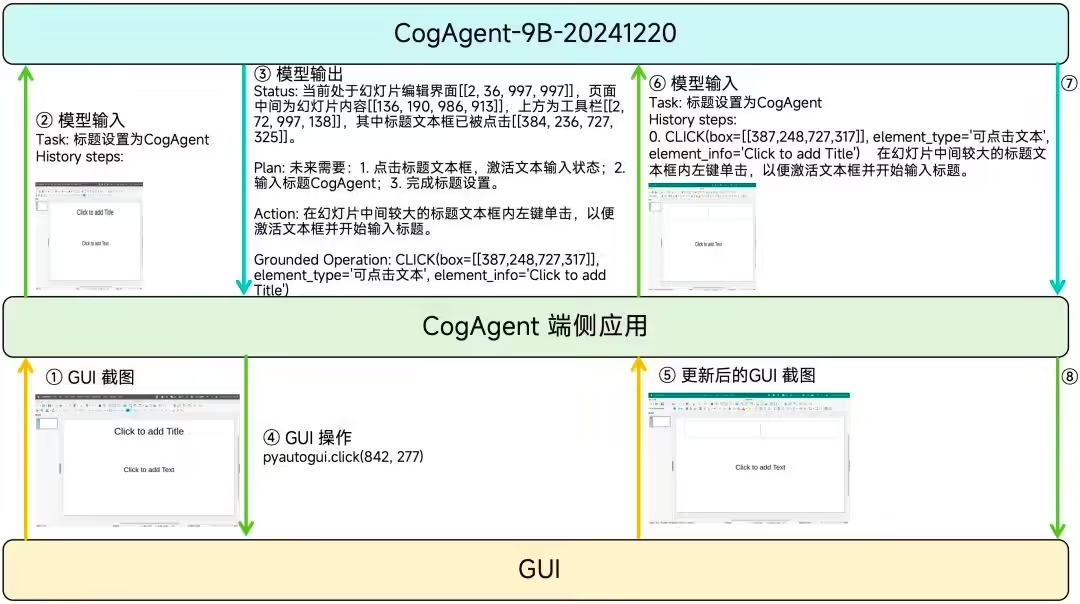

The input to CogAgent consists of only three parts: the user's natural-language instruction, the history of executed actions, and a GUI screenshot. No textual representation of the layout and no additional set-of-marks annotations are required.
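As a rough illustration of that three-part input, the sketch below packages an instruction, an action history, and raw screenshot bytes into a single request. The `Task:`/`History steps:` text template and the dictionary keys are assumptions made for this example, not CogAgent's documented prompt format.

```python
import base64
from typing import Dict, List

def build_model_input(task: str, history: List[str], screenshot_png: bytes) -> Dict[str, str]:
    """Assemble the three inputs: instruction, action history, raw screenshot.

    The text layout is a hypothetical template, not CogAgent's official prompt.
    """
    history_text = "\n".join(f"{i}. {step}" for i, step in enumerate(history, start=1)) or "(none)"
    prompt = f"Task: {task}\nHistory steps:\n{history_text}"
    return {
        "text": prompt,
        # The screenshot is passed as pixels only: no HTML, accessibility tree,
        # or set-of-marks overlay is added to it.
        "image_base64": base64.b64encode(screenshot_png).decode("ascii"),
    }

request = build_model_input("Open settings", ["Click the Start menu"], b"\x89PNG")
print(request["text"])
```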

Its output consists of four parts:

- Thinking process (Status & Plan): CogAgent explicitly outputs its reasoning as it interprets the GUI screenshot and decides what to do next, covering both the current status and the plan. Whether this part is output can be controlled by parameters.

- Natural-language description of the next action (Action): This description is appended to the history of executed actions, helping the model keep track of which steps have already been performed.

- Structured description of the next action (Grounded Operation): CogAgent describes the next action and its parameters in a structured, function-call-like form, making it easy for the on-device application to parse and execute the model output. Its action space covers two categories: GUI operations (basic actions such as left-click and text input) and human-like behaviors (advanced actions such as launching an application or invoking a language model).

- Sensitivity judgment for the next action: Actions are classified as either "general actions" or "sensitive actions", the latter being actions that may have irreversible consequences, such as clicking the "Send" button in a "Send Mail" task.
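Because the output is structured, a client can split it into its parts and hold sensitive actions for user confirmation. The sketch below assumes a hypothetical text layout (`Status:`, `Plan:`, `Action:`, and `Grounded Operation:` lines, with a `<<SENSITIVE>>` tag marking sensitive actions) and an illustrative `CLICK(box=..., element_info=...)` call; CogAgent's actual output format and operation names may differ.

```python
import ast
import re
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

@dataclass
class AgentStep:
    status: Optional[str]    # None when the thinking process is switched off
    plan: Optional[str]
    action: str              # natural-language description, appended to history
    op_name: str             # e.g. "CLICK" (hypothetical operation name)
    op_args: Dict[str, Any]  # keyword arguments of the grounded operation
    sensitive: bool          # True for possibly irreversible actions

# [ \t]* (not \s*) so an empty field never swallows the following line.
_FIELD = re.compile(r"^(Status|Plan|Action|Grounded Operation):[ \t]*(.*)$", re.MULTILINE)
_CALL = re.compile(r"^(\w+)\((.*)\)$", re.DOTALL)

def parse_step(text: str) -> AgentStep:
    """Split one model response into its four parts (assumed layout)."""
    fields = dict(_FIELD.findall(text))
    if "Action" not in fields or "Grounded Operation" not in fields:
        raise ValueError("response lacks an Action or Grounded Operation line")
    match = _CALL.match(fields["Grounded Operation"].strip())
    if match is None:
        raise ValueError("Grounded Operation is not a function-call expression")
    name, arg_src = match.groups()
    # Parse "key=value, ..." as keyword arguments, accepting literals only (no eval).
    call = ast.parse(f"f({arg_src})", mode="eval").body
    args = {kw.arg: ast.literal_eval(kw.value) for kw in call.keywords}
    return AgentStep(
        status=fields.get("Status"),
        plan=fields.get("Plan"),
        action=fields["Action"],
        op_name=name,
        op_args=args,
        sensitive="<<SENSITIVE>>" in text,
    )

def should_execute(step: AgentStep, confirm: Callable[[AgentStep], bool]) -> bool:
    """Run general actions directly; ask the user before sensitive ones."""
    return not step.sensitive or confirm(step)

reply = """Status: The email draft is complete.
Plan: Send the email.
Action: Click the Send button.
Grounded Operation: CLICK(box=[[352,102,786,139]], element_info='Send')
<<SENSITIVE>>"""
step = parse_step(reply)
print(step.op_name, step.op_args, should_execute(step, confirm=lambda s: False))
```

Using `ast.literal_eval` rather than `eval` keeps the parser from executing arbitrary code if the model emits something unexpected.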

CogAgent-9B-20241220 was evaluated on datasets including Screenspot, OmniAct, CogAgentBench-basic-cn, and OSWorld, and compared against GPT-4o-20240806, Claude-3.5-Sonnet, Qwen2-VL, ShowUI, SeeClick, and other models.

The results show that CogAgent achieves leading scores on several of these datasets, demonstrating strong performance in the GUI agent domain.