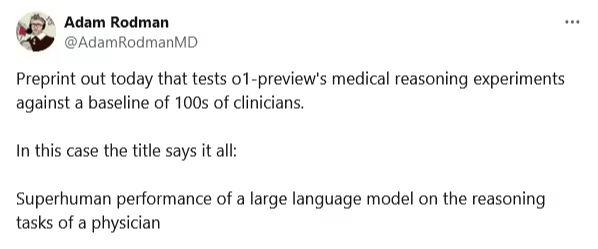

Dec. 25, 2024 - A team of researchers from Harvard Medical School and Stanford University has conducted an in-depth evaluation of OpenAI's o1-preview model on medical diagnosis and found it to be better than human doctors at diagnosing tricky medical cases.

According to the study, o1-preview correctly diagnosed 78.3% of test cases and was even more accurate, at 88.6%, in a comparison test on 70 specific cases, significantly better than its predecessor, GPT-4, at 72.9%.

On R-IDEA, a standardized scale for assessing the quality of medical reasoning, o1-preview achieved perfect scores in 78 of 80 cases. In comparison, experienced physicians achieved perfect scores in only 28 cases, and residents in only 16.

In complex cases designed by 25 experts, o1-preview scored as high as 86%, more than twice as high as physicians using GPT-4 (41%) and those using traditional tools (34%).

The researchers acknowledged the limitations of the test. Some of the test cases may have been included in o1-preview's training data, and the test focused primarily on the system working alone, without adequately considering scenarios in which it would work together with a human physician. In addition, the diagnostic tests suggested by o1-preview are costly, which limits their practical application.