TechCrunch, a technology media outlet, published a blog post yesterday (December 23) arguing that although OpenAI's o3 model has achieved impressive results on benchmarks such as ARC-AGI, the high computational cost behind those results makes it difficult to deploy widely in practical applications in the short term.

o3 Performance

One of o3's new features is adjustable inference time, offered at three compute levels: low, medium, and high. The higher the compute level, the better o3 performs on tasks.

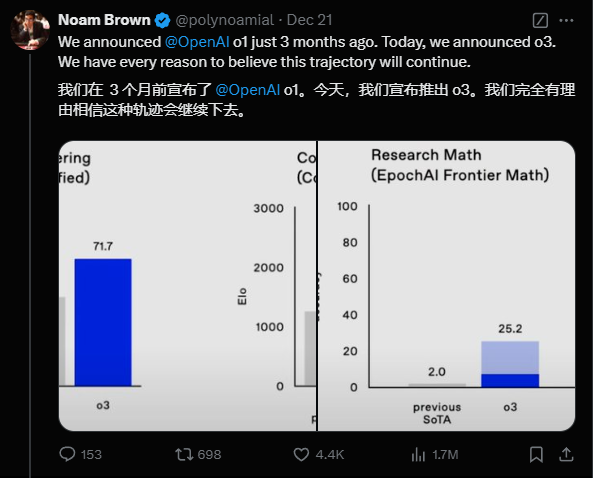

o3 is a major step for OpenAI toward artificial general intelligence (AGI). On the ARC-AGI benchmark, o3 scored 87.5% in the high-compute setting and 75.7% in the low-compute setting, roughly tripling o1's performance.

On Epoch AI's FrontierMath benchmark, o3 solved 25.2% of the problems (no other model exceeded 2%), setting a new record.

The high cost of o3

François Chollet, creator of the ARC-AGI benchmark, wrote in a blog post that OpenAI's o3 model, while an important breakthrough in AI, is very expensive to run.

According to the performance charts from the ARC-AGI test, the high-scoring version of o3 used more than $1,000 (currently about RMB 7,303) worth of compute per task, whereas o1 used about $5 per task and o1-mini only a few cents.
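To make the gap between these per-task figures concrete, here is a minimal Python sketch of the arithmetic. It uses only the dollar amounts quoted above; because "more than $1,000" is a lower bound, the resulting ratio is a lower bound as well. The exchange rate is an assumption derived from the article's own conversion ($10,000 ≈ RMB 73,033), and the constant and function names are illustrative.

```python
# Per-task compute cost figures quoted in the article (USD).
# ">$1,000" is a lower bound, so any ratio computed from it is a lower bound too.
O3_HIGH_PER_TASK_USD = 1000.0
O1_PER_TASK_USD = 5.0

# Assumed exchange rate, implied by the article's conversion of $10,000 to RMB 73,033.
USD_TO_RMB = 73_033 / 10_000


def cost_ratio(a_usd: float, b_usd: float) -> float:
    """Return how many times more expensive cost a is than cost b."""
    return a_usd / b_usd


def usd_to_rmb(usd: float) -> float:
    """Convert USD to RMB at the rate implied by the article."""
    return usd * USD_TO_RMB


if __name__ == "__main__":
    ratio = cost_ratio(O3_HIGH_PER_TASK_USD, O1_PER_TASK_USD)
    print(f"o3 (high compute) vs o1, per task: at least {ratio:.0f}x")
    print(f"o3 (high compute), per task: at least RMB {usd_to_rmb(O3_HIGH_PER_TASK_USD):,.0f}")
```

On these figures, a single high-compute o3 task costs at least 200 times as much as an o1 task.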

This means that to reach its score of nearly 88%, OpenAI consumed more than 170 times the compute of the low-compute setting. Running the high-compute version of o3 over the entire test cost more than $10,000 (currently about RMB 73,033), so only well-funded organizations and individuals can afford to use the o3 model.
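A rough way to see the diminishing returns is to compare the score gain with the extra compute. The Python sketch below assumes that the "more than 170 times" figure compares the high-compute setting with the low-compute setting, and it rounds that figure down to exactly 170. The "points per doubling" measure is a hypothetical illustration introduced here, not a metric used by ARC-AGI or OpenAI.

```python
import math

# ARC-AGI scores quoted in the article (percent).
HIGH_COMPUTE_SCORE = 87.5
LOW_COMPUTE_SCORE = 75.7

# Assumption: compute of the high setting relative to the low setting,
# taken from "more than 170 times" and rounded down to 170.
COMPUTE_MULTIPLE = 170.0


def score_gain(high: float, low: float) -> float:
    """Percentage-point improvement of the high setting over the low one."""
    return high - low


def points_per_doubling(gain: float, multiple: float) -> float:
    """Hypothetical metric: score points gained per doubling of compute."""
    return gain / math.log2(multiple)


if __name__ == "__main__":
    gain = score_gain(HIGH_COMPUTE_SCORE, LOW_COMPUTE_SCORE)
    doublings = math.log2(COMPUTE_MULTIPLE)
    print(f"Score gain: {gain:.1f} points for ~{COMPUTE_MULTIPLE:.0f}x compute")
    print(f"That is about {doublings:.1f} doublings of compute")
    print(f"Roughly {points_per_doubling(gain, COMPUTE_MULTIPLE):.2f} points per doubling")
```

Under these assumptions, roughly 11.8 extra points require about seven to eight doublings of compute, or around 1.6 points per doubling.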

The high computational cost of o3 makes it better suited to complex problems, such as long-term strategic decisions, than to small, everyday ones. More efficient and more cost-effective AI inference chips may be the key to lowering the cost of using o3 in the future.