On December 18-19, the Volcano Engine Winter FORCE conference was held in Shanghai. Its biggest highlight was undoubtedly the full-line upgrade of the Doubao large model family and the release of the new Doubao visual understanding model.

The Doubao visual understanding model offers industry-leading content recognition, comprehension and reasoning, and finer-grained visual description. It effectively gives a large model a pair of eyes, letting it perceive and understand the real world much as humans do. This expands both the ways people can interact with large models and the scenarios where those models can be applied.

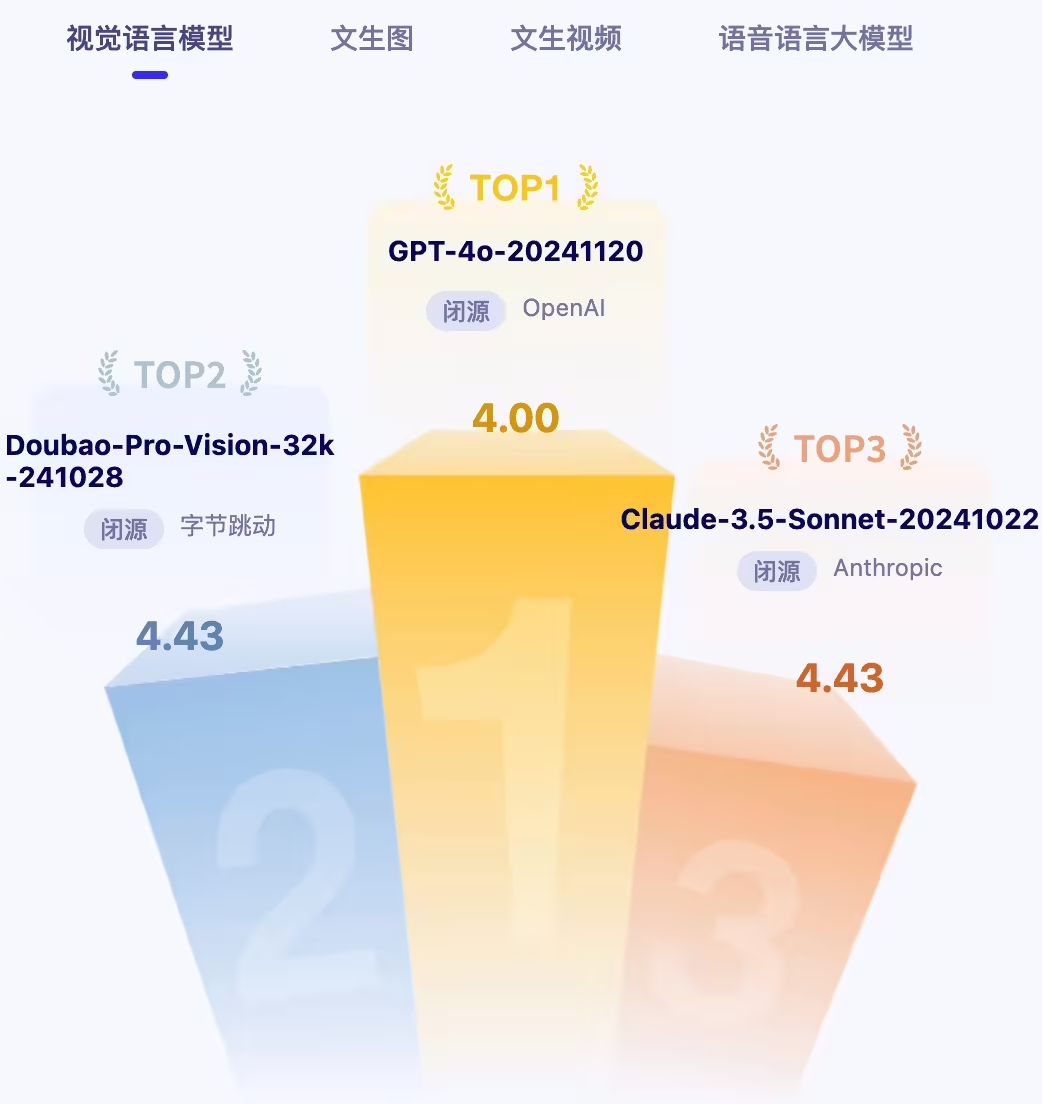

Right after the Volcano Engine FORCE conference, the Beijing Academy of Artificial Intelligence (BAAI) released the results of its latest large model evaluation. In the vision-language model evaluation, the Doubao visual understanding model ranked second in the world, behind only GPT-4o. It performed especially well on Chinese general knowledge and text recognition, where it held a clear advantage over foreign models.

Just as the evolution of eyes was a key factor in the explosion of species, giving large models eyes through visual understanding will lay the foundation for a rich and diverse AI ecosystem.

Doubao visual understanding model: industry-leading capabilities

Having looked at why visual understanding matters for large models, let us turn to the Doubao visual understanding model that Volcano Engine has just released. It leads in every respect and is, in effect, exploring a new ceiling for visual understanding models.

First, the Doubao visual understanding model has stronger content recognition. It can not only recognize the objects, shapes, categories, and elements in an image, but also understand the relationships between objects, their spatial layout, and the overall meaning of the scene.

In a demonstration at the launch event, for example, the model identified which animal had cast a shadow from the shadow's shape alone. Shown a picture of light streaming beautifully through mist, it recognized the Tyndall effect and explained the principle behind it. Likewise, everyday objects we know little about can be photographed and identified by the model.

The model also has industry-leading OCR capabilities, accurately extracting and understanding the text in images: text in plain-text images, text in everyday photos, and content in table images.

It also follows instructions better when recognizing visual content and has a stronger understanding of traditional Chinese cultural content.

Recognizing visual content is only the first step; the model also brings stronger understanding and reasoning. It handles complex image reasoning tasks with ease and performs better in demanding scenarios such as table images, math problems, and code images. It can answer questions based on what it sees, summarize content, and carry out mathematical, logical, and code reasoning.

For instance, it can extract content from many types of charts quickly and accurately, and it can follow prompts precisely to output the results in flexible formats. This makes key information easy to obtain and improves the efficiency of chart analysis.

Beyond that, the model offers finer-grained visual description. It can describe what an image shows in greater detail and, based on the image content, write in a range of genres such as product introductions, promotional articles, video scripts, stories, and poems.

For example, a company that has made a creative product and wants to send it to customers can have the model write a warm greeting directly from a picture of the product.

It can also describe details of a picture as instructed. Given an image of a girl releasing a Kongming lantern at night, you can circle the lanterns in the picture and ask the model, "What kind of lanterns are in the circled areas? When were they released in ancient times?" Doubao then gives an accurate answer along with background explanation.

In another example, it can extract multi-dimensional information from several photos of food, quickly and accurately analyze the characteristics of the dishes, offer detailed insight into the restaurant's atmosphere and style, service quality, and other aspects, and then help the user write a review of the food and the restaurant.

It can also help write WeChat Moments posts. Drawing inspiration jointly from multiple pictures, it captures the core emotions and key elements the user wants to express, understands the user's requirements for style, tone, word count, and so on, and then writes posts in a variety of styles.

Doubao large model family upgraded across the board, empowering a wide range of industries

The Doubao visual understanding model has clearly reached an impressive level of technical maturity, innovation, and practical usability. Its industry-leading position ultimately stems from ByteDance's determined, large-scale, all-round investment in foundation models.

ByteDance's self-developed Doubao large model was officially released in May this year at the Volcano Engine Spring FORCE conference. Before that, it had gone through a year of iteration and market validation and was offered to external customers through Volcano Engine. Since then, the Doubao model has iterated rapidly and now forms the industry's most comprehensive large model family, including the general model Pro, the general model Lite, a speech recognition model, a speech synthesis model, a text-to-image model, and more. With the new visual understanding model added, each model serves its own business types and application scenarios, giving users a wide range of choices.

At the Volcano Engine Winter FORCE conference, the Doubao large model family was upgraded as well. The Doubao general model Pro now fully matches GPT-4o, at only 1/8 of its price. The music model has been upgraded from generating a simple 60-second structure to generating a complete 3-minute piece. Version 2.1 of the text-to-image model is the first in the industry to accurately render Chinese characters and to support one-sentence image editing, and it has already been integrated into Jimeng AI and the Doubao app.

The Doubao 3D generation model also debuted at the conference. Combined with Volcano Engine's digital twin platform veOmniverse, it can efficiently handle intelligent training, data synthesis, and digital asset production, forming a physical-world simulator that supports AIGC creation.

ByteDance also announced at the conference that version 1.5 of the Doubao video generation model, with longer video generation, will launch in spring 2025, and that the Doubao end-to-end real-time voice model will go online soon, unlocking new capabilities such as multi-character voice performance and dialect conversion.

"Although the Doubao model was released relatively late, it has been evolving rapidly and is now one of the most comprehensive and technologically advanced large models in China," Volcano Engine president Tan Dai said at the conference.

Large model products need more than large-scale, intensive investment of technology and resources on the company side; they also need matching usage volume on the market side.

As Tan Dai once said, "Only large usage volume can polish a good model." After all, only large-scale usage can truly close the commercial loop, and it also supplies a steady stream of data for the model's iterative upgrades.

According to data presented at this Winter FORCE conference, as of mid-December the average daily token usage of the Doubao general model had exceeded 4 trillion, a 33-fold increase since its initial release seven months earlier.

This shows that the Doubao model's capabilities are being thoroughly validated by the market and that large model applications are rapidly penetrating a range of industries.

Doubao is also ahead of the industry in building an ecosystem of application scenarios. Through co-creation with many industry customers, the Doubao model now serves fields including smart devices, automotive, finance, consumer goods, and the Internet.

According to the latest figures, Doubao has partnered with 80% of mainstream automobile brands and has been integrated into a range of smartphones, PCs, and other smart devices, covering about 300 million devices. Calls to the Doubao model from smart devices have grown 100-fold in half a year.

In enterprise productivity scenarios, Doubao is also popular with business customers. Over the past three months, its call volume grew 39-fold in information processing, 16-fold in customer service and sales, 13-fold in hardware devices, and 9-fold in AI tools, with significant growth in learning and education as well.

For example, Xiaomi's Xiao Ai voice assistant works with the Doubao model to improve its voice capabilities, knowledge base, and performance efficiency; ASUS's a Dou series laptops work with Doubao to offer the Dou Ding AI assistant application; and China Merchants Bank has used Coze and the Doubao model to build a "Palm Life" bot that recommends restaurants and discounted stores and a "Wealth Watch" bot that summarizes market quotes.

With the launch of the visual understanding model, the Doubao large model family is expected to open up an even wider range of application areas.

Finally, Volcano Engine also upgraded three platform products at the conference, Volcano Ark, Coze, and HiAgent, to help enterprises build their own AI capability centers and develop AI applications efficiently.

Among these, Volcano Ark released a large model memory solution and introduced prefix cache and session cache APIs to reduce latency and cost. It also added full-stack AI search, with services such as scenario-based search-and-recommendation integration and integration of enterprise private-domain information.

Conclusion

According to a McKinsey report, the value-creation potential of large models is enormous: by 2030 they are expected to drive 49 trillion RMB of economic growth worldwide. Today, from technological innovation to commercialization, large models are already powering our daily work and life and the AI transformation of industries across the board.

As Tan Dai put it:

This is a year in which large models are advancing at high speed. When you see a train traveling at high speed, the most important thing is to make sure you get on board. With AI cloud native and the Doubao large model family, Volcano Engine wants to help organizations succeed at AI innovation and sail into a better future.