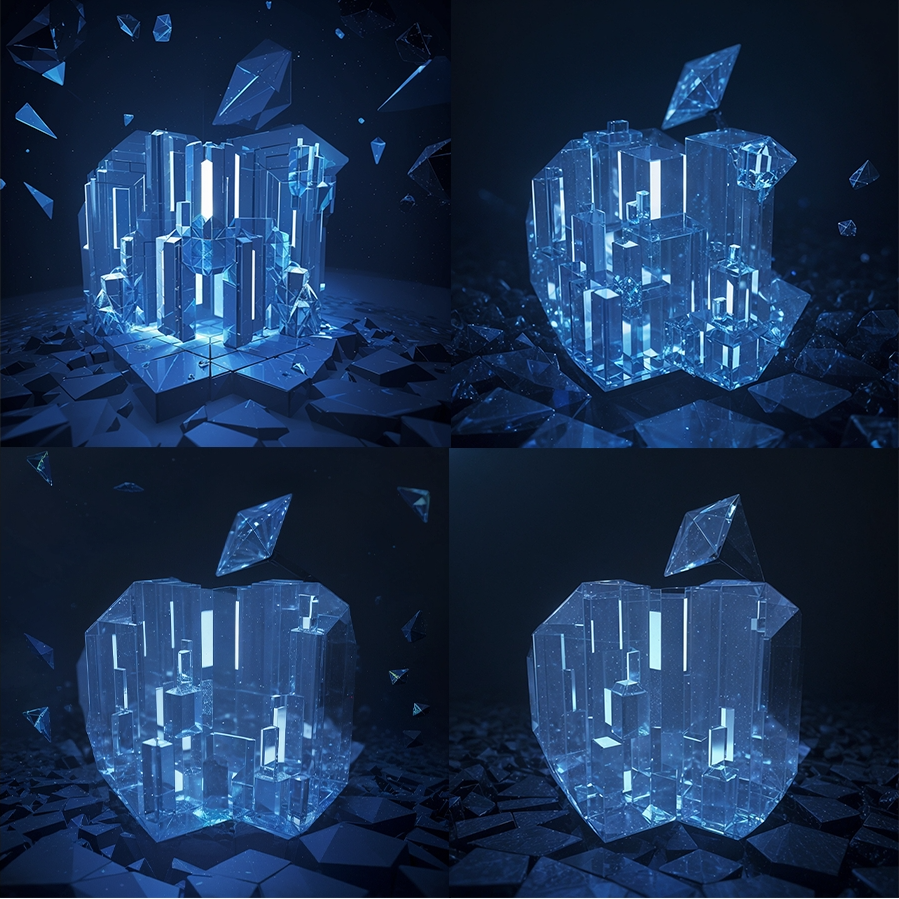

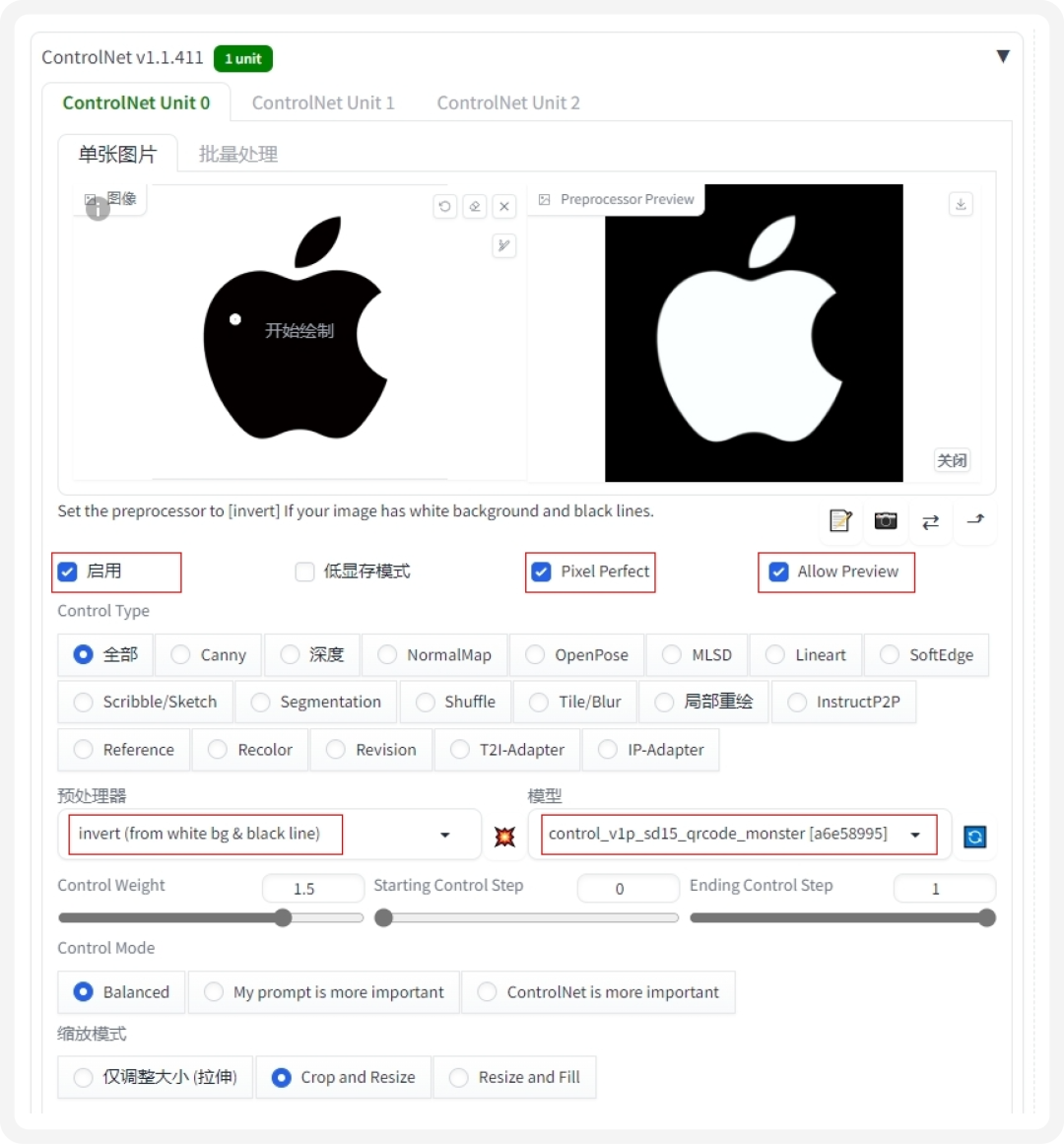

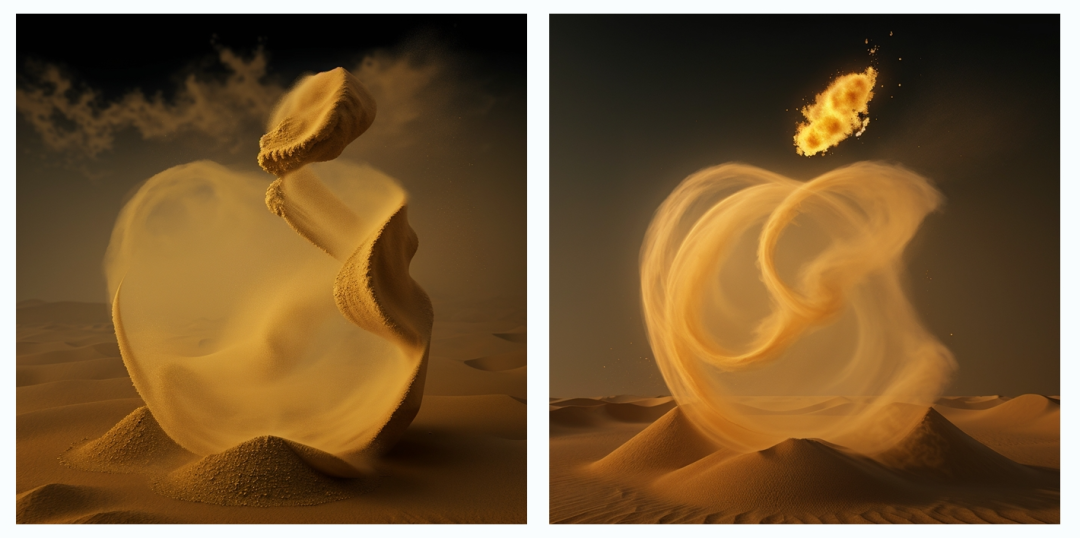

With the basic environment set up, the next stage is preparing the source material. Prepare the original brand symbol you want to extend visually, along with the prompt keywords for the visual style you are aiming for.

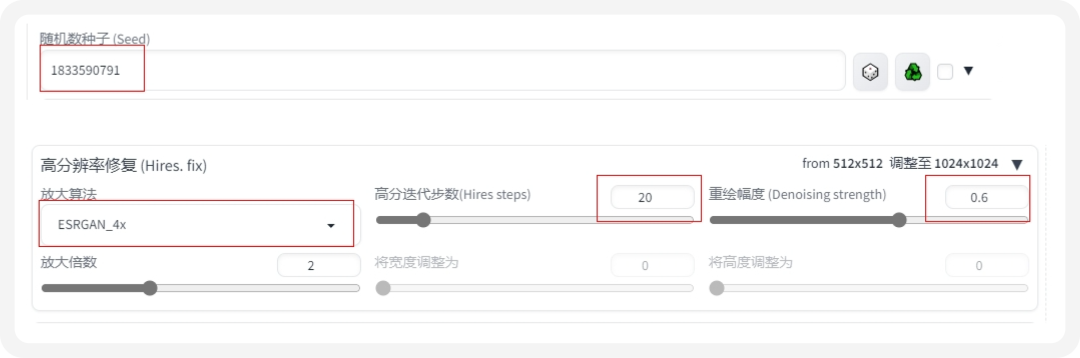

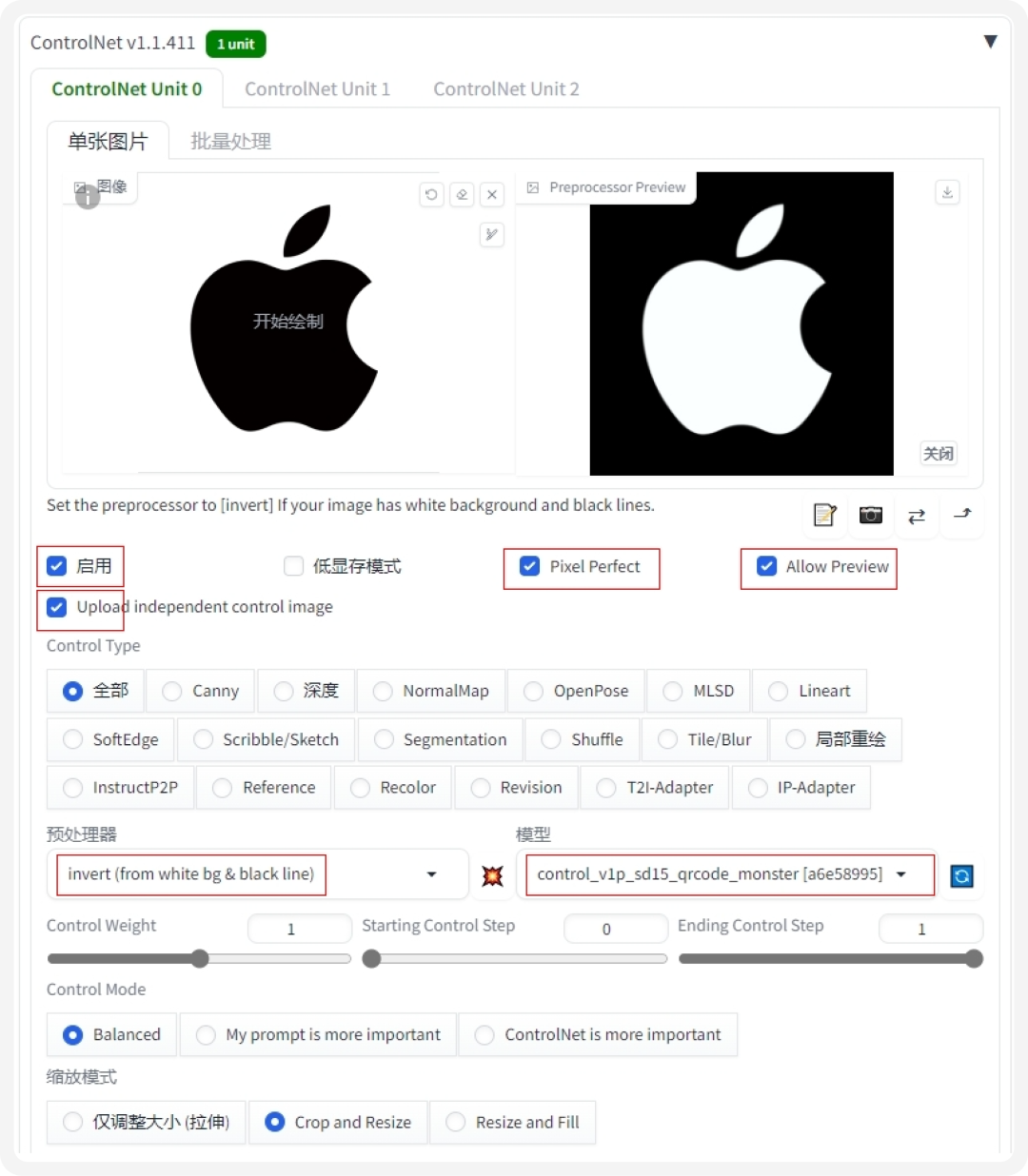

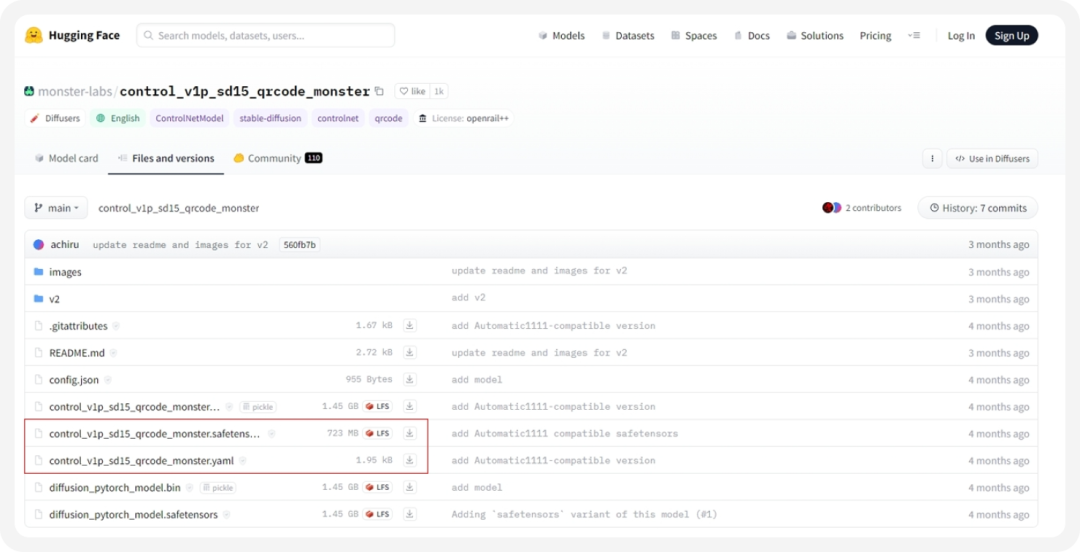

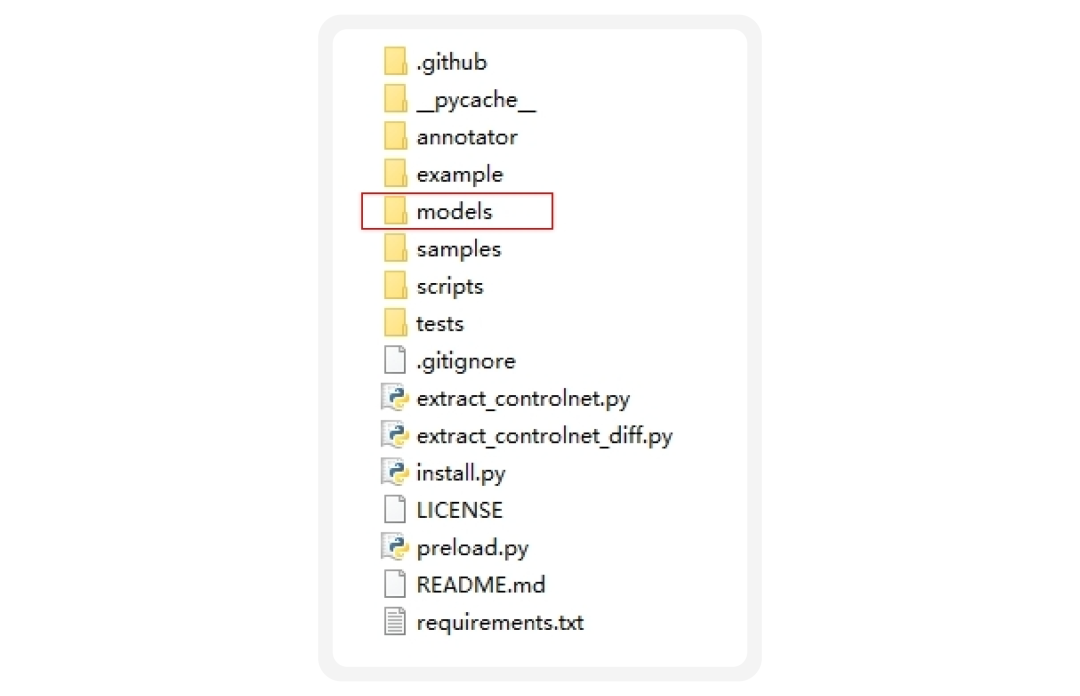

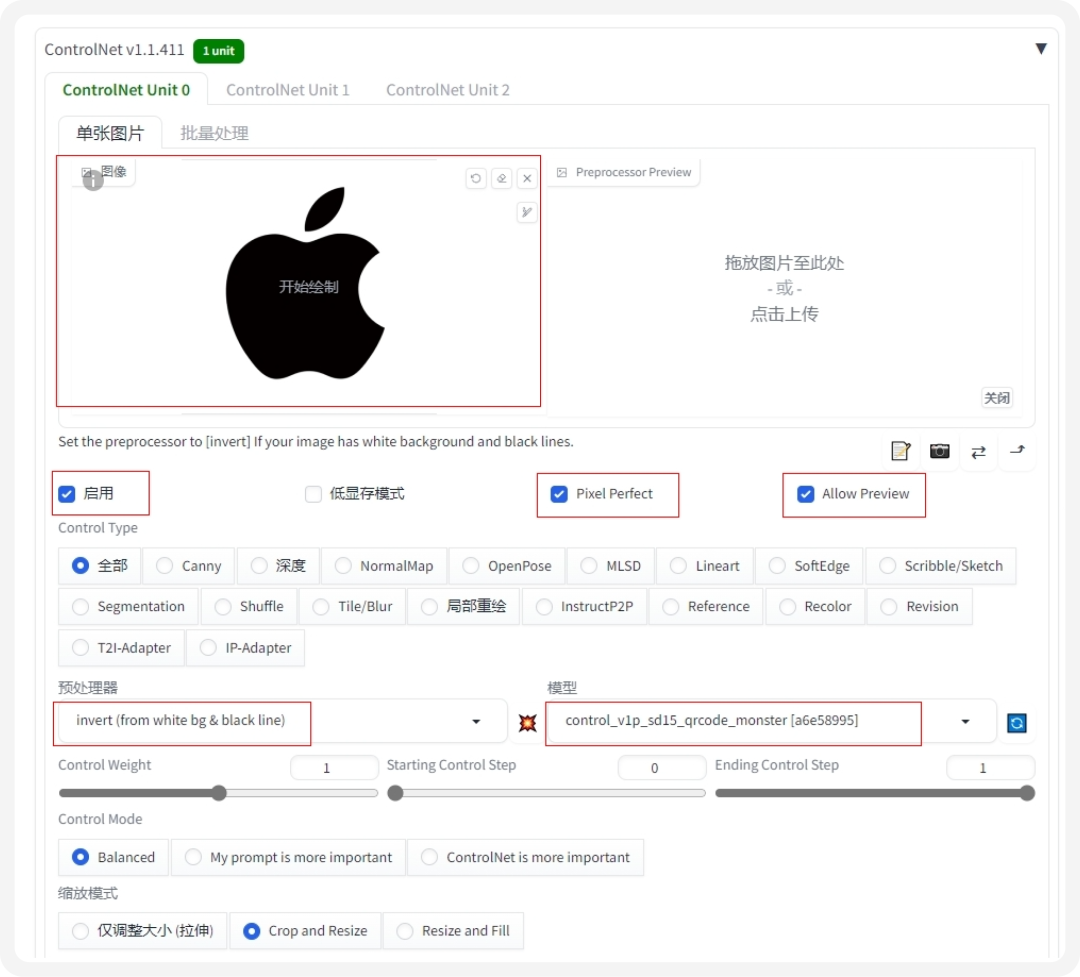

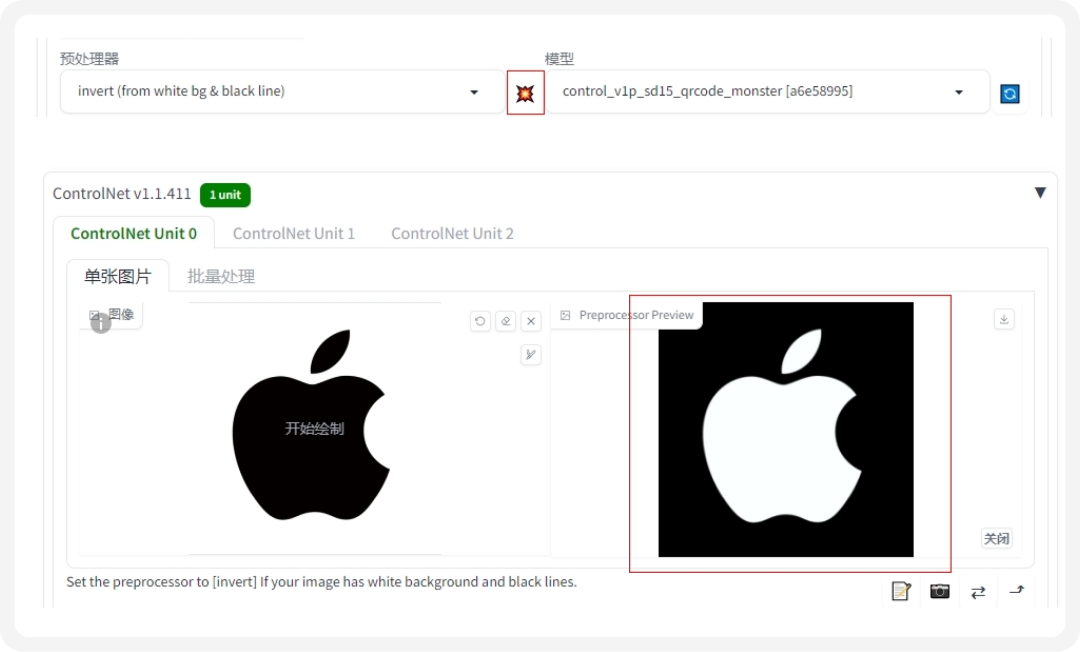

Taking the Apple logo as an example, the source image used here is 512x512 pixels; the size can be adjusted to suit your needs. To make it easier for ControlNet to recognize the control structure, convert the material to uniform black and white.
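If you prefer to prepare the material with a script rather than an image editor, the black-and-white step can be sketched in Python as below. This is only a minimal sketch under assumptions: it relies on the Pillow library, the names `binarize` and `prepare_material` and the threshold of 128 are illustrative choices that do not come from the original workflow, and the file names are placeholders.

```python
def binarize(gray_bytes, threshold=128):
    """Map each 0-255 grayscale value to pure black (0) or pure white (255)."""
    return bytes(255 if value >= threshold else 0 for value in gray_bytes)


def prepare_material(src_path, dst_path, size=(512, 512), threshold=128):
    """Save a resized, strictly black-and-white copy of a logo image.

    Assumption: Pillow is installed. It is imported inside the function so
    that binarize() can be used on raw grayscale bytes without it.
    """
    from PIL import Image

    # Convert to 8-bit grayscale ("L" mode stores one byte per pixel), then resize.
    gray = Image.open(src_path).convert("L").resize(size)
    # Threshold every pixel and rebuild an image of the same size.
    black_white = Image.frombytes("L", gray.size, binarize(gray.tobytes(), threshold))
    black_white.save(dst_path)


# Example call (placeholder file names):
# prepare_material("logo.png", "logo_bw.png")
```

Adjust `threshold` if thin strokes of the logo disappear or the edges look ragged after conversion.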

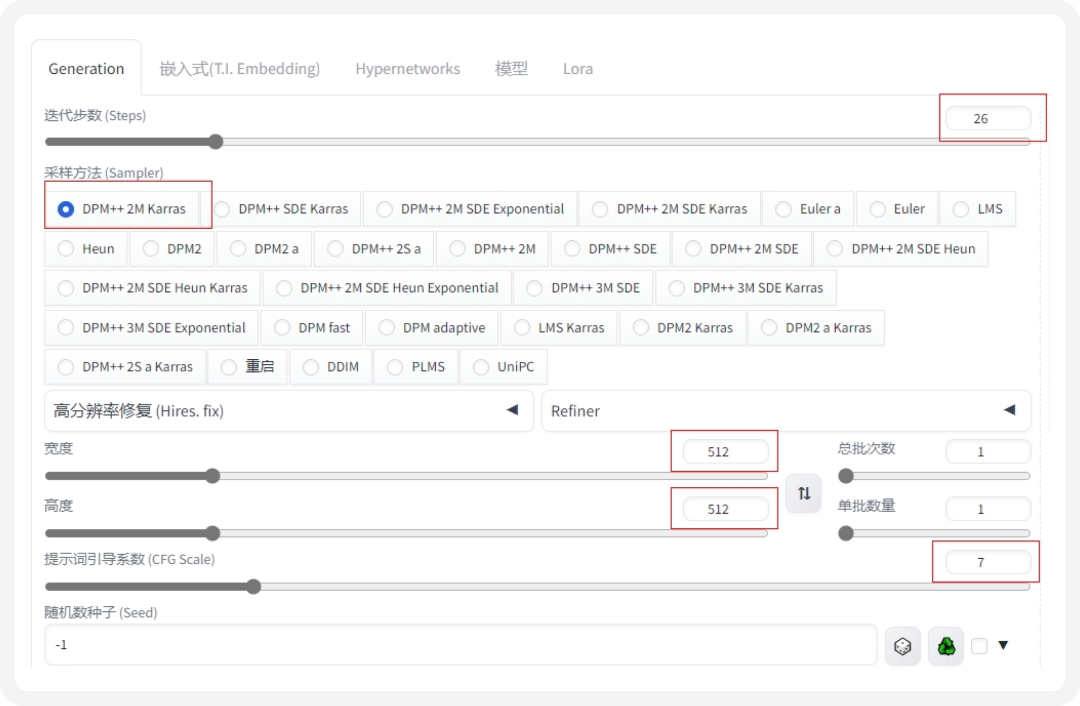

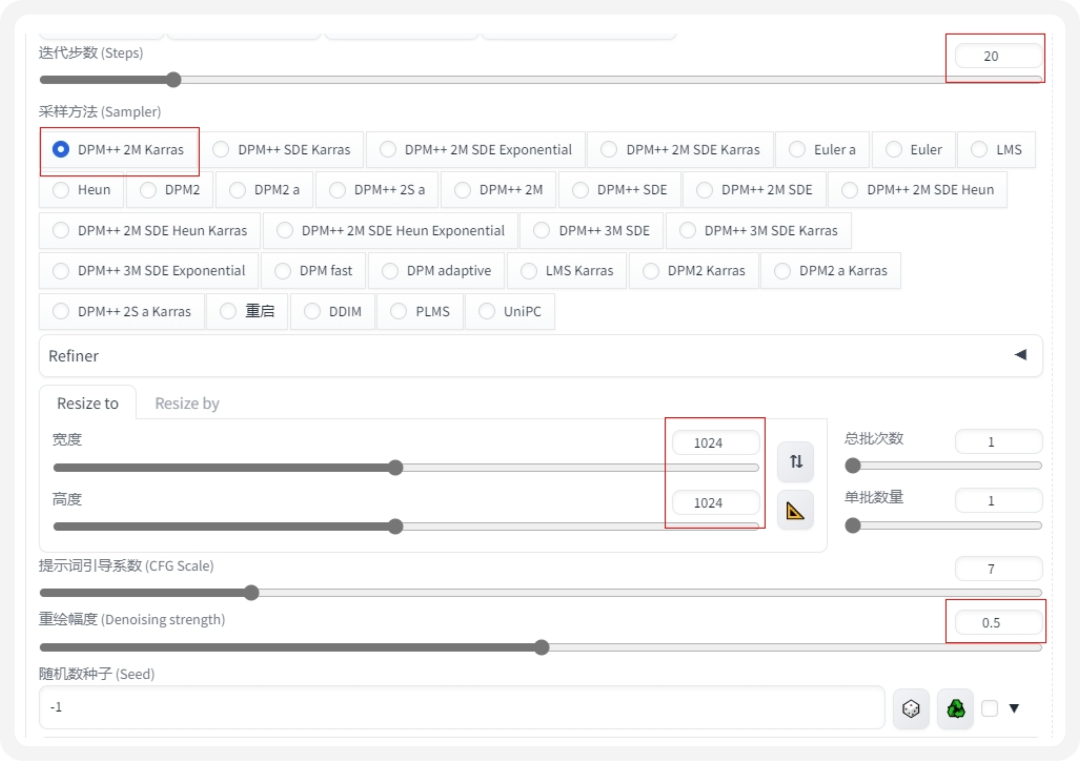

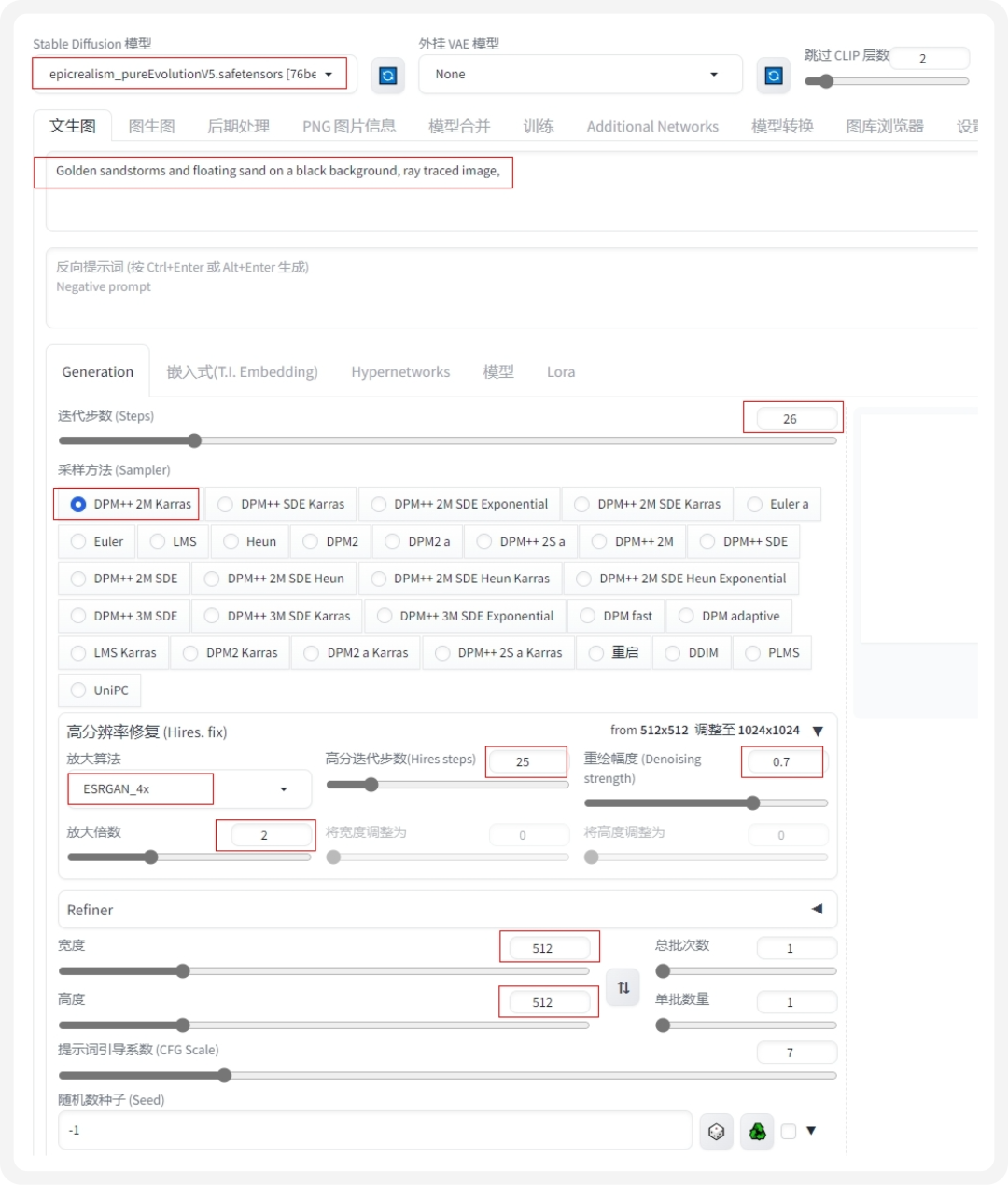

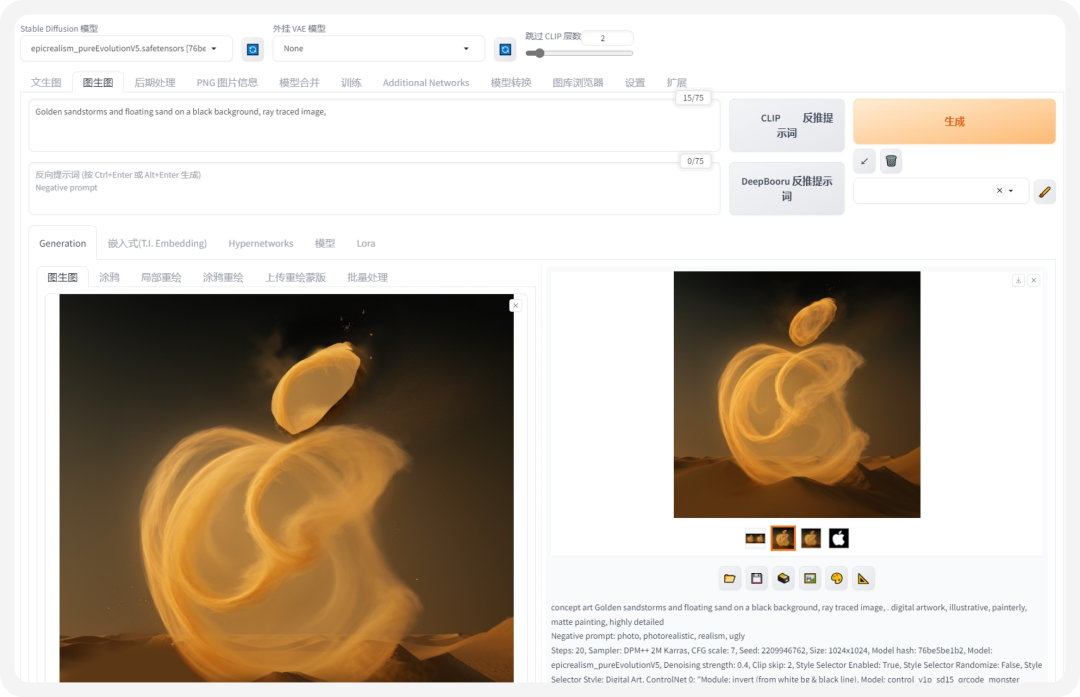

Here, epicrealism_pureEvolutionV5 is used as the example Stable Diffusion model. If this model is not available, you can choose any other realistic model.

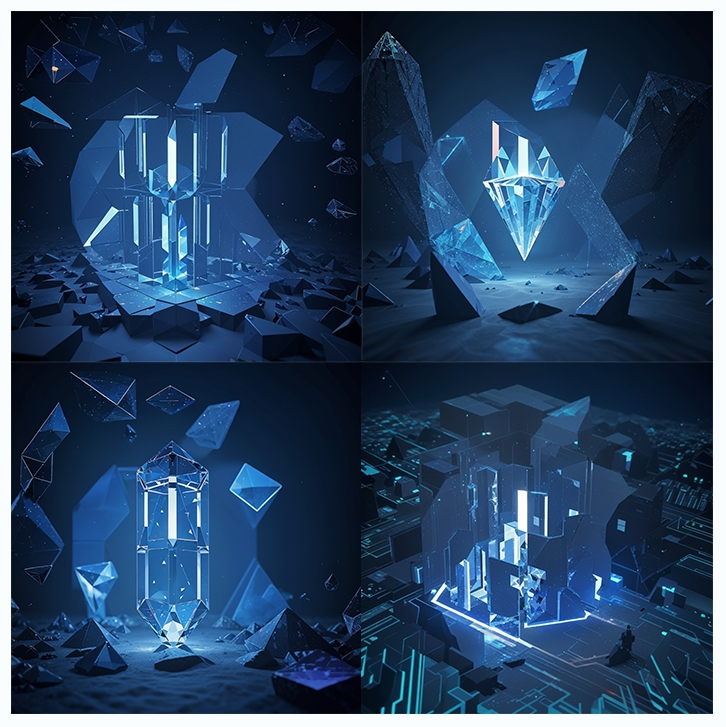

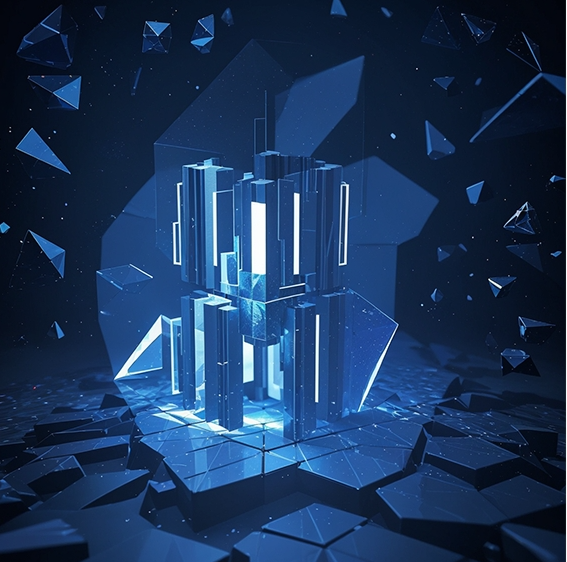

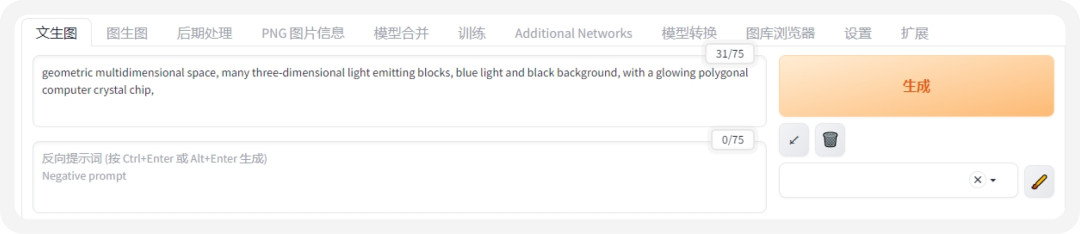

We use a hardware theme as the example for visually extending the Apple brand, and brainstorm prompt keywords around that theme, such as computer, chip, space, and multi-dimensional. Enter the prompt in the text box:

geometric multidimensional space, many three-dimensional light emitting blocks, blue light and black background, with a glowing polygonal computer crystal chip,
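When you brainstorm many keywords around a theme, repeats and stray whitespace creep in easily. The snippet below is a small illustrative sketch of assembling a keyword list into one comma-separated prompt string; the helper name `build_prompt` is hypothetical and is not part of Stable Diffusion or any extension.

```python
def build_prompt(phrases):
    """Join phrases into one comma-separated prompt, dropping blanks and repeats."""
    seen = set()
    cleaned = []
    for phrase in phrases:
        text = " ".join(phrase.split())  # collapse stray whitespace
        key = text.lower()               # treat "Chip" and "chip" as the same keyword
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return ", ".join(cleaned)


keywords = ["computer", "chip", "chip", "space", "multi-dimensional"]
print(build_prompt(keywords))  # computer, chip, space, multi-dimensional
```

The resulting string can be pasted into the prompt text box and then extended with descriptive phrases such as those in the prompt above.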

If you can't come up with suitable keywords, you can collect relevant design references online, load a reference image in the image-to-image (img2img) interface, and click the CLIP interrogation button to infer prompt words from it. Then pick the useful words from the result and enter them into the text-to-image (txt2img) prompt.

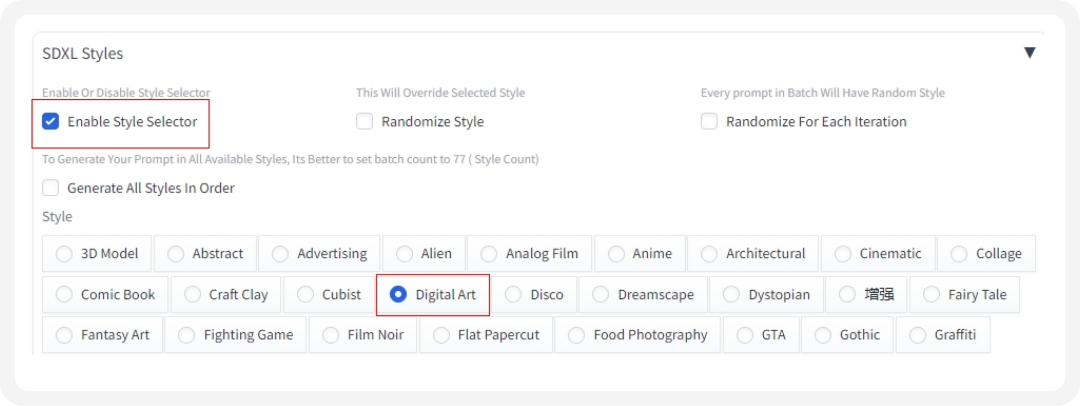

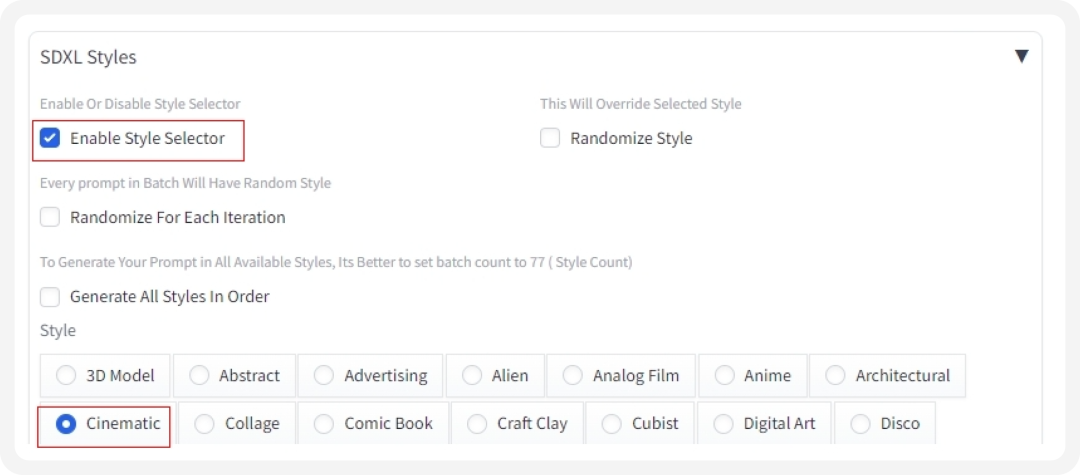

This style selector provides options for generating different types of styles. If it is not available in your interface, copy the following link and install it from the URL in the extensions panel.

https://github.com/ahgsql/StyleSelectorXL