December 20, 2024 - Large language models (LLMs) such as ChatGPT have become increasingly adept at processing and generating human language over the past few years, but the extent to which these models mimic the neural processes that support language processing in the human brain remains unclear.

According to a Tech Xplore report on December 18, a team of researchers at Columbia University and the Feinstein Institutes for Medical Research recently conducted a study exploring the similarities between LLMs and neural responses in the brain. The study showed that as LLM technology advances, these models not only improve in performance but also become structurally closer to the human brain.

In an interview with Tech Xplore, Gavin Mischler, the paper's first author, said, "We were inspired to write this paper by the rapid progress of LLMs and neural AI research in recent years."

"A few years ago, several papers showed that the word embeddings of GPT-2 bore some resemblance to the human brain's neural responses to language, but in the rapidly evolving field of artificial intelligence, GPT-2 is now outdated and no longer considered the most powerful model."

"Since the release of ChatGPT, many more powerful models have emerged, but there has been little research on whether these new models still exhibit the same similarities to the brain."

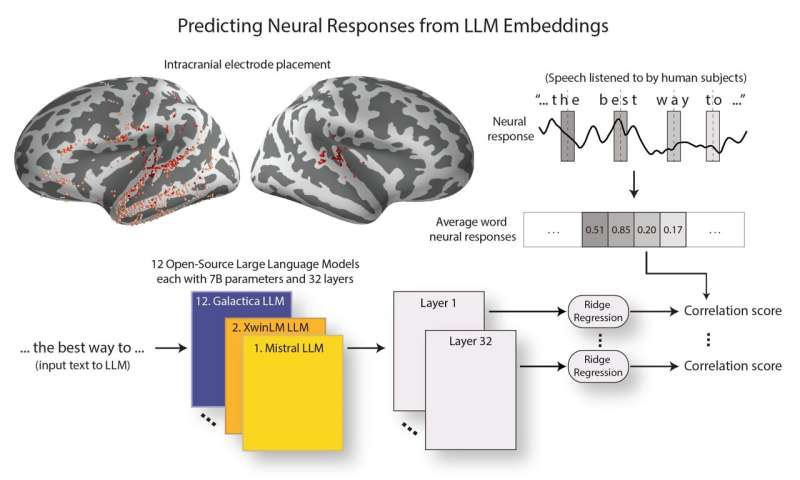

The main goal of Mischler and his team was to explore whether the latest generation of LLMs still exhibits characteristics similar to those of the human brain. The researchers analyzed 12 different open-source LLMs with nearly identical architectures and parameter counts. They also recorded the brain responses of neurosurgical patients listening to speech, using electrodes implanted in their brains.

Mischler explains, "We also fed the same speech transcripts into the LLMs and extracted their word embeddings, which are the internal representations the models use to process and encode text. To measure how similar these LLMs are to the brain, we assessed how well their embeddings could predict the brain's neural activity in response to each word. This tells us how similar the two are."
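Predicting neural activity from word embeddings in this way is usually called an encoding model. The article does not say which regression method the team used, so the sketch below assumes cross-validated ridge regression, a common choice for this kind of analysis. The function names (`ridge_fit`, `pearson_per_column`, `encoding_scores`) and the array shapes are hypothetical illustrations, not the authors' code. The sketch predicts each electrode's response to each word from one layer's embeddings and scores the fit by the held-out Pearson correlation.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)


def pearson_per_column(Y_true, Y_pred):
    """Pearson correlation between true and predicted values, one per electrode (column)."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    num = (yt * yp).sum(axis=0)
    den = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    return num / den


def encoding_scores(embeddings, neural, alpha=1.0, n_folds=5):
    """Cross-validated accuracy of predicting neural responses from word embeddings.

    embeddings: (n_words, n_dims) word embeddings taken from one LLM layer
    neural:     (n_words, n_electrodes) response of each electrode to each word
    Returns the mean held-out Pearson r for each electrode (hypothetical setup).
    """
    n_words = embeddings.shape[0]
    all_idx = np.arange(n_words)
    # Contiguous folds: neighboring words in continuous speech are correlated
    folds = np.array_split(all_idx, n_folds)
    scores = []
    for test_idx in folds:
        train_idx = np.setdiff1d(all_idx, test_idx)
        X_tr, X_te = embeddings[train_idx], embeddings[test_idx]
        Y_tr, Y_te = neural[train_idx], neural[test_idx]
        # Standardize features and center targets using training statistics only
        mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-8
        y_mean = Y_tr.mean(axis=0)
        W = ridge_fit((X_tr - mu) / sd, Y_tr - y_mean, alpha)
        Y_hat = ((X_te - mu) / sd) @ W + y_mean
        scores.append(pearson_per_column(Y_te, Y_hat))
    return np.mean(scores, axis=0)
```

Running this once per layer of each model would give a layer-by-electrode table of prediction scores. Contiguous folds are used instead of shuffled ones because shuffling neighboring words into both the training and test sets could leak information between them.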

After collecting the data, the researchers used computational tools to analyze how well each LLM aligned with the brain. In particular, they focused on which layers of the LLMs most closely matched the brain regions associated with language processing. The brain is known to process language hierarchically, progressively building abstract representations by analyzing the acoustic, phonological, and other components of speech.
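One possible way to quantify this layer-to-region correspondence is sketched below. It assumes a table of per-layer encoding scores for every electrode (for example, from a ridge-regression encoding model) and a hypothetical ordinal label giving each electrode's position in the language hierarchy. For each electrode it picks the best-predicting layer, then computes the Spearman correlation between that peak layer and the region's position; a value near 1 would mean that deeper layers best match later processing stages. The article does not describe the authors' exact metric, so the names and the choice of Spearman correlation are assumptions.

```python
import numpy as np


def average_ranks(values):
    """Ranks starting at 0, with tied values sharing the average of their positions."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="stable")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        # Extend j over the run of values tied with sorted_vals[i]
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0
        i = j + 1
    return ranks


def hierarchy_alignment(layer_scores, region_level):
    """Correlate each electrode's best-matching layer with its region's hierarchy level.

    layer_scores: (n_layers, n_electrodes) encoding score of every layer for every electrode
    region_level: (n_electrodes,) hypothetical ordinal level of each electrode's region,
                  e.g. 0 = early auditory cortex, higher = later language areas
    Returns (peak layer per electrode, Spearman correlation).
    """
    peak_layer = np.argmax(layer_scores, axis=0)
    # Spearman correlation = Pearson correlation of the (tie-averaged) ranks
    rho = np.corrcoef(average_ranks(peak_layer), average_ranks(region_level))[0, 1]
    return peak_layer, rho
```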

"We found that the word embeddings of these models became increasingly similar to the brain's responses to language as LLM capabilities increased," said Mischler. "Even more surprisingly, as the performance of the models improved, so did their alignment with the brain's hierarchy. This means that the information extracted by different brain regions during language processing is more consistent with the information extracted at different layers of the higher-performing LLMs."

These findings suggest that the best-performing LLMs more accurately reflect the brain's responses during language processing. Moreover, the strong performance of these models may be related to the efficiency of their early layers.
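The link between performance and early layers can be illustrated with a simple, hypothetical measure: the relative depth (0 for the first layer, 1 for the last) at which a model's mean encoding score across electrodes peaks, correlated across models with a benchmark score. The function names, the averaging over electrodes, and the benchmark input are assumptions for illustration and are not taken from the study.

```python
import numpy as np


def relative_peak_depth(layer_scores):
    """Relative depth (0 = first layer, 1 = last) where the mean score over electrodes peaks.

    layer_scores: (n_layers, n_electrodes) encoding scores; assumes at least two layers.
    """
    mean_per_layer = layer_scores.mean(axis=1)
    return np.argmax(mean_per_layer) / (len(mean_per_layer) - 1)


def performance_vs_depth(models):
    """Pearson correlation across models between benchmark score and relative peak depth.

    models: list of (benchmark_score, layer_scores) pairs, one per LLM (hypothetical inputs).
    A negative value means higher-scoring models reach their peak in earlier layers.
    """
    perf = np.array([p for p, _ in models], dtype=float)
    depth = np.array([relative_peak_depth(s) for _, s in models])
    return np.corrcoef(perf, depth)[0, 1]
```

A negative correlation from such a measure would be consistent with the idea that higher-performing models extract brain-like features in earlier layers, although this toy version ignores many details of a real analysis, such as per-region differences and statistical testing.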

The results of the study have been published in the journal Nature Machine Intelligence.