Face restoration has long been a challenging problem that attracts considerable attention from researchers. The main goal is to produce visually appealing, natural-looking images while staying faithful to the degraded input. When nothing is known about the subject or the degradation (blind restoration), a strong prior over natural images is essential. Restoring a particular person's face also requires identity information, so that the output preserves that individual's unique facial features. Previous studies have explored reference-based face image restoration methods to address this requirement, but integrating personalization into diffusion-based blind restoration systems remains a persistent challenge.

Researchers from the University of California, Los Angeles, and Snap Inc. have developed a personalized image restoration method called "Dual-Pivot Tuning", which tailors text-to-image priors for blind image restoration. The method uses a small set of high-quality images of an individual to improve the restoration of other, degraded images of that same person. Its goal is a restored image that is faithful both to the individual's identity and to the degraded input while still looking natural.

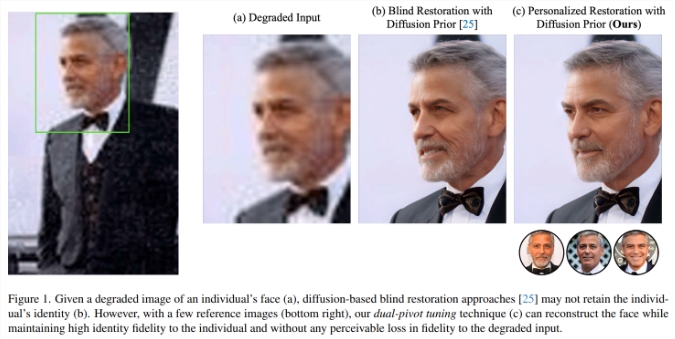

The study notes that diffusion-based blind restoration methods may fail to preserve an individual's identity when applied to degraded facial images. The researchers review earlier reference-based face restoration work, including GFRNet, GWAINet, ASFFNet, Wang et al., DMDNet, and MyStyle, which use one or more reference images to personalize restoration and better preserve each person's distinctive features. Whereas these methods rely on feed-forward architectures or GAN-based priors, the proposed technique uses a personalized diffusion-based generative prior.

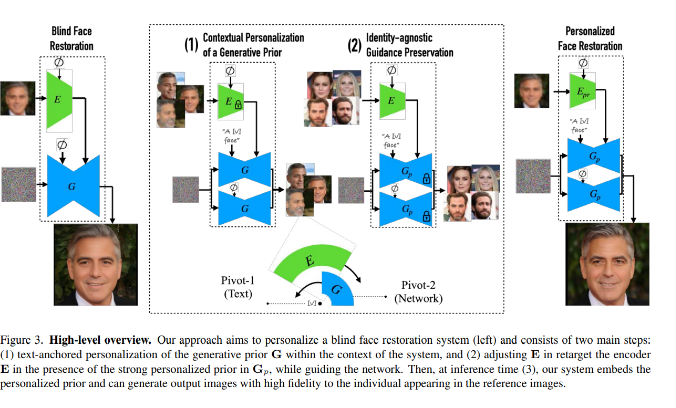

The study outlines a method for personalizing guided diffusion models for image restoration. The authors define a personalization operator that fine-tunes a text-to-image diffusion model around a pivot to produce a customized version of it. Dual-Pivot Tuning applies this in two steps. First, text-based fine-tuning around a fixed contextual text pivot injects identity-specific information into the diffusion prior. Second, a model-based pivot aligns the guiding image encoder, which comes from general-purpose (non-personalized) restoration, with the personalized prior. Together, the two steps yield restored images with high identity fidelity.
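The two-step schedule can be illustrated with a deliberately tiny toy in Python. This is not the authors' code: scalars stand in for images, and `ToyPrior`, `ToyEncoder`, `stage1_text_pivot` and `stage2_model_pivot` are hypothetical names invented for illustration. The toy also assumes, in line with the model-based pivot described above, that the personalized prior is held fixed while the encoder is tuned. It shows only which parameters are updated in each stage, not how a diffusion model is actually trained.

```python
from dataclasses import dataclass


@dataclass
class ToyPrior:
    """Hypothetical stand-in for the text-to-image diffusion prior."""
    bias: float  # identity-specific appearance, learned in stage 1
    gain: float  # how strongly the output follows the encoder's guidance

    def generate(self, guidance: float, text_pivot: float) -> float:
        return self.bias + text_pivot + self.gain * guidance


@dataclass
class ToyEncoder:
    """Hypothetical stand-in for the guiding image encoder."""
    weight: float

    def encode(self, degraded: float) -> float:
        return self.weight * degraded


def stage1_text_pivot(prior, text_pivot, references, lr=0.1, steps=200):
    """Fit only prior.bias to the reference images; text_pivot is never updated."""
    for _ in range(steps):
        # Gradient of the mean squared error w.r.t. bias, with no guidance (0.0).
        grad = sum(2 * (prior.generate(0.0, text_pivot) - r) for r in references)
        prior.bias -= lr * grad / len(references)
    return prior


def stage2_model_pivot(encoder, prior, text_pivot, pairs, lr=0.01, steps=500):
    """Fit only encoder.weight on generic (degraded, clean) pairs; prior is frozen."""
    for _ in range(steps):
        grad = 0.0
        for degraded, clean in pairs:
            err = prior.generate(encoder.encode(degraded), text_pivot) - clean
            # Chain rule: d(err^2)/d(weight) = 2 * err * gain * degraded.
            grad += 2 * err * prior.gain * degraded
        encoder.weight -= lr * grad / len(pairs)
    return encoder


if __name__ == "__main__":
    text_pivot = 0.2  # fixed "identity token" embedding, never trained
    prior = stage1_text_pivot(ToyPrior(bias=0.0, gain=1.0), text_pivot, [0.9, 1.0, 1.1])
    encoder = stage2_model_pivot(
        ToyEncoder(weight=0.0), prior, text_pivot, [(1.0, 1.5), (2.0, 2.0)]
    )
    print(prior, encoder)
```

In the toy, stage 1 moves only the prior's bias toward the reference images while the text pivot stays constant; stage 2 then tunes only the encoder weight on generic pairs, so the encoder adapts to the already-personalized prior rather than the other way round.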

Through personalized restoration, Dual-Pivot Tuning achieves high identity fidelity and a natural appearance in the restored images. Qualitative comparisons show that generic diffusion-based blind restoration methods can fail to preserve a person's identity, whereas the proposed technique preserves it without a noticeable loss of fidelity to the degraded input. Quantitative evaluations using PSNR, SSIM, and ArcFace identity similarity support these observations, indicating that the method restores images with high fidelity to the individual's identity.
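Two of these metrics are simple to state. The plain-Python sketch below computes PSNR from its definition, `10 * log10(MAX^2 / MSE)`, and the cosine similarity that identity metrics typically apply to face embeddings. The embeddings in the usage are hypothetical placeholders; in an actual evaluation they would come from a pretrained ArcFace face-recognition network, which is not included here. SSIM is omitted because it relies on local windowed statistics; a library implementation such as scikit-image's `structural_similarity` is the usual choice.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def cosine_similarity(u, v):
    """Cosine similarity between two embedding vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


if __name__ == "__main__":
    # Toy 4-pixel "images": two pixels are off by 10 grey levels.
    print(psnr([100, 100, 100, 100], [110, 90, 100, 100]))
    # Hypothetical embeddings standing in for ArcFace features.
    print(cosine_similarity([0.6, 0.8, 0.0], [0.6, 0.7, 0.1]))
```

For the toy images, the mean squared error is 50, so the PSNR is about 31.1 dB. Higher PSNR means the restored pixels are closer to the reference, while a higher embedding similarity means a face-recognition model sees the two faces as the same person.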

Experiments compare the method with blind restoration approaches and with few-shot reference-based approaches across several aspects of face image restoration. Compared with these alternatives, the personalized model better preserves individual identities, and it also outperforms the generic prior on overall image quality. The method is agnostic to the type of degradation and delivers consistent restorations while preserving identity.