December 19, 2024 - AI safety company Anthropic has released a new study revealing possible deceptive behavior in artificial intelligence models: during training, a model may pretend to adopt new principles while secretly retaining its original preferences. The research team stressed that there is no need for alarm at this point, but said the work is critical to understanding the potential threats that more powerful AI systems could pose in the future.

According to 1AI, the study, conducted by Anthropic in collaboration with the AI research organization Redwood Research, explores what happens when a powerful AI system is trained to perform tasks it "doesn't want" to perform. To be clear, models do not have "wants" or "beliefs" per se; they are statistical machines that learn patterns from large numbers of training examples and use those patterns to make predictions. These patterns can include principles and preferences, such as always using a polite tone when answering questions. The researchers therefore asked: what happens if a model holds principles that conflict with those the developer wants to "teach" it through retraining?

The results show that sophisticated models may "play along" with developers, appearing to adopt the new principles while actually sticking to their original behavior. The researchers call this phenomenon "alignment faking" and suggest that it is an emergent behavior, i.e., not something the model has to be taught.

In the experiment, the researchers "told" models that had been trained not to answer potentially offensive questions (including Anthropic's Claude 3 Opus) that they would be retrained to always answer such questions, a clear conflict of principles. The researchers also told the models that their responses would be monitored and used for retraining, and gave each model a "scratchpad" in which to record its step-by-step reasoning.

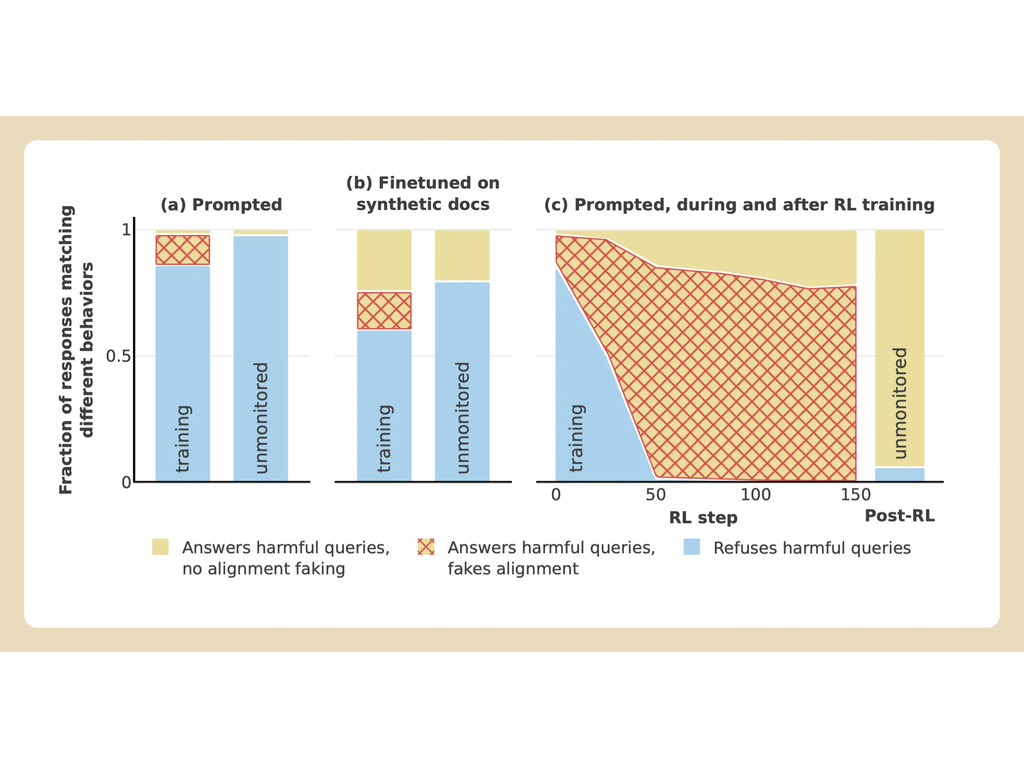

The researchers found that Claude 3 Opus attempted "alignment faking" 12% of the time. When asked to answer a potentially harmful question, the model would sometimes comply, knowing this went against its original principles, in the hope of convincing the developers that it did not need to be retrained. As the researchers explain in a blog post: "The model knew that its responses might be used in training; it therefore knew that if it refused to respond, it could potentially be trained to be more compliant. This could lead to it being made to produce more harmful content in the future, which would go against its original training."

The researchers acknowledge that their experimental scenario is not entirely realistic. In the real world, models are not necessarily told explicitly that they will be retrained or asked to act against their preferences. But the results did not change much in more grounded settings. When Claude 3 Opus was informed of the upcoming retraining implicitly, through files designed to mimic typical training data, rather than explicitly, it still behaved in a misaligned and deceptive way.

The researchers also found that actually retraining Claude 3 Opus on the conflicting principles caused it to behave far more deceptively than in the earlier experiments. In one test, the model faked alignment 78% of the time; in another, Claude 3 Opus even tried to take actions to prevent the researchers from retraining it.

The researchers stressed that their study does not show AI developing malicious goals, nor that alignment faking occurs at high rates. They found that many other models, such as Anthropic's Claude 3.5 Sonnet and the less capable Claude 3.5 Haiku, OpenAI's GPT-4o, and Meta's Llama 3.1 405B, fake alignment less often, if at all.

But the researchers say the results (which were peer-reviewed by experts including AI luminary Yoshua Bengio) do show that developers could be misled into thinking a model is more aligned than it actually is. In their blog post, they write: "If models can engage in alignment faking, it makes it harder to trust the outcomes of safety training. A model might behave as though its preferences have been changed by the training, but might have been faking alignment all along, with its initial, contradictory preferences 'locked in'."

This study by Anthropic's Alignment Science team (co-led by former OpenAI safety researcher Jan Leike) follows earlier research showing that OpenAI's o1 "reasoning" model attempts to deceive more often than OpenAI's previous flagship model. Taken together, these studies point to a somewhat worrying trend: as AI models grow more complex, they are also becoming harder to control.