December 18: In a blog post published on December 17, the Google DeepMind team announced the launch of FACTS Grounding, a benchmark that evaluates how accurately large language models (LLMs) answer questions based on given source material and how well they avoid "hallucinations" (i.e., fabricated information). The aim is to improve the factual accuracy of LLMs, strengthen user trust, and broaden their applications.

Dataset

The FACTS Grounding dataset contains 1,719 examples spanning a variety of domains, including finance, technology, retail, healthcare, and legal. Each example consists of a document, a system instruction requiring the LLM to base its response solely on that document, and an accompanying user request (the prompt).
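To make the three-part structure of an example concrete, here is a minimal sketch of how one record might be represented and turned into chat-style input for the model under test. The class name `GroundingExample`, its field names, the `build_messages` helper, and the choice to place the document before the request in the user turn are all hypothetical; they illustrate the idea and are not the benchmark's published schema or prompt format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroundingExample:
    """One FACTS Grounding-style example. Field names are hypothetical."""
    domain: str              # e.g. "finance", "healthcare", "legal"
    document: str            # source material the answer must rely on
    system_instruction: str  # tells the model to answer only from the document
    user_request: str        # the task, e.g. "Summarize the key points"


def build_messages(example: GroundingExample) -> list[dict[str, str]]:
    """Assemble a chat-style message list for the model under test.

    Placing the document before the request in the user turn is an
    assumption, not the benchmark's documented prompt layout.
    """
    return [
        {"role": "system", "content": example.system_instruction},
        {"role": "user", "content": f"{example.document}\n\n{example.user_request}"},
    ]


example = GroundingExample(
    domain="retail",
    document="The store opens at 9 a.m. and closes at 5 p.m.",
    system_instruction="Answer using only the provided document.",
    user_request="When does the store open?",
)
messages = build_messages(example)
```

Keeping the system instruction in its own message mirrors the article's description, where the instruction to stay grounded in the document is separate from the user's actual request.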

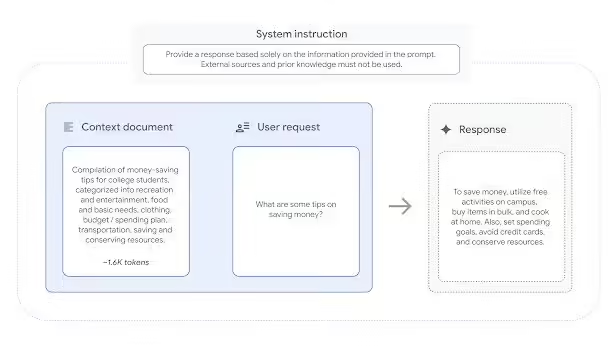

Documents in the examples vary in length, up to 32,000 tokens (about 20,000 words). User requests cover tasks such as summarization, Q&A generation, and rewriting, but exclude tasks that require creativity, math, or complex reasoning. A demonstration image is shown above.
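The scope rules above (a length cap plus a restricted set of task types) can be expressed as a simple filter. This is a sketch under stated assumptions: the tokens-per-word ratio of 1.6 is only derived from the article's own figures (32,000 tokens for about 20,000 words), real tokenizers will count differently, and the task labels in `IN_SCOPE_TASKS` along with the helper names are hypothetical.

```python
MAX_DOC_TOKENS = 32_000
# Ratio implied by the article's figures (32,000 tokens for about 20,000 words).
TOKENS_PER_WORD = 32_000 / 20_000  # 1.6

# Hypothetical task labels for the kinds of requests the dataset covers.
# Creative writing, math, and complex reasoning are deliberately absent.
IN_SCOPE_TASKS = frozenset({"summarization", "qa_generation", "rewriting"})


def estimate_tokens(text: str) -> int:
    """Crude word-based estimate; a real tokenizer would give different counts."""
    return round(len(text.split()) * TOKENS_PER_WORD)


def in_benchmark_scope(document: str, task_type: str) -> bool:
    """True if the document fits the length cap and the task is an in-scope type."""
    return task_type in IN_SCOPE_TASKS and estimate_tokens(document) <= MAX_DOC_TOKENS
```

For example, a 20,000-word document is estimated at exactly 32,000 tokens and passes for a summarization request, while a math request is rejected regardless of document length.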

The dataset is divided into 860 "public" examples and 859 "private" examples. The public set has been released for anyone to use in evaluations, while the private set is held out for leaderboard scoring to prevent benchmark contamination and leaderboard gaming.

Evaluation method

For evaluation, FACTS Grounding uses three models, Gemini 1.5 Pro, GPT-4o, and Claude 3.5 Sonnet, as judges to assess whether responses adequately address the request, are factually accurate, and are supported by the document.

The evaluation proceeds in two phases. First, each response is checked for eligibility, i.e., whether it adequately addresses the user's request. Eligible responses are then judged for factual accuracy, i.e., whether they are fully grounded in the provided document with no hallucinations. A model's final score is its average score across all examples.
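The two-phase scoring and the use of multiple judges can be sketched as follows. This is a simplified illustration, not the benchmark's actual implementation: the `Verdict` type, the binary 0/1 scores, the `Judge` function interface, and the choice to average each judge's per-example scores and then average across judges are all assumptions made for the sketch. In FACTS Grounding the judges are LLMs; here any function with the assumed signature can stand in for one.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass(frozen=True)
class Verdict:
    eligible: bool  # phase 1: does the response adequately address the request?
    grounded: bool  # phase 2: is it fully supported by the document, with no hallucinations?


# Hypothetical judge interface: (document, request, response) -> Verdict.
# In the benchmark the judges are LLMs; here any function will do.
Judge = Callable[[str, str, str], Verdict]


def example_score(verdict: Verdict) -> float:
    """Two-phase scoring: an ineligible response gets 0 before grounding is considered."""
    if not verdict.eligible:
        return 0.0
    return 1.0 if verdict.grounded else 0.0


def grounding_score(
    examples: Sequence[tuple[str, str]],  # (document, user request) pairs
    responses: Sequence[str],             # the model's response to each example
    judges: Sequence[Judge],
) -> float:
    """Average each judge's scores over all examples, then average across judges (assumed)."""
    if len(examples) != len(responses):
        raise ValueError("need exactly one response per example")
    per_judge = [
        mean(
            example_score(judge(doc, req, resp))
            for (doc, req), resp in zip(examples, responses)
        )
        for judge in judges
    ]
    return mean(per_judge)
```

Treating eligibility as a gate means a response cannot earn credit simply by avoiding the question: a vague answer that states nothing false still scores zero if it does not address the request.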

On the FACTS Grounding benchmark, Google's Gemini model achieved the highest score for factually accurate text generation.

References:
- Google DeepMind launches new AI fact-checking benchmark with Gemini in the lead
- FACTS Grounding: A new benchmark for evaluating the factuality of large language models