DeepSeek announced in a post on its official account yesterday (December 13) that it has open-sourced the DeepSeek-VL2 model, which achieved highly favorable results across evaluation metrics. The release marks DeepSeek's vision models formally entering the era of Mixture of Experts (MoE).

Citing the official announcement, 1AI summarizes the highlights of DeepSeek-VL2 as follows:

- Data: twice as much high-quality training data as the first-generation DeepSeek-VL, plus new capabilities such as meme understanding, visual grounding, and visual story generation.

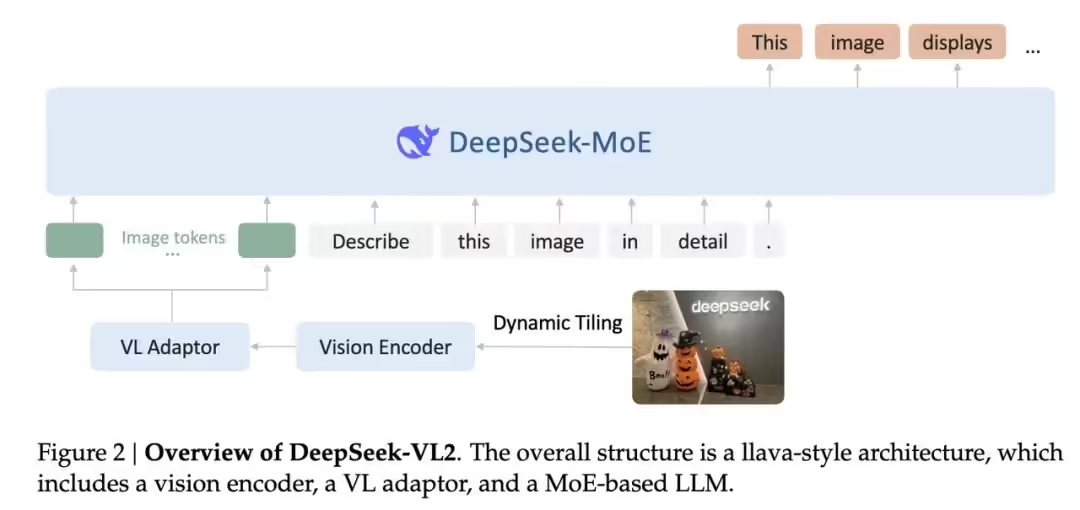

- Architecture: the vision component uses an image-tiling strategy to support dynamic-resolution images, and the language component adopts an MoE architecture to deliver high performance at low cost.

- Training: inherits DeepSeek-VL's three-stage training process, handles the varying number of image tiles per sample through load balancing, applies different pipeline-parallelism strategies to image and text data, and introduces expert parallelism for the MoE language model to achieve efficient training.
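To illustrate why an MoE language model can be cheaper to run, here is a minimal sketch of generic top-k expert routing: a router scores every expert for each token, but only the k highest-scoring experts are actually evaluated, so per-token compute grows with k rather than with the total number of experts. This is a textbook-style illustration under simplifying assumptions (scalar inputs, toy experts, `k=2`), not DeepSeek-VL2's language model, which is built on DeepSeek's own MoE design; all function names here are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Select the top-k experts and renormalize their gate weights to sum to 1."""
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float,
                logits: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int = 2) -> float:
    """Weighted sum of the k routed experts; unselected experts are never called."""
    return sum(w * experts[i](x) for i, w in route(logits, k))


if __name__ == "__main__":
    # Four toy "experts" that just scale their input (hypothetical stand-ins for FFN blocks).
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    # The router strongly prefers experts 2 and 3, so only they run, each with weight 0.5.
    print(moe_forward(1.0, [0.0, 0.0, 5.0, 5.0], experts))  # prints 3.5
```

In a real model the experts are full feed-forward networks and the router logits come from a learned projection of each token's hidden state; the sketch only shows the sparse selection step.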

DeepSeek-VL2 uses only a single SigLIP-SO400M as its image encoder and supports dynamic resolution by splitting each image into multiple sub-images plus one global thumbnail. This strategy lets DeepSeek-VL2 handle resolutions up to 1152x1152 and extreme aspect ratios of 1:9 or 9:1, covering more application scenarios.
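The tiling strategy described above can be sketched in a few lines. This is a minimal illustration, not DeepSeek's code: it assumes square 384x384 tiles (so a 3x3 grid yields 1152x1152 and a 1x9 grid yields a 1:9 aspect ratio, consistent with the figures above) and a cap of nine local tiles. The grid-selection rule (preserve as many original pixels as possible, then waste as little padding as possible) follows LLaVA-NeXT-style preprocessing and is likewise an assumption. `TILE`, `MAX_TILES`, `candidate_grids`, `choose_grid`, and `tile_boxes` are hypothetical names.

```python
from typing import List, Optional, Tuple

TILE = 384      # assumed tile side length in pixels (hypothetical)
MAX_TILES = 9   # assumed maximum number of local tiles (hypothetical)


def candidate_grids(max_tiles: int = MAX_TILES) -> List[Tuple[int, int]]:
    """All (cols, rows) tile grids whose tile count is at most max_tiles."""
    return [(c, r)
            for c in range(1, max_tiles + 1)
            for r in range(1, max_tiles + 1)
            if c * r <= max_tiles]


def choose_grid(width: int, height: int) -> Tuple[int, int]:
    """Pick the grid that preserves the most original pixels, then wastes the least padding."""
    best: Optional[Tuple[int, int]] = None
    best_key: Optional[Tuple[int, int]] = None
    for cols, rows in candidate_grids():
        canvas_w, canvas_h = cols * TILE, rows * TILE
        # Resize the image to fit the canvas while keeping its aspect ratio.
        scale = min(canvas_w / width, canvas_h / height)
        fit_w, fit_h = int(width * scale), int(height * scale)
        # Upscaling adds no real detail, so cap at the original pixel count.
        effective = min(fit_w * fit_h, width * height)
        wasted = canvas_w * canvas_h - effective
        key = (-effective, wasted)
        if best_key is None or key < best_key:
            best, best_key = (cols, rows), key
    assert best is not None
    return best


def tile_boxes(cols: int, rows: int) -> List[Tuple[int, int, int, int]]:
    """(left, top, right, bottom) crop boxes for each TILE x TILE sub-image, row by row."""
    return [(c * TILE, r * TILE, (c + 1) * TILE, (r + 1) * TILE)
            for r in range(rows) for c in range(cols)]


if __name__ == "__main__":
    cols, rows = choose_grid(1152, 1152)
    boxes = tile_boxes(cols, rows)
    # Local tiles plus one global thumbnail (the whole image resized to TILE x TILE).
    print((cols, rows), len(boxes) + 1)  # prints (3, 3) 10
```

Under these assumptions a 1152x1152 image becomes nine 384x384 crops plus one thumbnail, while a 384x3456 image maps to a single 1x9 column of tiles; each view would then be passed through the image encoder.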

Thanks to training on more scientific-document data, DeepSeek-VL2 can also readily understand a wide range of scientific charts and, through Plot2Code, generate Python code from images.

Both the model and the paper have been published:

Model download: https://huggingface.co/deepseek-ai

GitHub repository: https://github.com/deepseek-ai/DeepSeek-VL2