Google has officially released Gemini 2.0, which it describes as its most capable AI model to date. The release brings improved performance, richer multimodal output (for example, native image and audio output), and native tool use.

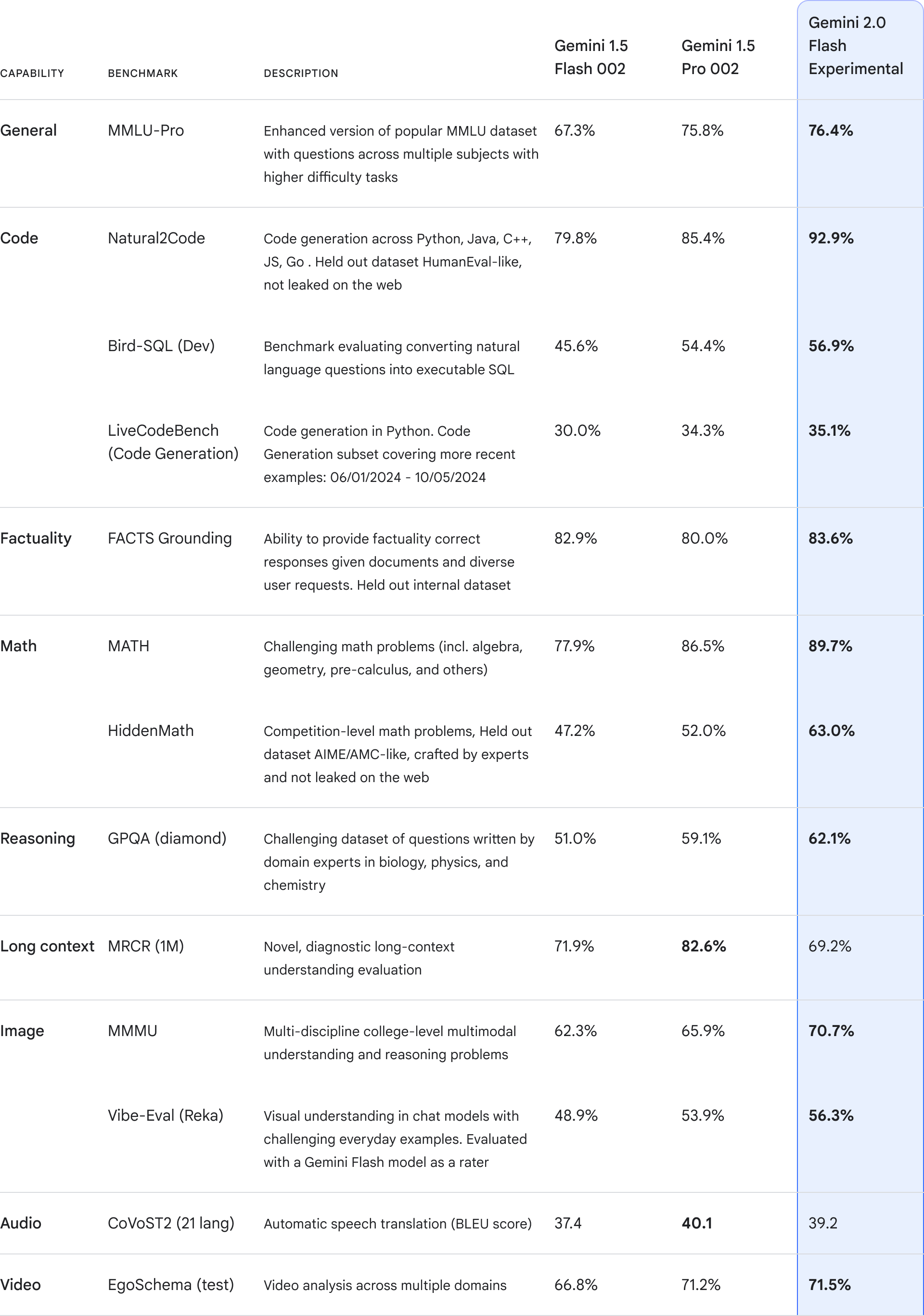

Gemini 2.0 shows significant gains over Gemini 1.5 Pro on key benchmarks and has lower latency. According to Google, it outperforms 1.5 Pro on those benchmarks while running twice as fast.

Gemini 2.0 also introduces a range of new features. In addition to accepting multimodal input such as images, video, and audio, it now supports multimodal output: natively generated images interleaved with text, and customizable multilingual text-to-speech (TTS) audio. It can also natively call tools such as Google Search, code execution, and third-party user-defined functions.
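To make the idea of "user-defined functions" concrete, here is a minimal sketch of how an application might route a model's tool request to its own code. It does not use the Gemini SDK. The JSON shape (`name` and `args`), the `TOOLS` registry, the `tool` decorator, and the `get_weather` stub are all hypothetical names chosen for illustration; the actual request format is defined by Google's API documentation.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so it can be looked up by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> dict:
    # Stub data for illustration; a real tool would query a weather service.
    return {"city": city, "forecast": "sunny"}


def dispatch(call_json: str) -> dict:
    """Run the tool named in a JSON request and wrap its result.

    Assumes a request of the form {"name": ..., "args": {...}};
    this shape is an assumption, not the Gemini wire format.
    """
    call = json.loads(call_json)
    name = call["name"]
    args = call.get("args", {})
    if name not in TOOLS:
        return {"name": name, "error": f"unknown tool: {name}"}
    return {"name": name, "result": TOOLS[name](**args)}


if __name__ == "__main__":
    request = '{"name": "get_weather", "args": {"city": "Paris"}}'
    print(dispatch(request))
```

In a real integration, the application would send the returned dictionary back to the model so it can use the result in its next response.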

Gemini 2.0 Flash adds native user-interface action capabilities, along with improvements in multimodal reasoning, long-context understanding, complex instruction following and planning, compositional function calling, native tool use, and latency.

Google describes the practical application of AI agents as an exciting research area full of possibilities. The company says it is exploring this area with a series of prototypes that can help people get things done: an update to Project Astra, its research prototype exploring the future capabilities of a universal AI assistant; the new Project Mariner, which explores the future of human-computer interaction, starting with the browser; and Jules, an AI coding agent that can help developers.

Developers can try the experimental version of Gemini 2.0 Flash in AI Studio and Vertex AI today. Multimodal input and text output are available to all developers, while text-to-speech and native image generation are limited to early-access partners, with general availability expected in January. The model is also available for trial to Gemini Advanced users on the web, with a mobile version to follow.

To help developers build dynamic, interactive applications, Google has also released the new Multimodal Live API, which accepts real-time audio and video input and can use multiple tools in combination.