On December 8, China Mobile announced that it had developed a high-fidelity, speech-driven 2D digital human system jointly with a team from Nanjing University.

As the communications operator with the world's largest number of users, China Mobile incurs huge customer service operating costs every year. Intelligent voice customer service, now widely deployed, can handle a certain share of automated business responses, but it still falls short of the face-to-face, one-on-one, star-level service experience offered by human agents.

To address these business pain points, China Mobile's JiuTian vision team joined forces with Tai Ying's team from Nanjing University to develop a high-fidelity, speech-driven 2D digital human system. It aims to provide users with a digital human broadcasting and dialog service with natural expressions, lip movements synchronized with the voice, and coordinated head poses. The service can be applied to intelligent customer service, education and training, and advertising and marketing scenarios.

According to China Mobile's official introduction, the speech-driven 2D digital human system takes a photo or video of the target character plus any audio clip and generates a video stream of that character speaking in sync with the audio. The character in the generated video must be highly realistic, with natural expressions and poses. The system must also run in real time and integrate with language models and speech synthesis, so that it can serve as a digital stand-in for the character.

In developing the system, China Mobile's JiuTian vision team and Nanjing University tackled key technical problems and introduced innovations in the following three areas:

- First, real-time performance: compared with previous digital human methods, its mouth-shape generation for real-time broadcasting has reached an academically leading level. It supports driving digital humans in both Chinese and English and achieves real-time performance of 30 ms/frame while maintaining quality.

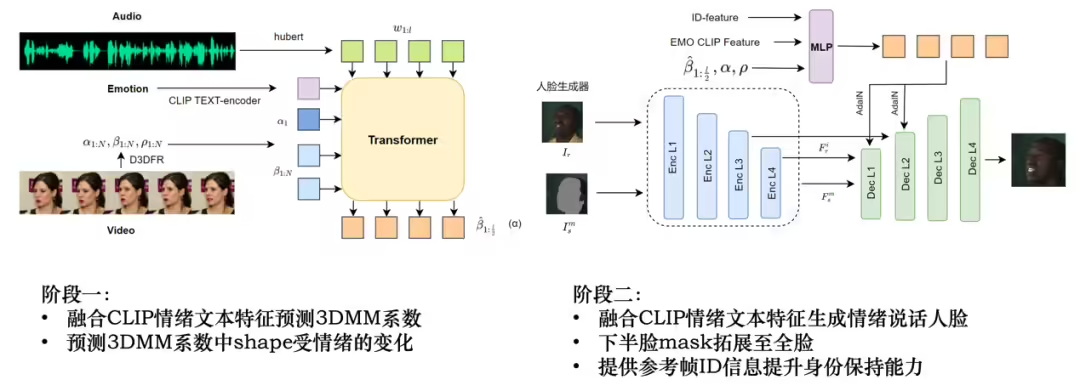

- Second, leading quality: the team developed a two-stage learning framework that decomposes speech-driven digital human generation into two parts, from audio to mouth coefficients and from mouth coefficients to the generated portrait. This reduces the learning difficulty and yields better generation results.

- Third, emotion control: the team introduced an emotion-guided learning module. It supports generating 7 mainstream emotions, including normal, smile, surprise, anger, fear, and sadness, giving the generated announcer the ability to express human emotions.
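To make the two-stage decomposition concrete, the following is a minimal structural sketch in Python. It only illustrates how the stages hand data to each other. The function names, the `Frame` type, the energy-based "coefficients", and the `emotion` parameter are all hypothetical placeholders, not China Mobile's models or API.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Frame:
    """Hypothetical stand-in for one generated video frame."""
    index: int
    mouth_coeffs: List[float]
    emotion: str


def audio_to_mouth_coeffs(audio_windows: Sequence[Sequence[float]],
                          n_coeffs: int = 4) -> List[List[float]]:
    """Stage 1 (placeholder): one mouth-coefficient vector per audio window.

    A real system would use a learned model; here the mean absolute
    amplitude of the window is scaled into a toy coefficient vector.
    """
    coeffs = []
    for window in audio_windows:
        energy = sum(abs(s) for s in window) / max(len(window), 1)
        coeffs.append([energy * (k + 1) for k in range(n_coeffs)])
    return coeffs


def mouth_coeffs_to_frames(coeffs: Sequence[List[float]],
                           emotion: str = "normal") -> List[Frame]:
    """Stage 2 (placeholder): a real system would render the portrait here."""
    return [Frame(index=i, mouth_coeffs=list(c), emotion=emotion)
            for i, c in enumerate(coeffs)]


def drive(audio_windows: Sequence[Sequence[float]],
          emotion: str = "normal") -> List[Frame]:
    """Chain the two stages: audio -> mouth coefficients -> frames."""
    return mouth_coeffs_to_frames(audio_to_mouth_coeffs(audio_windows), emotion)


frames = drive([[0.0, 0.5], [1.0, -1.0]], emotion="smile")
print(len(frames), frames[0].mouth_coeffs)
```

Because the intermediate representation is a small coefficient vector rather than raw pixels, each stage in such a design can be trained and evaluated separately, which is consistent with the reduced learning difficulty described above.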

1AI learned from China Mobile that the digital human generation technology achieves end-to-end, two-stage real-time generation at 30 FPS and supports 512×512 face-region generation. It can also generate 7 mainstream emotions, such as happiness and sadness.
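The article quotes both a per-frame time (30 ms/frame) and a frame rate (30 FPS). The short Python sketch below shows how the two figures relate; the helper names are illustrative only.

```python
def fps_from_frame_time(ms_per_frame: float) -> float:
    """Frames per second achievable at a given per-frame latency."""
    return 1000.0 / ms_per_frame


def frame_budget_ms(fps: float) -> float:
    """Maximum time per frame, in milliseconds, to sustain a frame rate."""
    return 1000.0 / fps


print(round(fps_from_frame_time(30.0), 1))  # 33.3 FPS at 30 ms/frame
print(round(frame_budget_ms(30.0), 1))      # 33.3 ms budget for 30 FPS
```

In other words, a 30 ms/frame generation time fits within the roughly 33.3 ms per-frame budget that 30 FPS playback requires.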

On the VoxCeleb dataset, the technology achieved an LMD (Landmark Distance) of 4.3 for mouth-shape accuracy and an FID of 11.1 for naturalness of generation.
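The article does not spell out how LMD is computed. As a rough sketch, assuming the common definition (the mean Euclidean distance between corresponding predicted and ground-truth mouth landmarks, averaged over all frames), it could be calculated as follows. The function name and input layout are assumptions made for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def landmark_distance(pred: Sequence[Sequence[Point]],
                      target: Sequence[Sequence[Point]]) -> float:
    """Mean Euclidean distance over all landmarks in all frames.

    pred and target hold one list of (x, y) landmarks per frame,
    in the same order; lower values mean more accurate mouth shapes.
    """
    total = 0.0
    count = 0
    for pred_frame, target_frame in zip(pred, target):
        for p, t in zip(pred_frame, target_frame):
            total += math.dist(p, t)
            count += 1
    if count == 0:
        raise ValueError("no landmarks to compare")
    return total / count


pred = [[(0.0, 0.0), (3.0, 4.0)]]
target = [[(0.0, 0.0), (0.0, 0.0)]]
print(landmark_distance(pred, target))  # 2.5
```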

China Mobile officials said the results have promising application prospects: they effectively lower the barrier to content creation and improve the visual quality of generated characters. They have also empowered and upgraded the expansion of the 5G new call and message secretary brand businesses.