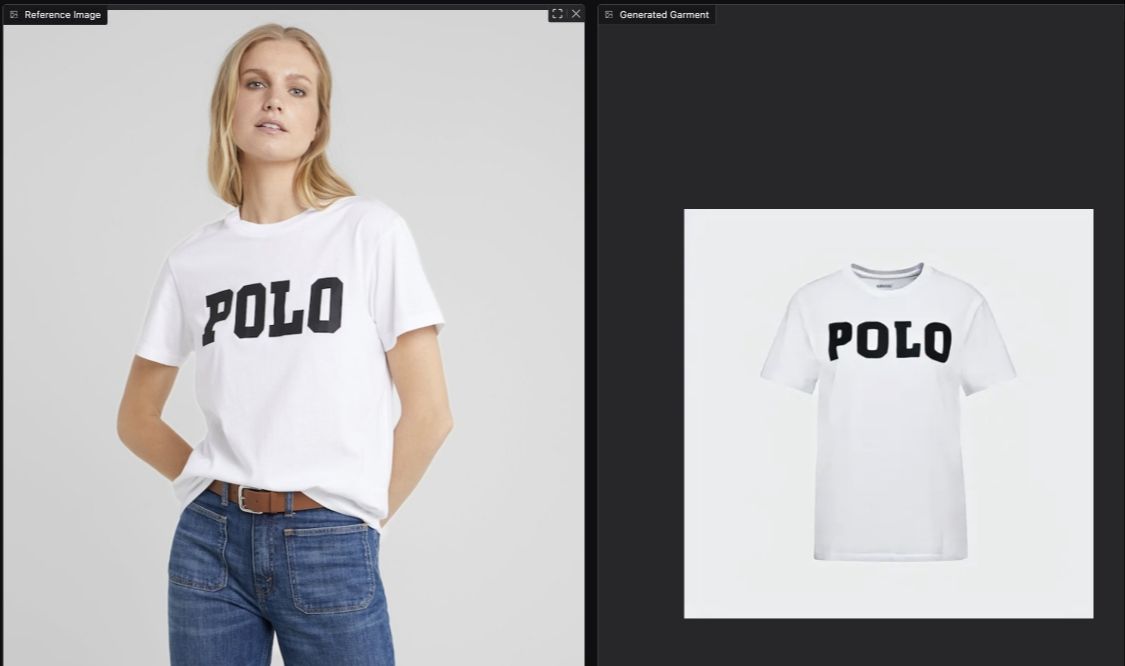

You have probably seen plenty of AI virtual try-on by now. But what about going the other way: taking a photo of someone wearing clothes and recovering a clean image of the garment itself? That is Virtual Try-Off (VTOFF). It generates standardized images of clothing from photos of people wearing them. Traditional Virtual Try-On (VTON) digitally dresses a person in a given garment. VTOFF instead extracts a standardized image of the clothing from a photograph. This requires accurately capturing the garment's shape, texture, and complex patterns, which poses some unique challenges. (Links are at the bottom of the article; the project is expected to be open-sourced and available online soon.)

Researchers at Bielefeld University have proposed TryOffDiff, a new model that adapts Stable Diffusion with SigLIP-based visual conditioning to achieve high fidelity and preserve fine detail. TryOffDiff outperforms baseline methods adapted from pose transfer and virtual try-on, and it requires fewer pre-processing and post-processing steps. Beyond improving product images in e-commerce, VTOFF could also advance the evaluation of generative models and spur further research into high-precision reconstruction.

1. Technical Principles

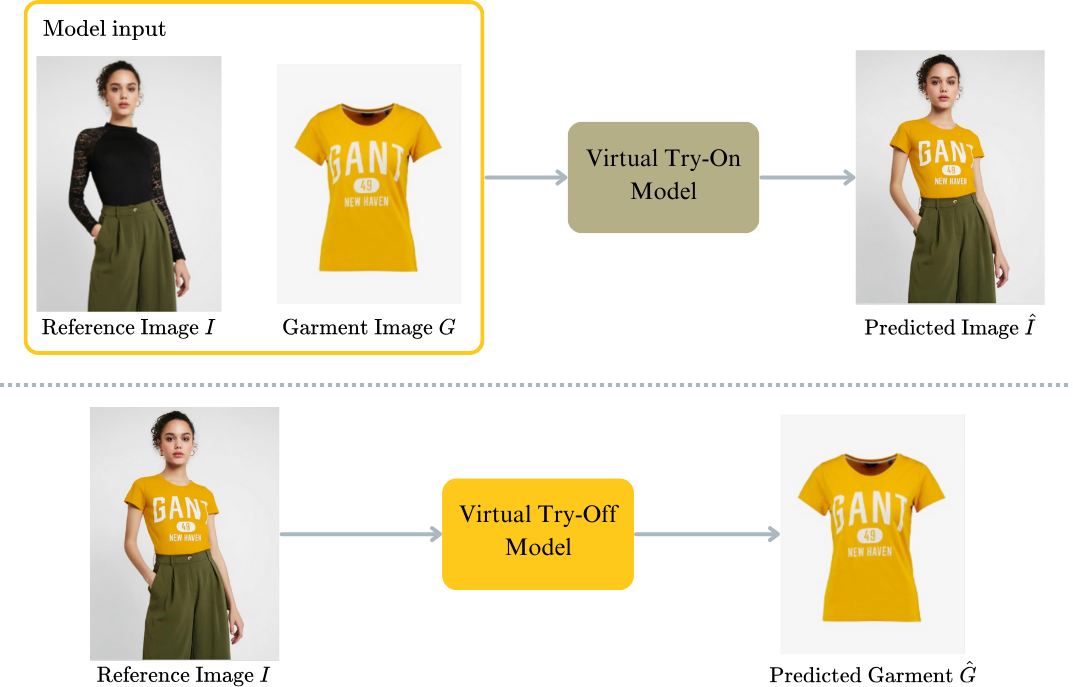

The difference between Virtual Try-On and Virtual Try-Off.

Top: Virtual try-on works as follows. It takes a reference photo of a person and a separate photo of a garment, then generates an image of that person wearing the specified garment.

Bottom: Virtual try-off, by contrast, aims to predict the standardized form of the garment from a single reference image. Instead of putting new clothes on a person, it extracts the clothes from an existing photo and renders them in a standardized pose against a clean background, so the garment can be shown on its own.
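To make the contrast concrete, the two tasks can be written as mappings with different inputs and outputs. The notation here ($f_\theta$, $g_\theta$, $x_{\text{ref}}$, and so on) is ours for illustration, not taken from the paper:

$$
\text{VTON:}\quad f_\theta\big(x_{\text{person}},\, x_{\text{garment}}\big) \;\rightarrow\; \hat{x}_{\text{person wearing garment}}
$$

$$
\text{VTOFF:}\quad g_\theta\big(x_{\text{ref}}\big) \;\rightarrow\; \hat{x}_{\text{garment}}
$$

VTON needs two images and outputs a person; VTOFF needs only one image of a clothed person and outputs the garment alone, which is why it must infer parts of the garment that are occluded or deformed by the body.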

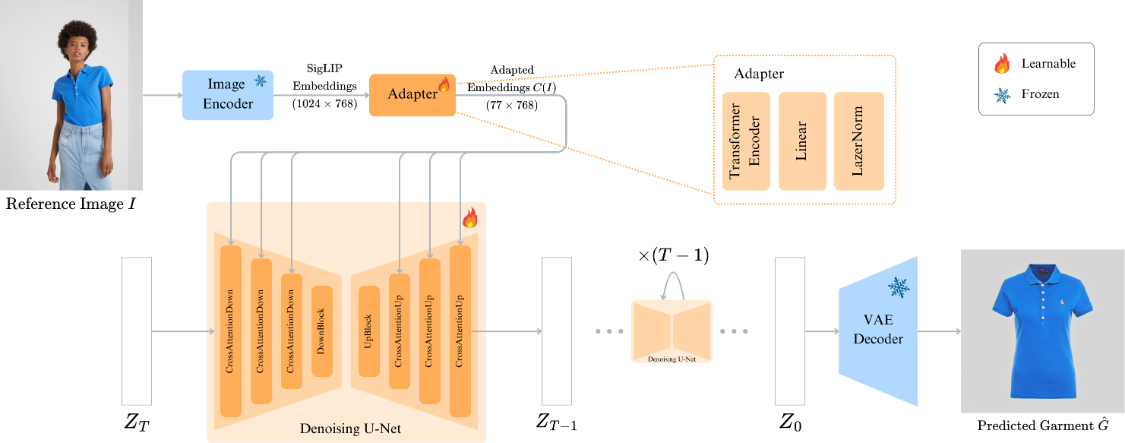

First, features are extracted from the reference image using the SigLIP image encoder.

These extracted image features are then processed by adapter modules.
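The first two steps (encode, then adapt) can be sketched as a shape-matching problem: the image encoder's token sequence has to be turned into something the diffusion model's cross-attention layers can consume. The sketch below is a hypothetical adapter, not TryOffDiff's actual module. It assumes a SigLIP-style encoder producing 729 tokens of width 1152 (random numbers stand in for real features), and targets the 77 x 768 shape of Stable Diffusion v1.4's text conditioning. The linear token mixing plus LayerNorm is our own simplification.

```python
import numpy as np

# Assumed shapes (not from the article): a SigLIP-style encoder emitting
# 729 patch tokens of width 1152, and the 77 x 768 conditioning shape that
# Stable Diffusion v1.4's cross-attention layers expect from CLIP text.
N_IMG_TOKENS, D_IMG = 729, 1152
N_COND_TOKENS, D_COND = 77, 768

rng = np.random.default_rng(0)


def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


class HypotheticalAdapter:
    """Hypothetical adapter: project width 1152 -> 768, then mix 729 tokens down to 77."""

    def __init__(self, rng):
        # Randomly initialized weights; in practice these would be learned.
        self.w_channel = rng.normal(0, 0.02, size=(D_IMG, D_COND))
        self.w_tokens = rng.normal(0, 0.02, size=(N_COND_TOKENS, N_IMG_TOKENS))

    def __call__(self, feats):
        # feats: (batch, 729, 1152) -> (batch, 729, 768)
        x = feats @ self.w_channel
        # Token mixing: (77, 729) x (batch, 729, 768) -> (batch, 77, 768)
        x = np.einsum("ot,btd->bod", self.w_tokens, x)
        return layer_norm(x)


# Random features stand in for SigLIP output on one reference photo.
siglip_features = rng.normal(size=(1, N_IMG_TOKENS, D_IMG))
cond = HypotheticalAdapter(rng)(siglip_features)
print(cond.shape)  # (1, 77, 768)
```

Matching the text-embedding shape exactly is a convenient design choice because the pre-trained cross-attention projections can then be reused without architectural changes.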

Next, these image features are fed into a pre-trained text-to-image model, Stable Diffusion v1.4. The key step is that they replace the model's original text features as the conditioning input to its cross-attention layers.
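The swap happens in cross-attention, where queries come from the U-Net's latent image tokens and keys and values come from the conditioning sequence. The sketch below is a simplified single-head version (Stable Diffusion itself uses multi-head attention); the sizes, including 64 x 64 latent positions of width 320 at the highest-resolution block, are illustrative assumptions, and the weights are random. The only thing that changes between text-conditioned and image-conditioned generation is what is passed as `cond_tokens`.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(latent_tokens, cond_tokens, w_q, w_k, w_v):
    """Single-head cross-attention: queries from latents, keys/values from conditioning."""
    q = latent_tokens @ w_q  # (n_latent, d_head)
    k = cond_tokens @ w_k    # (n_cond, d_head)
    v = cond_tokens @ w_v    # (n_cond, d_head)
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (n_latent, n_cond)
    weights = softmax(scores, axis=-1)       # each latent token attends over conditioning tokens
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model, d_cond, d_head = 320, 768, 64  # illustrative sizes (assumed)
latents = rng.normal(size=(64 * 64, d_model))

# Text-to-image SD would pass CLIP text embeddings (77 x 768) here.
# Image-conditioned try-off instead passes adapted image embeddings of the same shape.
image_cond = rng.normal(size=(77, d_cond))

w_q = rng.normal(0, 0.02, size=(d_model, d_head))
w_k = rng.normal(0, 0.02, size=(d_cond, d_head))
w_v = rng.normal(0, 0.02, size=(d_cond, d_head))

out, attn = cross_attention(latents, image_cond, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (4096, 64) (4096, 77)
```

Because only the source of the keys and values changes, every spatial location in the generated garment image can pull detail (texture, print, logo) directly from the reference photo's features.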

Because the model is conditioned on image features rather than text, TryOffDiff can be optimized directly for the virtual try-off (VTOFF) task. By training the adapter layers and the diffusion model jointly, TryOffDiff learns to map a garment as worn in a photo to its standardized form.
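Joint training can be summarized with the standard latent-diffusion noise-prediction objective. This is a sketch under assumptions: we assume TryOffDiff uses the usual Stable Diffusion loss, that $z_0$ is the VAE latent of the target garment image, and that the SigLIP encoder $E$ is kept fixed while the U-Net $\epsilon_\theta$ and adapter $A_\phi$ are optimized together. None of these details are stated in the article.

$$
c = A_\phi\big(E(x_{\text{ref}})\big), \qquad
z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon
$$

$$
\mathcal{L}(\theta, \phi) = \mathbb{E}_{z_0,\; \epsilon \sim \mathcal{N}(0, I),\; t}\Big[\big\lVert \epsilon - \epsilon_\theta(z_t, t, c) \big\rVert_2^2\Big]
$$

Since $c$ appears inside $\epsilon_\theta$, gradients of $\mathcal{L}$ flow through the cross-attention layers into the adapter parameters $\phi$, which is what lets the adapter and the diffusion model be trained simultaneously.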

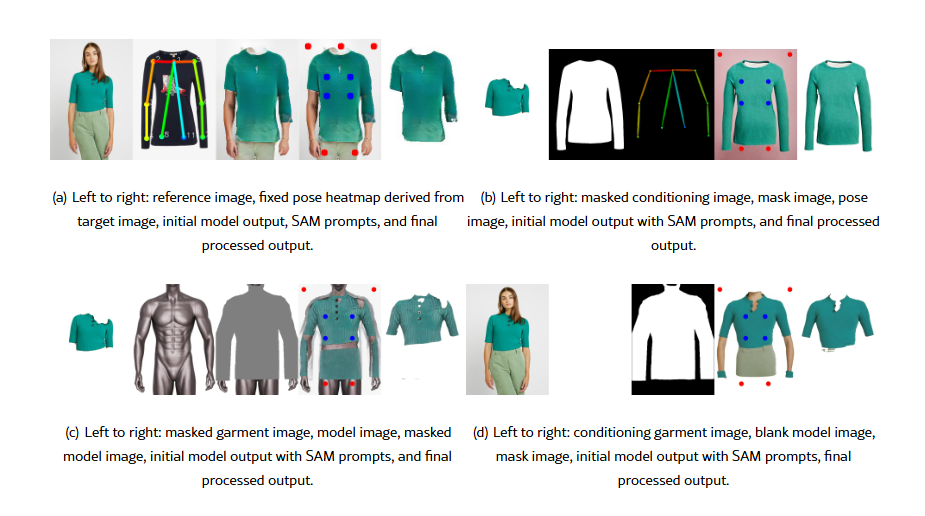

Applying existing state-of-the-art methods to VTOFF (virtual try-off). Top left: GAN-Pose, a pose-transfer method. From left to right: reference image, pose heatmap adjusted to the target image, initial model output, SAM prompts, and final processed output. Top right: ViscoNet, a view-synthesis method. From left to right: masked conditioning image, masked image, pose image, initial model output with SAM prompts, and final processed output.

Bottom left: OOTDiffusion, a recent virtual try-on method. From left to right: masked garment image, model image, masked model image, initial model output with SAM prompts, and final processed output. Bottom right: CatVTON, another recent virtual try-on method. From left to right: conditioning garment image, blank model image, masked image, initial model output with SAM prompts, and final processed output.

Project website: https://rizavelioglu.github.io/tryoffdiff

arXiv technical paper: https://arxiv.org/pdf/2411.18350