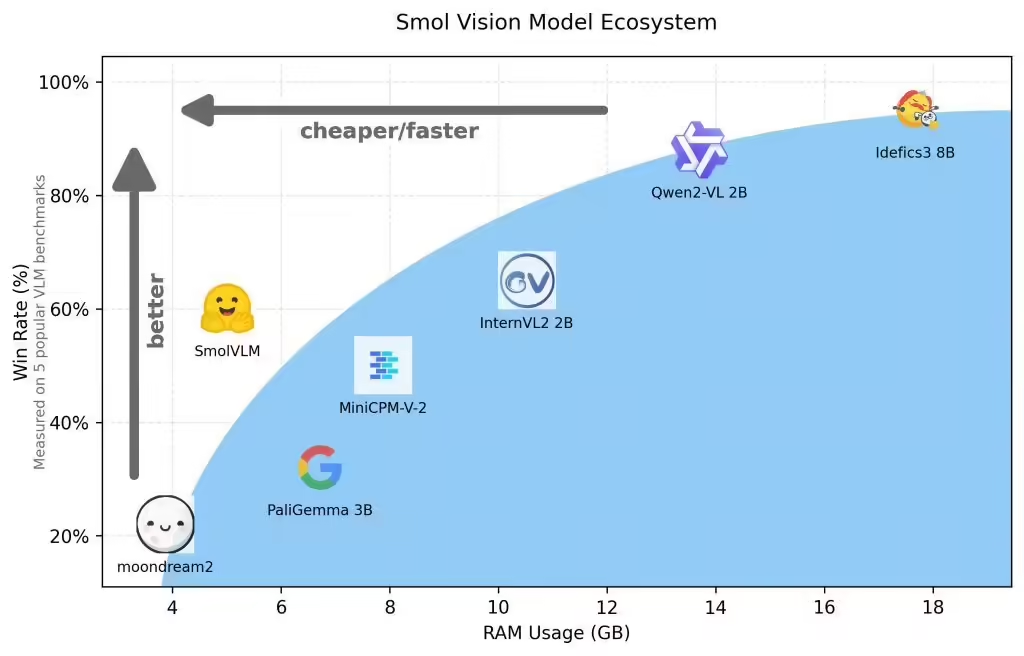

The Hugging Face platform published a blog post yesterday (November 26) announcing SmolVLM, a vision-language model (VLM). With only 2 billion parameters and designed for on-device inference, it stands out among similar models for its extremely low memory footprint.

According to Hugging Face, SmolVLM is small, fast, memory-efficient, and fully open source: all model checkpoints, VLM datasets, training recipes, and tools are released under the Apache 2.0 license.

SmolVLM comes in three versions: SmolVLM-Base (for downstream fine-tuning), SmolVLM-Synthetic (fine-tuned on synthetic data), and SmolVLM-Instruct (an instruction-tuned version that can be used directly in interactive applications).

Architecture

SmolVLM's most important feature is its architecture, which borrows from Idefics3 and uses SmolLM2 1.7B as the language backbone. A pixel shuffle strategy compresses visual information by a factor of up to 9.
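Pixel shuffle (also called space-to-depth) is how a 9x compression can happen without discarding features: each 3x3 block of neighboring visual tokens is folded into a single token with nine times as many channels, so the language model receives nine times fewer image tokens. The following is a minimal, generic NumPy sketch of that operation, not the actual Idefics3 or SmolVLM code; the function name and the grid sizes in the usage note are illustrative assumptions.

```python
import numpy as np


def pixel_shuffle(tokens: np.ndarray, ratio: int) -> np.ndarray:
    """Space-to-depth on a grid of visual tokens (generic sketch).

    tokens: array of shape (height, width, dim) from a vision encoder.
    Returns an array of shape (height // ratio, width // ratio, dim * ratio**2),
    i.e. ratio**2 times fewer tokens, each ratio**2 times wider.
    """
    h, w, d = tokens.shape
    if h % ratio or w % ratio:
        raise ValueError("grid height and width must be divisible by ratio")
    # Split each spatial axis into (block index, offset inside the block).
    x = tokens.reshape(h // ratio, ratio, w // ratio, ratio, d)
    # Move the two in-block offsets next to the channel axis.
    x = x.transpose(0, 2, 1, 3, 4)
    # Flatten each ratio x ratio block into one wide token.
    return x.reshape(h // ratio, w // ratio, ratio * ratio * d)


grid = np.zeros((27, 27, 4))          # assumed 27x27 grid of encoder outputs
print(pixel_shuffle(grid, 3).shape)   # (9, 9, 36): 729 tokens become 81
```

In a real model, a projection layer would then map the widened tokens into the language model's embedding size; that step is omitted here.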

The training data includes the Cauldron and Docmatix datasets, and SmolLM2's context window was extended so the model can handle longer text sequences and multiple images. By optimizing image encoding and inference, the model substantially reduces its memory footprint, addressing a problem of earlier, larger models, which could run slowly or even crash on ordinary devices.

Memory

SmolVLM encodes each 384x384-pixel image patch into 81 tokens. As a result, for the same test image, SmolVLM uses only 1,200 tokens, whereas Qwen2-VL uses 16,000.
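The 81-token figure is consistent with simple arithmetic, assuming a vision encoder that splits each 384x384 tile into 14x14-pixel patches (a SigLIP-style setup; the patch size is an assumption, not stated in the announcement) followed by the 9x pixel-shuffle compression mentioned above. The helper names below are hypothetical.

```python
def tokens_per_tile(tile_px: int = 384, patch_px: int = 14, shuffle_ratio: int = 3) -> int:
    """Visual tokens for one square tile (patch size and ratio are assumptions)."""
    patches_per_side = tile_px // patch_px               # 384 // 14 = 27
    tokens_per_side = patches_per_side // shuffle_ratio  # 27 // 3 = 9
    return tokens_per_side * tokens_per_side             # 9 * 9 = 81


def token_reduction(baseline_tokens: int, model_tokens: int) -> float:
    """How many times fewer tokens one model uses than another for the same input."""
    return baseline_tokens / model_tokens


print(tokens_per_tile())                          # 81
print(tokens_per_tile(shuffle_ratio=1))           # 729 patches before compression
print(round(token_reduction(16_000, 1_200), 1))   # 13.3
```

On the figures quoted above, Qwen2-VL spends roughly 13 times as many tokens on the test image as SmolVLM.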

Throughput

SmolVLM performs well on several benchmarks, including MMMU, MathVista, MMStar, DocVQA, and TextVQA. Compared with Qwen2-VL, it achieves 3.3 to 4.5 times higher prefill throughput and 7.5 to 16 times higher generation throughput.