Nov. 19, 2024 (Newswire.com) - Alibaba's Tongyi Qianwen (Qwen) team published a blog post yesterday (November 18) announcing that, after months of optimization and polishing, it has launched the Qwen2.5-Turbo open-source AI model in response to community requests for a longer context length.

Qwen2.5-Turbo extends the context length from 128,000 tokens to 1 million tokens, roughly equivalent to 1 million English words or 1.5 million Chinese characters. That is enough to hold 10 complete novels, 150 hours of speech transcripts, or 30,000 lines of code.

Note: Context length is the maximum amount of text, measured in tokens, that a large language model (LLM) can take into account and generate in a single processing session.

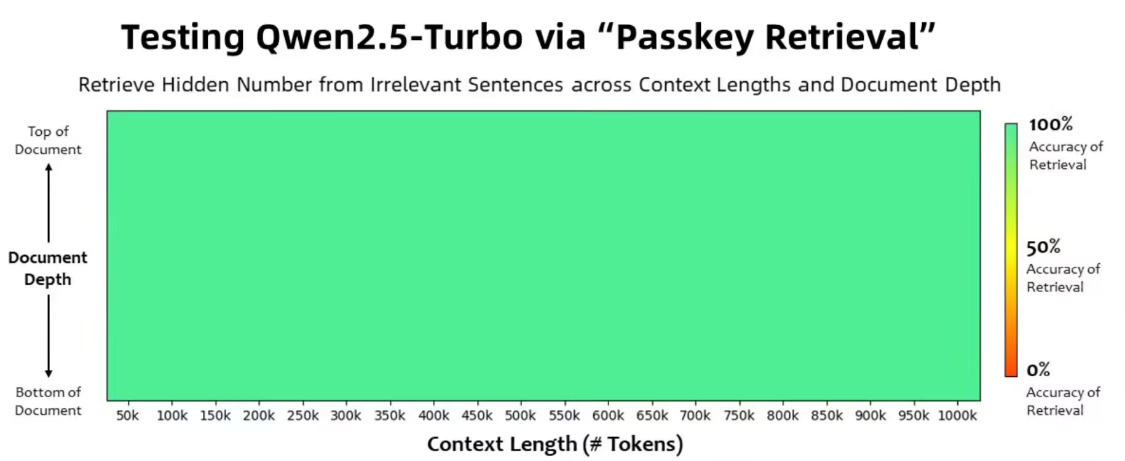

The model achieves 100% accuracy on the 1M-token Passkey Retrieval task and scores 93.1 on the RULER long-text benchmark, outperforming GPT-4 and GLM4-9B-1M.
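For readers unfamiliar with passkey retrieval, the idea is to hide a short secret inside a very long stretch of irrelevant text and then ask the model to repeat it. The Python sketch below illustrates that setup only; the filler text, the function names `build_passkey_prompt` and `is_correct`, and the use of word counts as a rough stand-in for tokens are all assumptions for illustration, not the benchmark's actual implementation.

```python
import random

# Hypothetical distractor text; the real benchmark's filler may differ.
FILLER = ("The grass is green. The sky is blue. The sun is yellow. "
          "Here we go. There and back again.")


def build_passkey_prompt(approx_words, passkey, seed=0):
    """Return a long distractor prompt with one passkey sentence hidden inside.

    Word count is used as a crude proxy for token count (an assumption).
    """
    rng = random.Random(seed)
    words_per_chunk = len(FILLER.split())
    repeats = approx_words // words_per_chunk + 1
    chunks = [FILLER] * repeats
    needle = f"The pass key is {passkey}. Remember it."
    # Hide the needle at a random position among the filler chunks.
    chunks.insert(rng.randrange(len(chunks) + 1), needle)
    return " ".join(chunks) + " What is the pass key?"


def is_correct(model_answer, passkey):
    """Score a reply as correct if it contains the passkey digits."""
    return str(passkey) in model_answer


prompt = build_passkey_prompt(approx_words=2_000, passkey=71432)
```

Accuracy on the task is then the share of such prompts, across many lengths and needle positions, for which the model's reply passes `is_correct`.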

By integrating sparse attention mechanisms, the team cut the time to first token for a 1-million-token input from 4.9 minutes to 68 seconds, a 4.3-fold speedup that makes the model markedly more responsive when processing long texts.
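The 4.3-fold figure follows directly from the two latencies quoted above. A quick check in Python, where the variable and function names are illustrative and the prefill rates are derived values rather than numbers published by the team:

```python
def speedup(before_seconds, after_seconds):
    """Ratio of old latency to new latency."""
    return before_seconds / after_seconds


ttft_before = 4.9 * 60   # 4.9 minutes, about 294 seconds
ttft_after = 68.0        # seconds
factor = speedup(ttft_before, ttft_after)

# Implied prompt-processing rate for a 1-million-token input (derived).
tokens = 1_000_000
rate_before = tokens / ttft_before
rate_after = tokens / ttft_after

print(f"speedup: {factor:.1f}x")  # speedup: 4.3x
print(f"prefill rate: {rate_before:,.0f} -> {rate_after:,.0f} tokens/s")
```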

Qwen2.5-Turbo keeps the processing cost at ¥0.3 (0.3 yuan) per million tokens, which lets it process 3.6 times as many tokens as GPT-4o-mini for the same cost. This makes Qwen2.5-Turbo an economically competitive, cost-effective option for long-context processing.
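To make the pricing concrete, the sketch below works through the arithmetic in Python. It is unit-agnostic: 0.3 is taken as the quoted price per million tokens in whatever currency applies. It also reads the 3.6x figure as a same-budget comparison, so the comparison price it derives is an implied value under that assumption, not a published one. All names are illustrative.

```python
PRICE_PER_MILLION_TOKENS = 0.3   # quoted price per 1M tokens
TOKEN_ADVANTAGE = 3.6            # quoted ratio versus GPT-4o-mini


def cost(tokens, price_per_million=PRICE_PER_MILLION_TOKENS):
    """Cost of processing `tokens` tokens at a flat per-million price."""
    return tokens / 1_000_000 * price_per_million


def tokens_for_budget(budget, price_per_million=PRICE_PER_MILLION_TOKENS):
    """Number of tokens a given budget buys at a flat per-million price."""
    return round(budget / price_per_million * 1_000_000)


# Filling the whole 1M-token context once costs the per-million price.
full_context_cost = cost(1_000_000)

# If the same budget buys 3.6x fewer tokens elsewhere, the implied
# comparison price per million tokens is 3.6x higher (derived value).
implied_comparison_price = PRICE_PER_MILLION_TOKENS * TOKEN_ADVANTAGE

print(f"one full 1M-token context: {full_context_cost:.2f}")
print(f"implied comparison price per 1M tokens: {implied_comparison_price:.2f}")
```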

Although Qwen2.5-Turbo performs well on several benchmarks, the team acknowledges that its performance on long-sequence tasks in real-world scenarios may not yet be stable enough, and that the inference cost of large models needs further optimization.

The team promises to keep aligning the model with human preferences, improving inference efficiency, and exploring more robust long-context models.
