November 19 news: at the SC24 supercomputing conference yesterday (local time), NVIDIA introduced two new pieces of AI hardware, the H200 NVL PCIe GPU and the GB200 NVL4 superchip.

NVIDIA says that about 70% of enterprise racks supply less than 20 kW of power and are air-cooled. The H200 NVL, in a PCIe add-in card (AIC) form factor, is a relatively low-power, air-cooled AI compute card aimed at these environments.

The H200 NVL is a dual-slot card. Its maximum TDP drops to 600 W from the 700 W of the H200 SXM, and compute throughput is somewhat lower across all precisions (for example, INT8 Tensor Core performance falls by about 15.6%). HBM capacity and bandwidth, however, are the same as on the H200 SXM: 141 GB and 4.8 TB/s.
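The power figures above can be checked with a little arithmetic. The sketch below uses only the article's numbers (700 W vs 600 W TDP, and the 20 kW rack threshold). The card count is a hypothetical GPU-only ceiling, not a deployment figure: a real rack also has to power CPUs, networking, fans, and power-delivery losses.

```python
# Sketch: TDP arithmetic behind the H200 NVL's positioning.
# Figures are from the article; max_cards_in_budget is a hypothetical,
# GPU-only upper bound that ignores all non-GPU rack power.

def percent_reduction(old: float, new: float) -> float:
    """Relative drop from old to new, in percent."""
    return (old - new) / old * 100.0

def max_cards_in_budget(rack_watts: float, card_watts: float) -> int:
    """Hypothetical ceiling: how many cards' TDP fit in the rack budget,
    ignoring CPUs, networking, fans and power-delivery overhead."""
    return int(rack_watts // card_watts)

H200_SXM_TDP_W = 700
H200_NVL_TDP_W = 600
RACK_BUDGET_W = 20_000  # the article's "less than 20 kW" threshold

if __name__ == "__main__":
    drop = percent_reduction(H200_SXM_TDP_W, H200_NVL_TDP_W)
    print(f"TDP reduction: {drop:.1f}%")
    ceiling = max_cards_in_budget(RACK_BUDGET_W, H200_NVL_TDP_W)
    print(f"GPU-only ceiling per 20 kW rack: {ceiling}")
```

Run as a script, this reports a TDP reduction of about 14.3%, slightly smaller than the roughly 15.6% INT8 throughput drop the article cites, and a GPU-only ceiling of 33 cards.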

In addition, H200 NVL PCIe GPUs support two-way or four-way NVLink bridge interconnects, providing 900 GB/s per GPU.

NVIDIA says the H200 NVL offers 1.5 times the memory capacity and 1.2 times the bandwidth of the previous-generation H100 NVL, along with 1.7 times the AI inference performance and 30% higher performance in HPC applications.
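The memory multipliers can be reproduced from the spec sheets. The H200 NVL figures below come from the article; the H100 NVL figures (94 GB of HBM, 3.9 TB/s) are an assumption based on NVIDIA's published H100 NVL specifications and are not stated in this article.

```python
# Sketch: sanity-check the H200 NVL vs H100 NVL memory multipliers.
# H200 NVL values are from the article; H100 NVL values are ASSUMED
# from NVIDIA's public spec sheet, not from the article itself.

H200_NVL = {"hbm_gb": 141, "bandwidth_tbs": 4.8}
H100_NVL = {"hbm_gb": 94, "bandwidth_tbs": 3.9}  # assumed spec values

def ratio(new: dict, old: dict, key: str) -> float:
    """Generation-over-generation multiplier for one spec field."""
    return new[key] / old[key]

if __name__ == "__main__":
    print(f"capacity:  {ratio(H200_NVL, H100_NVL, 'hbm_gb'):.2f}x")
    print(f"bandwidth: {ratio(H200_NVL, H100_NVL, 'bandwidth_tbs'):.2f}x")
```

Under these assumptions capacity works out to exactly 1.5x, while bandwidth comes to about 1.23x, which the headline figure rounds to 1.2x.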

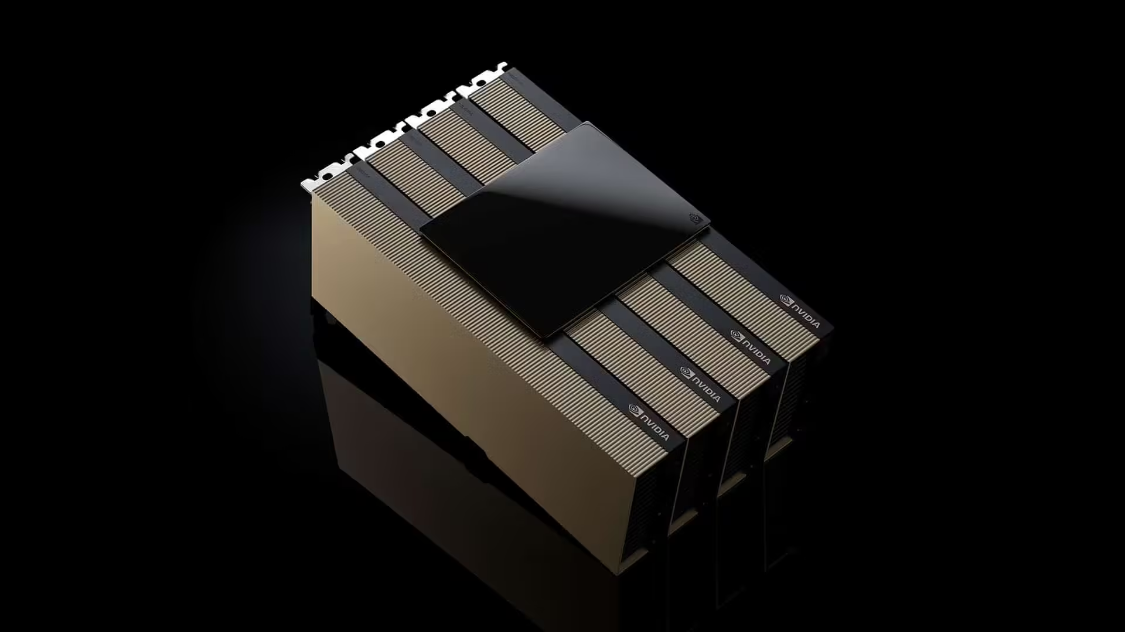

NVIDIA also introduced the GB200 NVL4 superchip, a single-server solution. The module combines two Grace CPUs and four Blackwell GPUs with a 1.3 TB HBM memory pool, making it equivalent to two GB200 Grace Blackwell Superchips, and its total power consumption reaches 5.4 kW.

Compared with the previous-generation GH200 NVL4 system, which has four Grace CPUs and four Hopper GPUs, the new GB200 NVL4 delivers 2.2 times the simulation performance, 1.8 times the AI training performance, and 1.8 times the AI inference performance.

The GB200 NVL4 superchip will be available in the second half of 2025.