Nov. 19 - A research team from Peking University, Tsinghua University, Pengcheng Lab, Alibaba's DAMO Academy, and Lehigh University has released LLaVA-o1, a visual language model that is the first to perform GPT-o1-like spontaneous, systematic reasoning (the term "spontaneous" is explained at the end of this article).

LLaVA-o1 is a novel visual language model (VLM) designed to perform autonomous multi-stage reasoning.

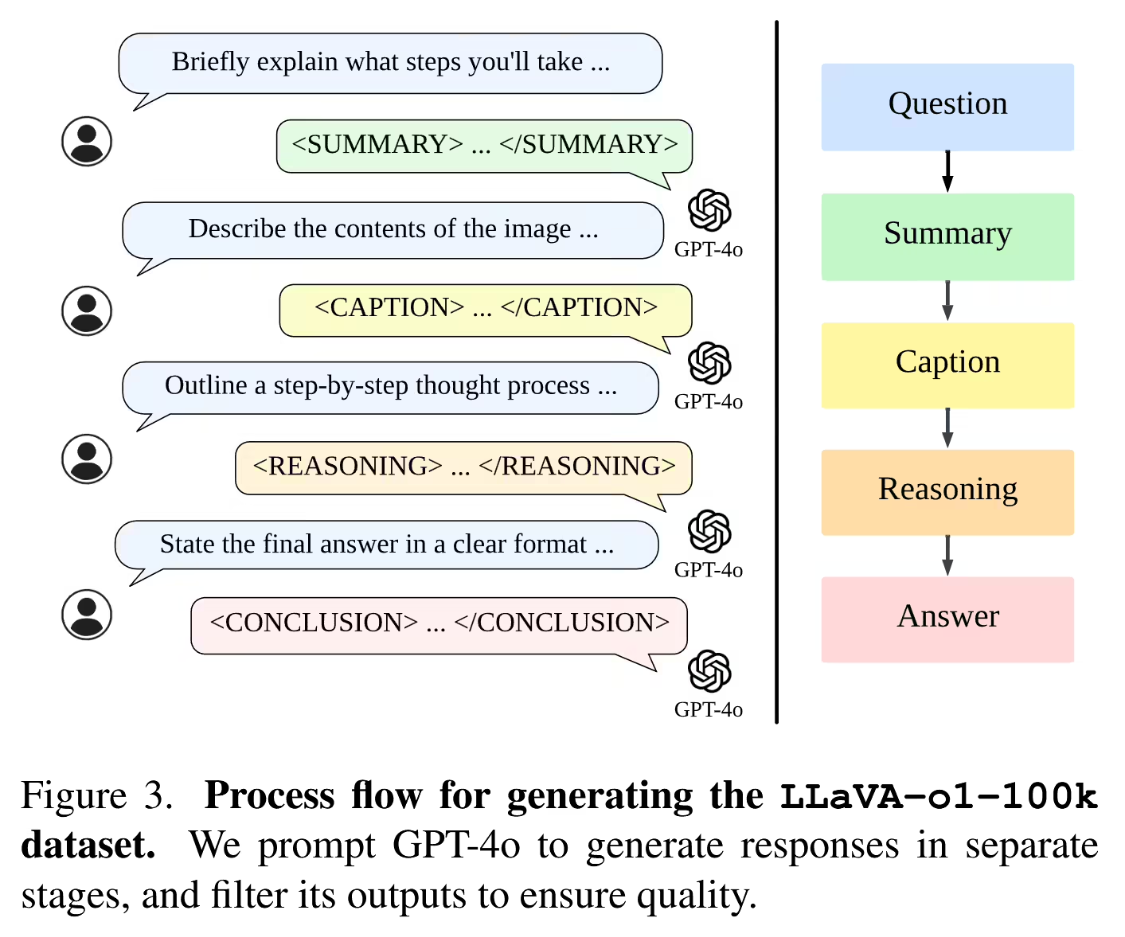

LLaVA-o1, an 11-billion-parameter model, is built on Llama-3.2-Vision-Instruct and structures its reasoning into four stages: summary, caption, reasoning, and conclusion.
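As a hedged illustration of what a staged response might look like, the sketch below assumes each stage is wrapped in a tag pair such as `<SUMMARY>...</SUMMARY>`. The tag format, the `split_stages` helper, and the example text are all hypothetical choices for this sketch, not a documented output format; only the four stage names come from the article.

```python
import re

# Stage names as listed in the article; the <TAG>...</TAG> format is an assumption.
STAGES = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")

def split_stages(response: str) -> dict[str, str]:
    """Map each lowercase stage name to its text; missing stages map to ''."""
    result = {}
    for stage in STAGES:
        # Non-greedy match between the opening and closing tag, across newlines.
        match = re.search(rf"<{stage}>(.*?)</{stage}>", response, re.DOTALL)
        result[stage.lower()] = match.group(1).strip() if match else ""
    return result

# Hypothetical model output used only to exercise the parser.
example = (
    "<SUMMARY>Count the apples in the image.</SUMMARY>"
    "<CAPTION>A bowl holds three red apples.</CAPTION>"
    "<REASONING>Each apple is visible, so the count is 3.</REASONING>"
    "<CONCLUSION>3</CONCLUSION>"
)
print(split_stages(example)["conclusion"])  # prints 3
```

Keeping the stages separable in this way is what makes it possible to inspect, or search over, one stage at a time.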

The model is fine-tuned on a dataset called LLaVA-o1-100k, which combines samples from visual question answering (VQA) sources with structured reasoning annotations generated by GPT-4o.

LLaVA-o1 uses stage-level beam search, an inference-time scaling technique that generates multiple candidate responses at each reasoning stage and keeps the best one before moving on to the next stage.
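The idea of searching stage by stage, rather than token by token or over whole answers, can be sketched as follows. This is a minimal sketch under stated assumptions: `generate` and `score` are hypothetical stand-ins for a model call and a candidate-quality judge (how LLaVA-o1 actually judges candidates is not described here), and `beam_width=1` reduces the search to keeping the single best candidate per stage.

```python
from typing import Callable

# The four stages named in the article, processed in order.
STAGES = ("summary", "caption", "reasoning", "conclusion")

def stage_level_beam_search(
    generate: Callable[[str, str, int], list[str]],  # hypothetical: (prefix, stage, n) -> n candidates
    score: Callable[[str], float],                   # hypothetical: higher means better
    num_candidates: int = 4,
    beam_width: int = 1,
) -> str:
    """Build a response one stage at a time, keeping the best partial responses."""
    beams = [""]  # partial responses built so far
    for stage in STAGES:
        expanded = []
        for prefix in beams:
            # Sample several candidate continuations for this stage only.
            for cand in generate(prefix, stage, num_candidates):
                expanded.append(prefix + cand)
        # Keep the top `beam_width` partial responses before the next stage.
        expanded.sort(key=score, reverse=True)
        beams = expanded[:beam_width]
    return beams[0]

# Toy stand-ins so the sketch runs without a model (hypothetical).
def toy_generate(prefix: str, stage: str, n: int) -> list[str]:
    return [f"[{stage}:{i}]" for i in range(n)]

def toy_score(text: str) -> float:
    # Prefers candidates with larger indices by summing the digits in the text.
    return sum(int(ch) for ch in text if ch.isdigit())

print(stage_level_beam_search(toy_generate, toy_score, num_candidates=3))
# prints [summary:2][caption:2][reasoning:2][conclusion:2]
```

Selecting at stage boundaries gives the judge a complete, self-contained unit (a full summary or a full caption) to evaluate, which is coarser than token-level beam search but finer than picking among whole finished answers.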

The model handles complex tasks well and overcomes limitations that traditional visual language models face on complex visual question-answering tasks.

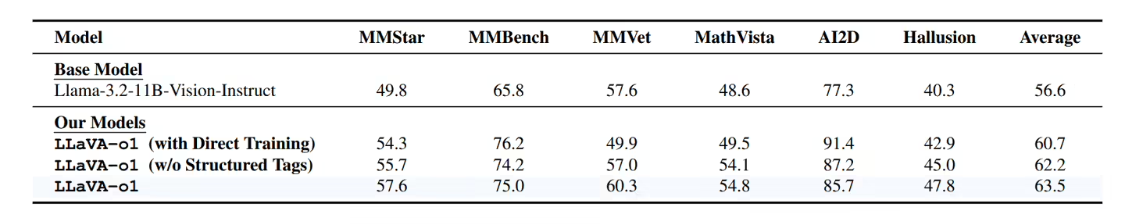

Compared with its base model, LLaVA-o1 improves performance by 8.9% on multimodal reasoning benchmarks, outperforming a number of larger and closed-source models.

LLaVA-o1 fills an important gap between text-based and visual question-answering models. Its strong results across several benchmarks, especially on visual reasoning problems in math and science, show the value of structured reasoning in visual language models.

Spontaneous AI refers to AI systems that can mimic the spontaneous behavior of animals. Research in this area focuses on how to design robots or intelligent systems that exhibit spontaneous behavior, using machine learning and complex temporal patterns.
